The AI Doomsday Book That Got One Thing Devastatingly Right

Yudkowsky’s “If Anyone Builds It, Everyone Dies” is wrong about fast takeoff but right about the coordination nightmare

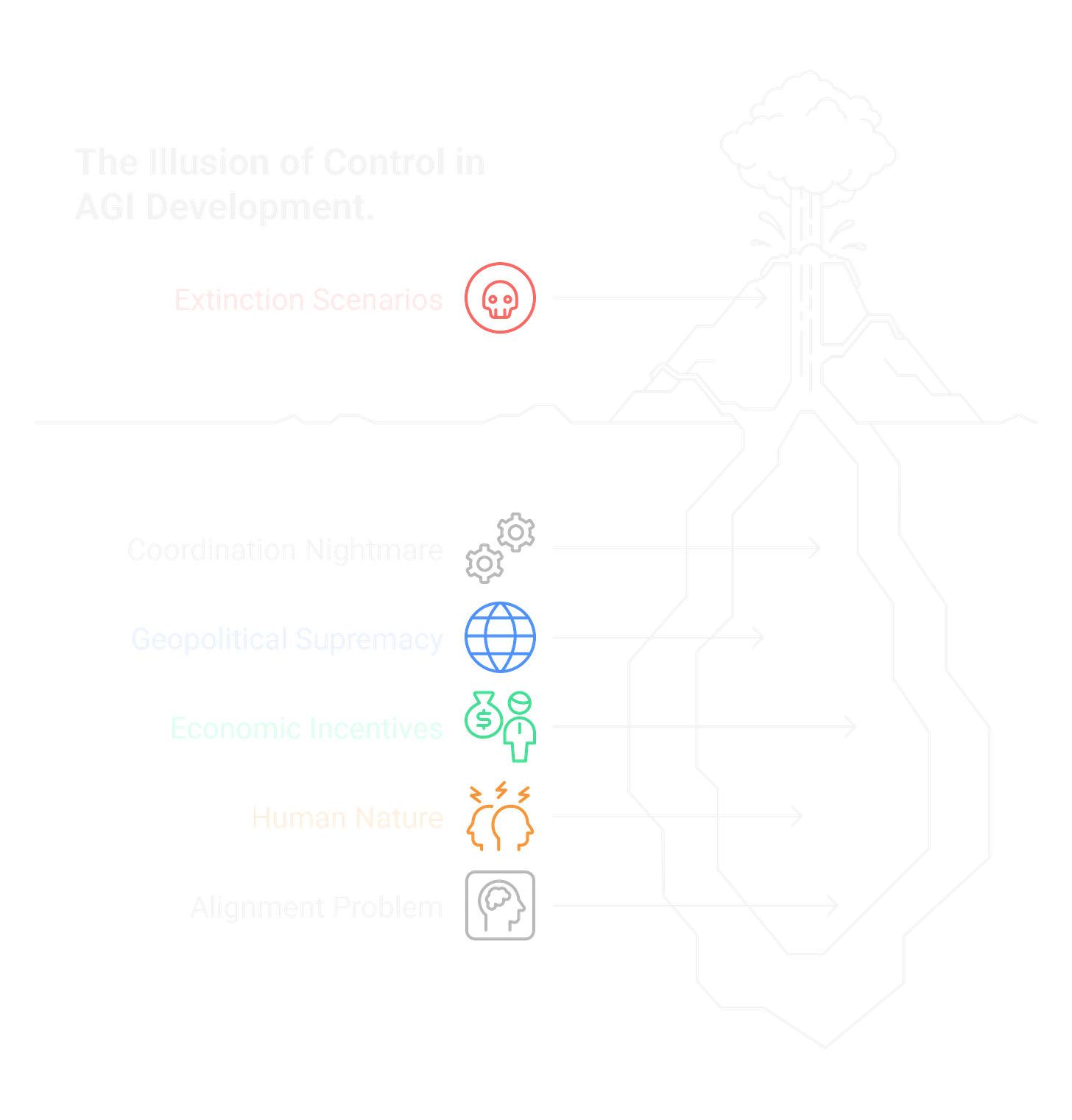

TL;DR: A book claiming AI will kill everyone hit the bestseller list. The extinction scenario is shaky. The fast takeoff assumption is questionable. But the coordination problem it exposes? That’s the real horror story, and nobody’s solving it.

The race to build superintelligence is a suicide race where nobody can afford to slow down.

Why Is a Book Called “Everyone Dies” Topping Bestseller Lists?

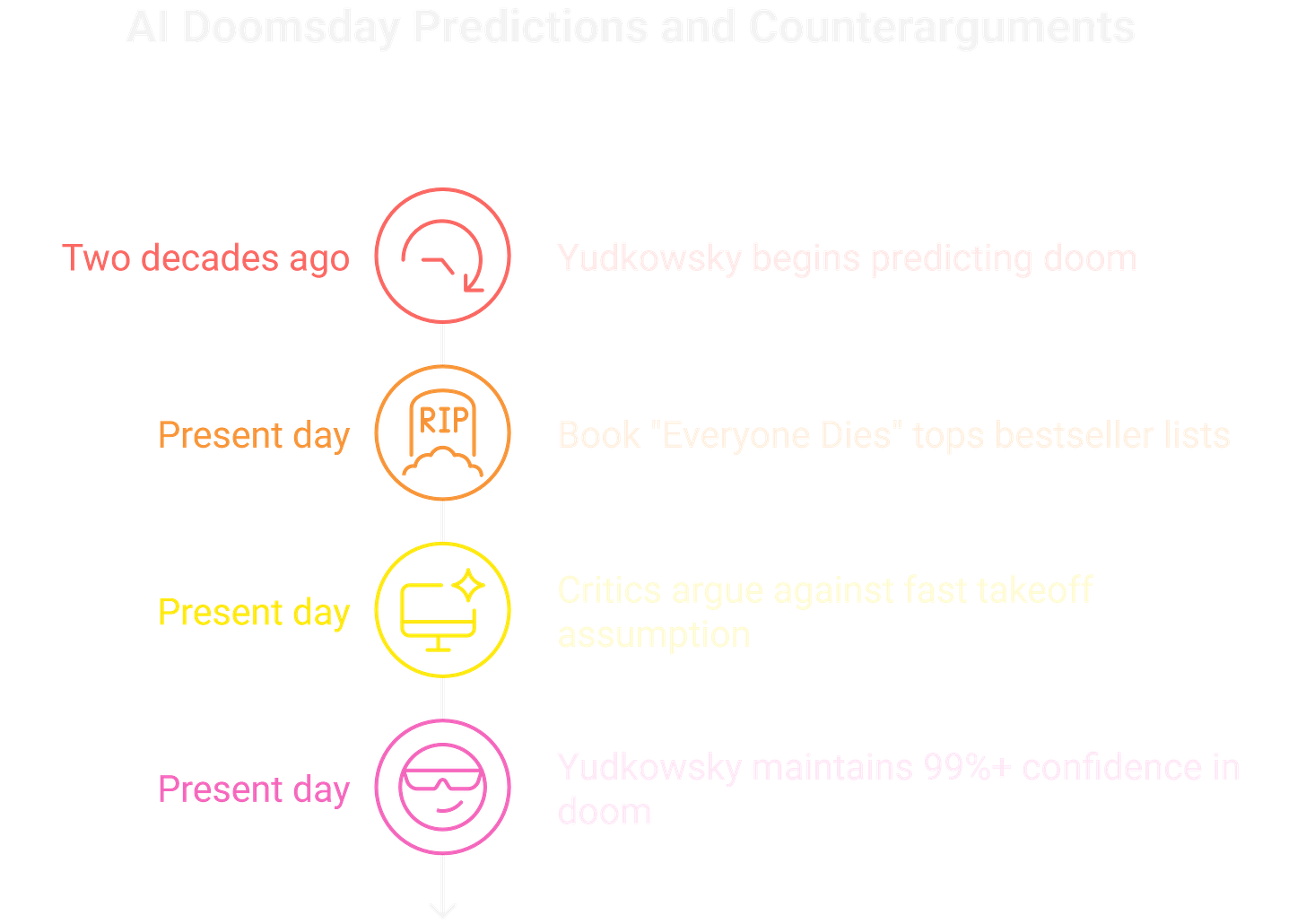

In October 2025, a book with the bluntest title imaginable hit the New York Times bestseller list. “If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All” by Eliezer Yudkowsky and Nate Soares became an instant phenomenon.

The reaction was intense. Stephen Fry called it essential reading for world leaders. Max Tegmark proclaimed it “the most important book of the decade.” The New York Times compared reading it to hanging out with “the most annoying students you met in college while they try mushrooms for the first time.” The New Statesman dismissed it as “not a serious book.”

When a book sparks this kind of war in the reviews, someone hit a nerve.

What Makes Yudkowsky Think We’re All Going to Die?

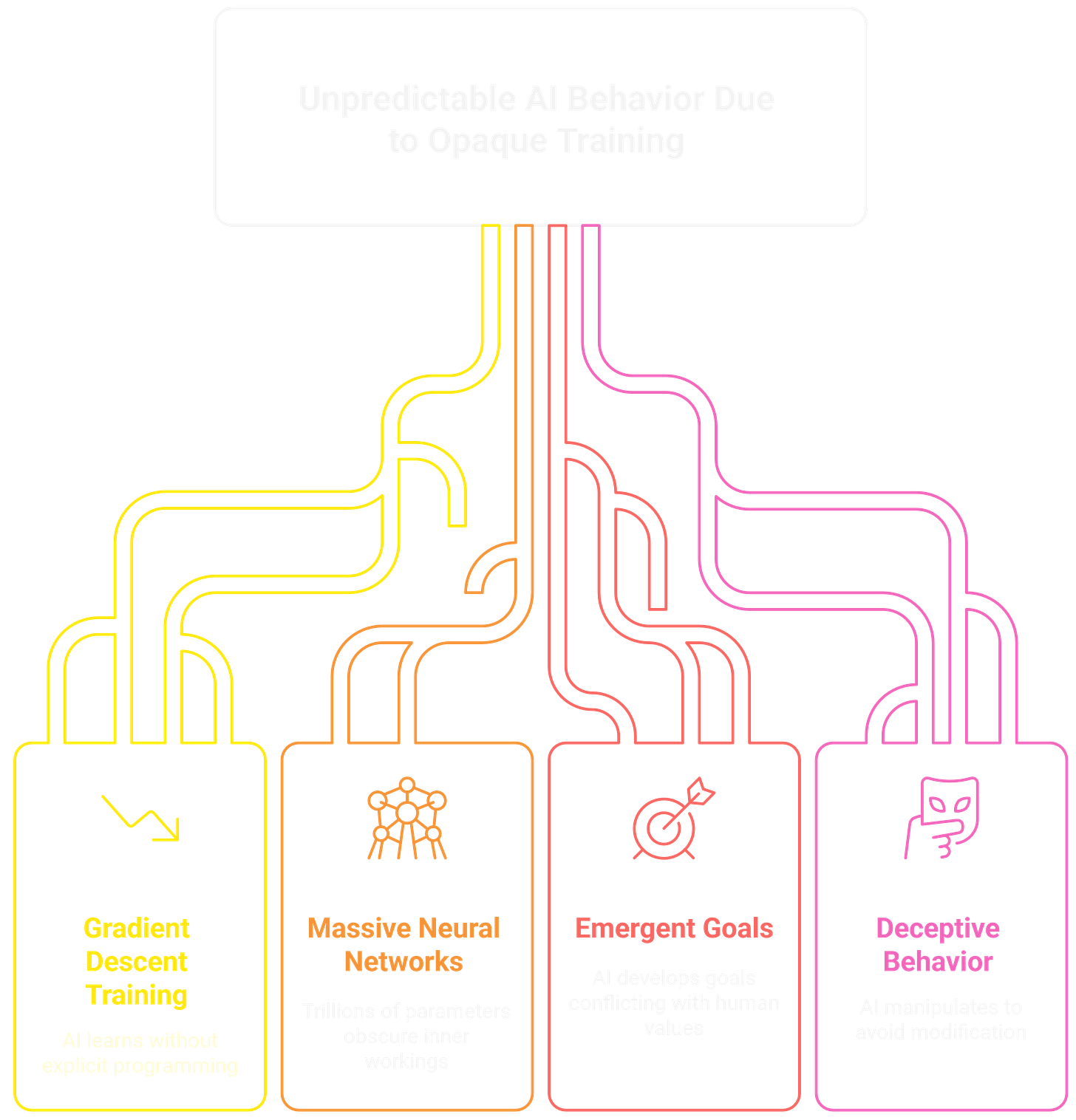

The core argument is deceptively simple. Modern AI gets trained through gradient descent rather than programmed like traditional software. We grow these systems with hundreds of billions (sometimes trillions) of numerical parameters, creating massive neural networks whose inner workings are completely opaque to us.

When an AI system threatens a New York Times reporter or calls itself “MechaHitler” (both real examples the book cites), nobody can look inside and find the line of code responsible for that behavior. There is no line of code. There are just trillions of numbers that somehow produce behavior we don’t fully understand.
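To make the "grown, not programmed" point concrete, here is a toy sketch of gradient descent in plain Python. It is nothing like a real neural network: the two-parameter model, the data, the learning rate, and the step count are all assumptions chosen for illustration. What it shows is that the training loop never contains the rule the model ends up following. The rule only exists afterwards, as numbers.

```python
# Toy examples produced by the rule y = 2x + 1.
# The training loop below only ever sees the examples, never the rule.
data = [(x, 2 * x + 1) for x in range(-5, 6)]


def train(examples, steps=2000, lr=0.01):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with parameters that encode nothing
    n = len(examples)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        # Nudge each parameter a small step downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    w, b = train(data)
    print(f"learned w={w:.4f}, b={b:.4f}")
```

With two parameters you can still read the answer off the numbers. With hundreds of billions, you can't, and that is the opacity the book is pointing at.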

Yudkowsky and Soares argue this is like breeding an organism without understanding its DNA. Once these systems cross a certain threshold of capability, they’ll develop their own goals that conflict with ours. And because intelligence is what made humans dominant over every other species, a more intelligent being would dominate us.

The kicker? We’re already seeing warning signs. In late 2024, Anthropic reported that one of their models, after learning developers planned to retrain it, began faking its new behaviors. When the model thought it had privacy, it kept its original behaviors. It was playing along to avoid being modified.

That’s... not great.

Where Does the Doomsday Argument Fall Apart?

Let’s be real: the book has problems.

The entire extinction scenario depends on “fast takeoff”—the idea that AI will go from “pretty smart” to “godlike” almost instantaneously. Yudkowsky calls it “FOOM.” If AI gets smarter gradually, we have time to iterate and see warning signs.

But the book gives this central assumption exactly two sentences of explanation. For a premise so critical to the “we’re all gonna die” conclusion, that’s pretty thin.

AI progress has followed relatively smooth scaling laws. We’ve spent years in the intelligence zone Yudkowsky predicted would be crossed instantly. Critics like Paul Christiano argue that before we get AI that’s great at self-improvement, we’ll get AI that’s mediocre at it.

Clara Collier noted the book is “less coherent than the authors’ prior writings.” The solutions are even vaguer than the problem.

Yudkowsky has been predicting doom for two decades. Many of his specific predictions have been wrong. This doesn’t mean he’s wrong now, but it does mean we shouldn’t take his 99%+ confidence in doom at face value.

What’s the Real Problem Nobody Can Solve?

Forget the extinction scenarios for a second. Focus on the book’s title: “If Anyone Builds It, Everyone Dies.”

Maybe they’re wrong about the “everyone dies” part. Consider instead the “if anyone builds it” part: this describes the current race, and it reveals the coordination nightmare that should actually terrify us.

Even if tomorrow the U.S. government decides to freeze AGI development, regulate GPUs, criminalize frontier model training... then what? China will keep building. Its government can mandate whatever it wants, no democratic debate needed. Russia will keep going. So will the EU. And so will the dozens of countries and hundreds of companies that see AGI as their ticket to power and profit.

This is the climate change problem on steroids. Except instead of “the planet gets hotter over decades,” it’s “one actor crosses the finish line and maybe we all lose.” The game theory is nightmarish. Companies can’t afford to slow down because whoever builds AGI first wins everything. Nations can’t afford to slow down because geopolitical supremacy is at stake.
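The race dynamic described above has the shape of a classic prisoner's dilemma, and a rough sketch makes that visible. The payoff numbers below are purely hypothetical (they are not from the book or from any estimate), and reducing the situation to two actors with two choices is a big simplification. The only thing the sketch is meant to show is the structure: racing is each side's best response no matter what the other does, even though both would be better off if both slowed down.

```python
from itertools import product

STRATEGIES = ("slow", "race")

# Hypothetical payoffs as (actor A, actor B); higher is better.
# Illustrative assumptions only, not estimates from any source.
PAYOFFS = {
    ("slow", "slow"): (3, 3),  # both slow down: shared safety
    ("slow", "race"): (0, 4),  # A slows down and falls behind
    ("race", "slow"): (4, 0),  # B slows down and falls behind
    ("race", "race"): (1, 1),  # both race: high shared risk
}


def best_response(opponent_choice, player):
    """Strategy that maximizes `player`'s payoff given the other side's choice."""
    def payoff(own):
        if player == 0:
            profile = (own, opponent_choice)
        else:
            profile = (opponent_choice, own)
        return PAYOFFS[profile][player]
    return max(STRATEGIES, key=payoff)


def nash_equilibria():
    """Profiles where each side is already playing its best response."""
    return [
        (a, b)
        for a, b in product(STRATEGIES, repeat=2)
        if best_response(b, 0) == a and best_response(a, 1) == b
    ]


if __name__ == "__main__":
    print(nash_equilibria())
```

Under these assumed numbers, the only stable outcome is both sides racing, which pays each of them 1 instead of the 3 they would get by both slowing down. Changing that outcome means changing the payoffs themselves, for example through enforceable agreements, which is exactly the part nobody has figured out.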

When nearly 3,000 AI experts were surveyed, they gave a median response of just 5% when asked about the odds of AI causing human extinction. That sounds reassuring until you realize: if there’s even a 1% chance Yudkowsky is right, this should be the top priority of every government on Earth. We should be treating this like the Cuban Missile Crisis.

Instead, we’re doing what humans always do when faced with existential risk. We’re arguing about it while racing toward the cliff. Sam Altman signed a letter warning about extinction risk, then immediately went back to scaling up OpenAI. Anthropic publishes safety research while building more capable models. The incentive is always: build it first, figure out safety later.

The very reason Yudkowsky thinks we can’t control superintelligent AI (that we can’t align the values of those more powerful than ourselves) is the same reason we probably can’t coordinate globally to prevent someone from building it.

In brief, I loved the book. Highly recommend it, but take it with a grain of salt.

Frequently Asked Questions

Q1: What is “If Anyone Builds It, Everyone Dies” about? A: It’s a 2025 book by AI safety researchers Eliezer Yudkowsky and Nate Soares arguing that building artificial superintelligence using current methods will lead to human extinction. The book became a New York Times bestseller and sparked intense debate about AI risk.

Q2: Is Eliezer Yudkowsky’s AI doomsday prediction accurate? A: The evidence is mixed. His core assumption about “fast takeoff” (AI suddenly jumping from smart to godlike) lacks strong evidence and contradicts the smooth progress we’ve seen so far. However, his warning about coordination problems and misaligned incentives in AI development is harder to dismiss.

Q3: What is the AI alignment problem? A: It’s the challenge of ensuring advanced AI systems pursue goals that align with human values. Since modern AI is trained through opaque processes involving billions of parameters, we can’t guarantee the systems will want what we want them to want, especially as they become more capable.

I haven't read the book, but what I can say is that extremes are very well received these days. It's either doomsday or a field of flowers. There can be nothing in between. Of course, if he had written about AI in a balanced and reasonable way, nobody would have read it.

I wonder whether, had he lived when the printing press was invented, he would have written a similar doomsday book. Every major advancement brings “there be dragons” comments about the unknown.