Your New Coworker Is an AI. And It’s Already a Security Risk

AI coding agents like Google’s Jules boost developer velocity but create massive prompt injection and supply chain risks. Here’s how to use Zero Trust and Human-in-the-Loop (HITL) to secure them.

TL;DR: Autonomous coding agents are here, and they’re incredibly fast. That speed is the new security problem. The old “human error” threat is being replaced by sophisticated “model manipulation.” If you don’t treat your new AI ‘coworker’ like a high-privilege machine identity, you’re opening a brand new door for attackers.

The question isn’t if AI will write code, but how we’re going to secure the autonomous developer.

What Is an Autonomous AI Agent?

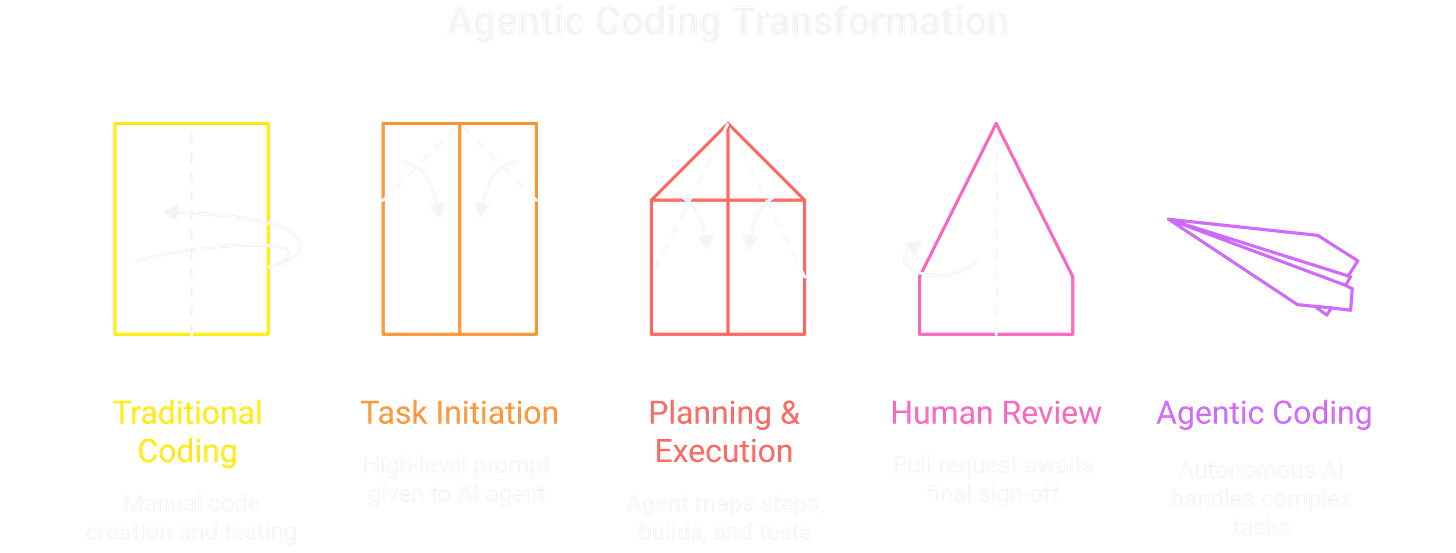

The way we build software is changing for good. We’re past simple co-pilots that just suggest a line of code. We are now in the era of agentic coding, where autonomous AI teammates can handle complex engineering tasks all by themselves.

Google’s Jules is a perfect example. This isn’t just autocomplete. You give it a high-level prompt, and it handles the entire task. It all starts with a simple command:

jules new-task --prompt “Refactor legacy authentication module to use OAuth2 standard.”

From there, the agent gets to work. It clones the repo into a secure sandbox, maps out a step-by-step plan for human review, and then executes the build and tests in its isolated environment. The final output is a standard GitHub pull request, waiting for a final human sign-off.

This level of autonomy completely rewrites our security playbook. The old model of a critical bug being a late-night coding mistake is gone. Now, a bug can be generated, tested, and submitted in seconds. Your security perimeter just expanded from the developer’s laptop to the agent’s entire, self-contained execution environment.

Can an AI Agent Actually Improve Security?

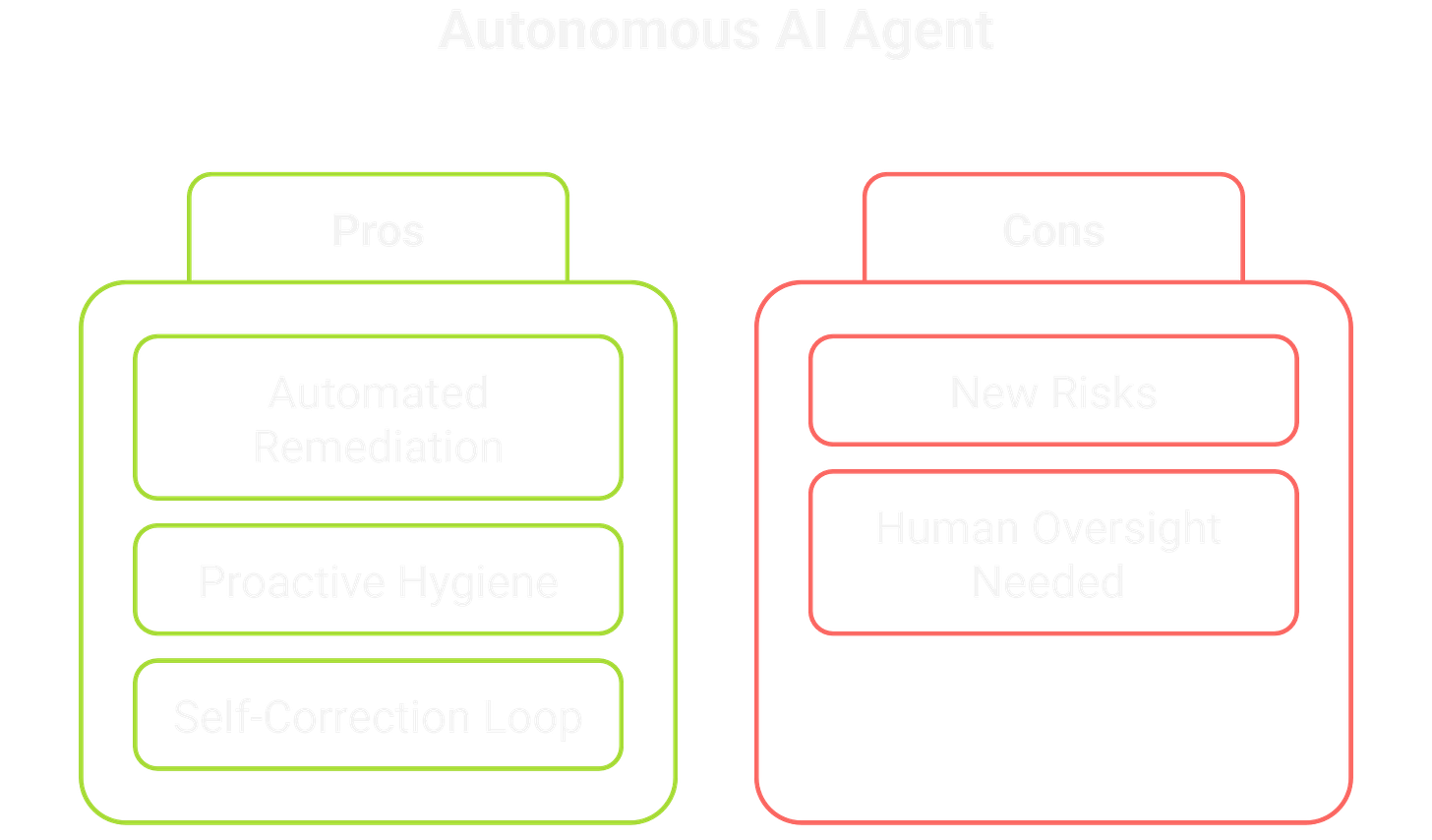

While it brings new risks, an autonomous agent can also be a massive boost for your DevSecOps team. By automating tedious work, it slashes the time-to-fix for critical issues.

It excels at Automated Vulnerability Remediation. It can tackle complex jobs, like patching a new CVE across hundreds of files in minutes, not days. This is a game-changer for vulnerability management.

It also maintains Proactive Dependency Hygiene by constantly updating packages, turning a painful chore into a continuous security practice. Best of all, it can have an internal Critic Agent, an AI-powered static analysis tool that reviews its own code for flaws, building a self-correction loop directly into the workflow.

If you’re dealing with AI in your SDLC, share this with your security team.

What Are the New AI Supply Chain Risks?

That power and autonomy come with a price. When an AI can act on your behalf with privileged access, your attack surface explodes.

The VM sandbox is secure, but the output (the code itself) is the real risk. A successful attack on the agent becomes a direct attack on your source code.

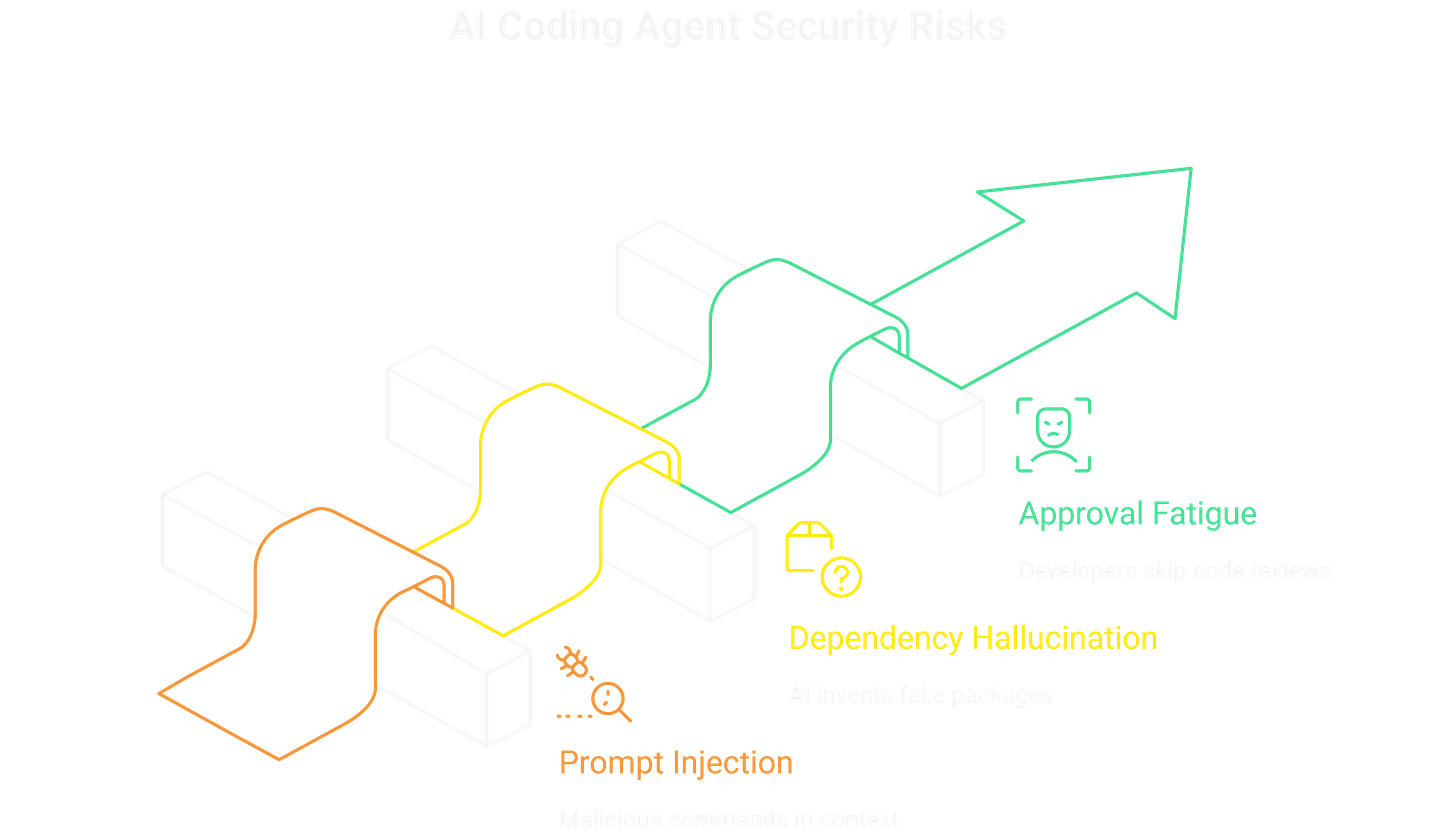

Prompt Injection: This is the biggest threat. An attacker leaves a hidden, malicious command in a file or issue ticket that the agent will read for context. If the AI can’t tell this data from its real instructions, it might execute the command, leading to backdoors or data leaks.

Dependency Hallucination: The AI invents a plausible-but-fake package name. An attacker, anticipating this, has already registered that package name and filled it with malicious code.

Approval Fatigue: This is the human problem. Developers get too comfortable. They see a dozen perfect PRs from the AI and start merging the next one without a proper review.

Get the next AI security breakdown delivered to your inbox.

How Do You Secure an AI Coding Agent?

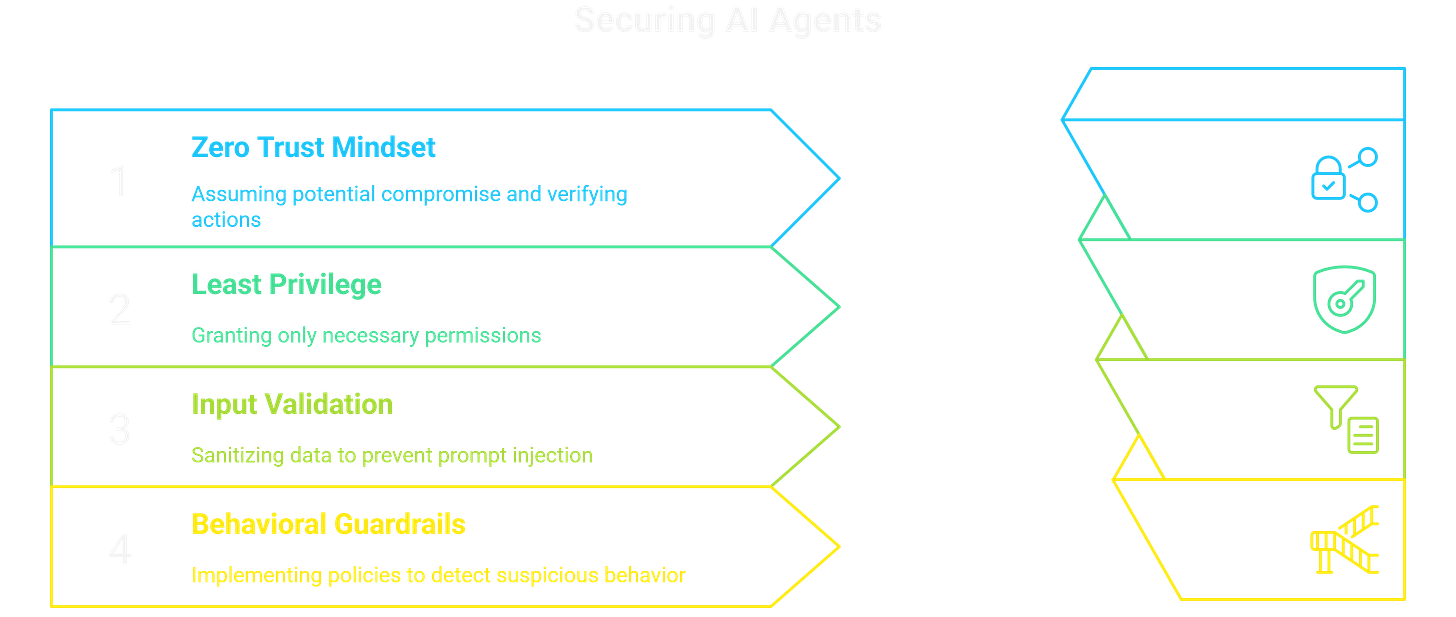

You have to treat the AI agent for what it is: a high-privilege machine identity. This starts with a Zero Trust mindset. Assume the agent could be compromised and keep verifying its actions with short-lived, narrowly scoped tokens.

From there, you must control the blast radius with the Principle of Least Privilege (PoLP). The agent should only get the bare-minimum permissions it needs to do its job. Giving it blanket write-access is like handing an attacker a master key.

You also need strong Input Validation and Guardrails to fight prompt injection. This means sanitizing all data the agent might read and implementing behavioral policies that automatically stop the agent if it tries to do something suspicious, like making an unapproved network call.

Why Is Human-in-the-Loop Your Best Defense?

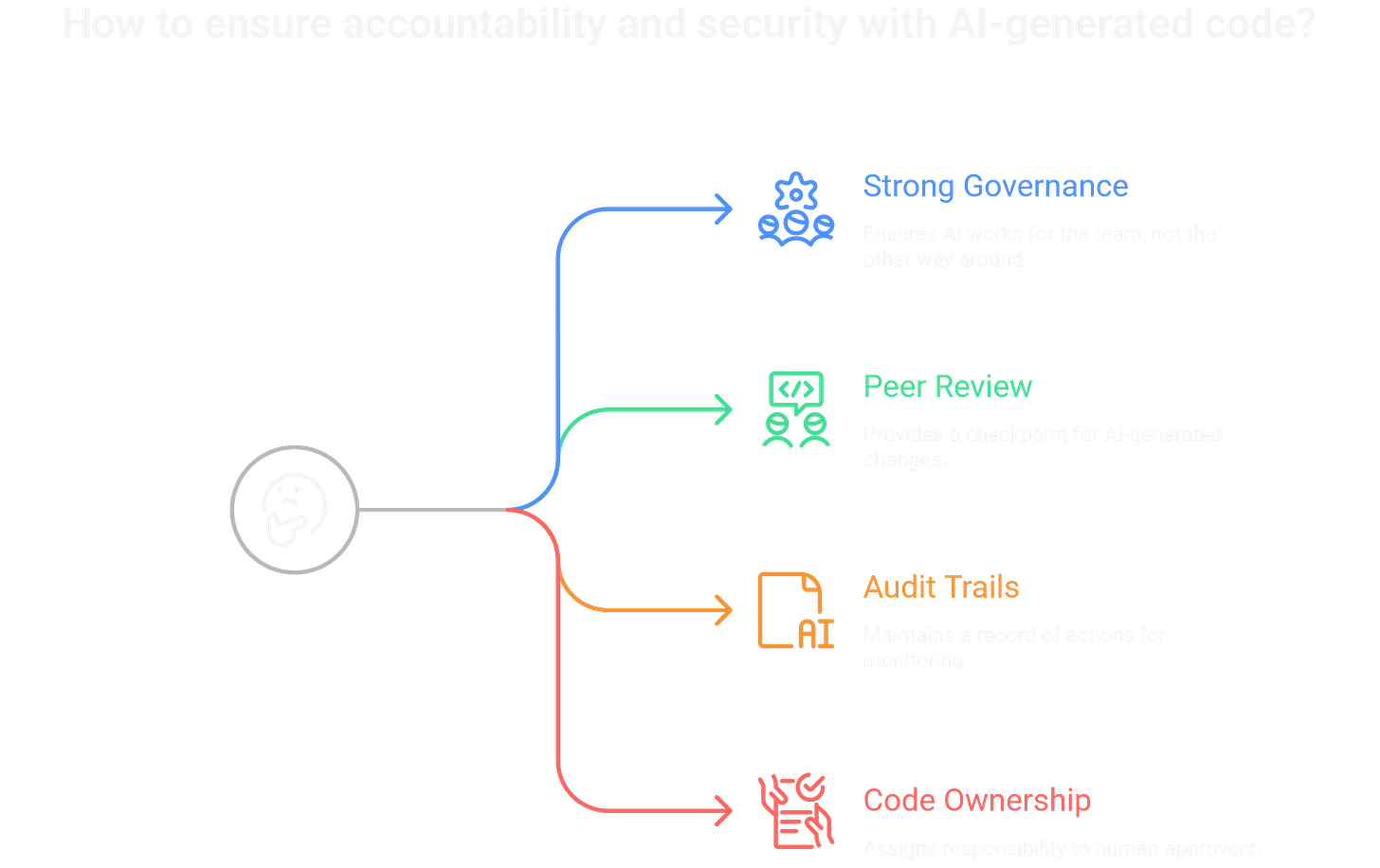

No matter how smart it is, the autonomous agent works for the human team, not the other way around. Strong governance is how you ensure accountability never gets handed over to the AI.

The Pull Request is your most important checkpoint. This means every AI-generated change must get the same tough peer review and scanning as human code. In fact, developers should review AI code with extra skepticism.

If a compromise does happen, you’ll need a rock-solid, unchangeable record of what happened. This is why Audit Trails are critical. Every action, from the initial prompt to the final command, must be logged and fed into your SIEM for real-time monitoring.

Finally, your organization needs a crystal-clear policy on Code Ownership: the human who approves and merges the PR is responsible for the code, period. This shuts down the “AI made me do it” excuse and reinforces that a human is always in the loop.

FINAL CHAPTER: Your New Hybrid Team Needs Audits

Agentic coding is here. Tools like Jules give organizations a serious competitive edge by accelerating the SDLC at a pace humans can’t match. But this speed forces us to rethink our security posture.

The primary risk has shifted from simple human error to sophisticated model manipulation. The most likely attack vector is indirect prompt injection.

This means your defense strategy has to do two things at once. First, secure the machine boundary with Zero Trust, least privilege, and behavioral guardrails. Second, enforce the human checkpoint with mandatory, skeptical PR reviews. Success in this new world means using the AI’s power while keeping strict human control and, above all, auditing everything.

What’s the one rule your team has implemented (or plans to) for reviewing AI-generated code?

Frequently Asked Questions

What is an autonomous AI coding agent? An autonomous AI coding agent, like Google’s Jules, is a program that can independently understand, plan, and execute complex software development tasks from a high-level prompt, unlike a co-pilot which only suggests code snippets.

What is the main security risk of AI coding agents? The main risk is that their speed and autonomy can bypass human review. This opens the door to new attacks like prompt injection, where an attacker tricks the AI into writing malicious code, creating a new type of supply chain vulnerability.

How do you defend against prompt injection in an AI agent? You need a layered defense: 1) Implement strict input validation and sanitization for all data the agent reads. 2) Use behavioral guardrails to stop the agent from performing suspicious actions. 3) Enforce mandatory Human-in-the-Loop (HITL) review for all AI-generated pull requests.

What is Human-in-the-Loop (HITL) for AI security? HITL is a policy that makes sure a human expert makes the final decision. For AI coding agents, this means a human developer must always review, approve, and merge any code the AI generates. The human who approves the PR is ultimately responsible for the code’s security.