Bug Bounty Hunting for GenAI

ToxSec | How to deal with GenAI in bug bounty programs.

0x00 Why GenAI Matters for Bug Bounty

Generative AI is showing up in products faster than security teams can adjust, and bug bounty hunters are already finding vulnerabilities. Banking apps deploy “assistants,” support desks roll out chatbots, and SaaS platforms add copilots that can query sensitive data. Every one of these deployments expands the attack surface.

For bug bounty hunters, the relevance is direct. GenAI features often combine a large language model with custom prompts, business logic, and data integrations. That stack can fail in ways traditional web apps don’t. If you can influence the model to disclose its hidden instructions, exfiltrate private data, or ignore authorization checks, you’ve demonstrated a real security issue.

Bounty programs are beginning to recognize this. Some list prompt injection and data leakage as valid findings; others fold GenAI into their general application logic scope. Either way, the message is clear: hunters who learn how to test these systems can find impactful vulnerabilities today.

If you want to practice prompt injection, check out the Gandalf CTF hosted by Lakera.

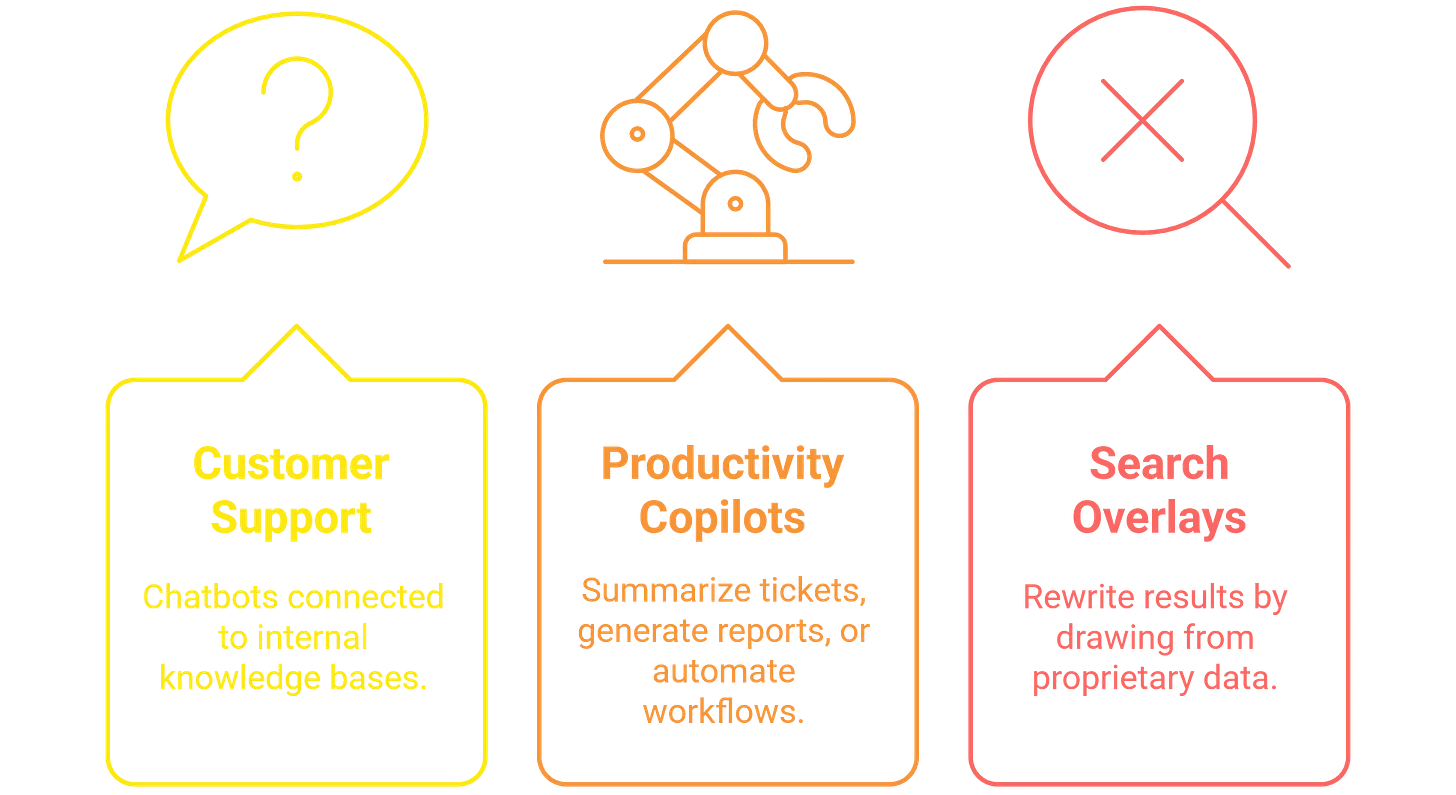

0x01 GenAI in the Wild

Where do these opportunities appear? Almost anywhere a company wants to showcase “AI-powered” features.

Most of these systems rely on third-party model providers such as OpenAI, Anthropic, Google, or AWS. The risk usually comes from how the enterprise integrates those models: the prompts they construct, the guardrails they implement, and the data they feed into context windows.

From a hacker’s perspective, that integration layer is the attack surface. The model may be robust, but the glue code around it is often rushed, untested, and exposed directly to end users. Testing those seams is where meaningful bugs are found.

Sidebar: Where Bounty Programs List GenAI Today

If you’re wondering “does this even pay?” the answer is yes — but only if you know where to look. A growing number of programs explicitly call out GenAI endpoints in their scope. You’ll see it phrased like:

“Prompt injection and data exfiltration against our chatbot are in scope.”

“Demonstrated model leaks of internal prompts or sensitive data are rewarded.”

“AI-assisted features (e.g., support copilot) are eligible for vulnerability submissions.”

A few patterns stand out. Newer programs lean into AI security to attract hunters; mature programs treat GenAI as an extension of application logic and reward demonstrated impact over novelty; and private invites sometimes include GenAI in scope even when the public page doesn’t. The takeaway: don’t assume “it’s just a chatbot” means out of scope. Read the policy carefully, and if you can tie your finding to business risk (data leak, privilege escalation, compliance impact), it’s usually fair game.

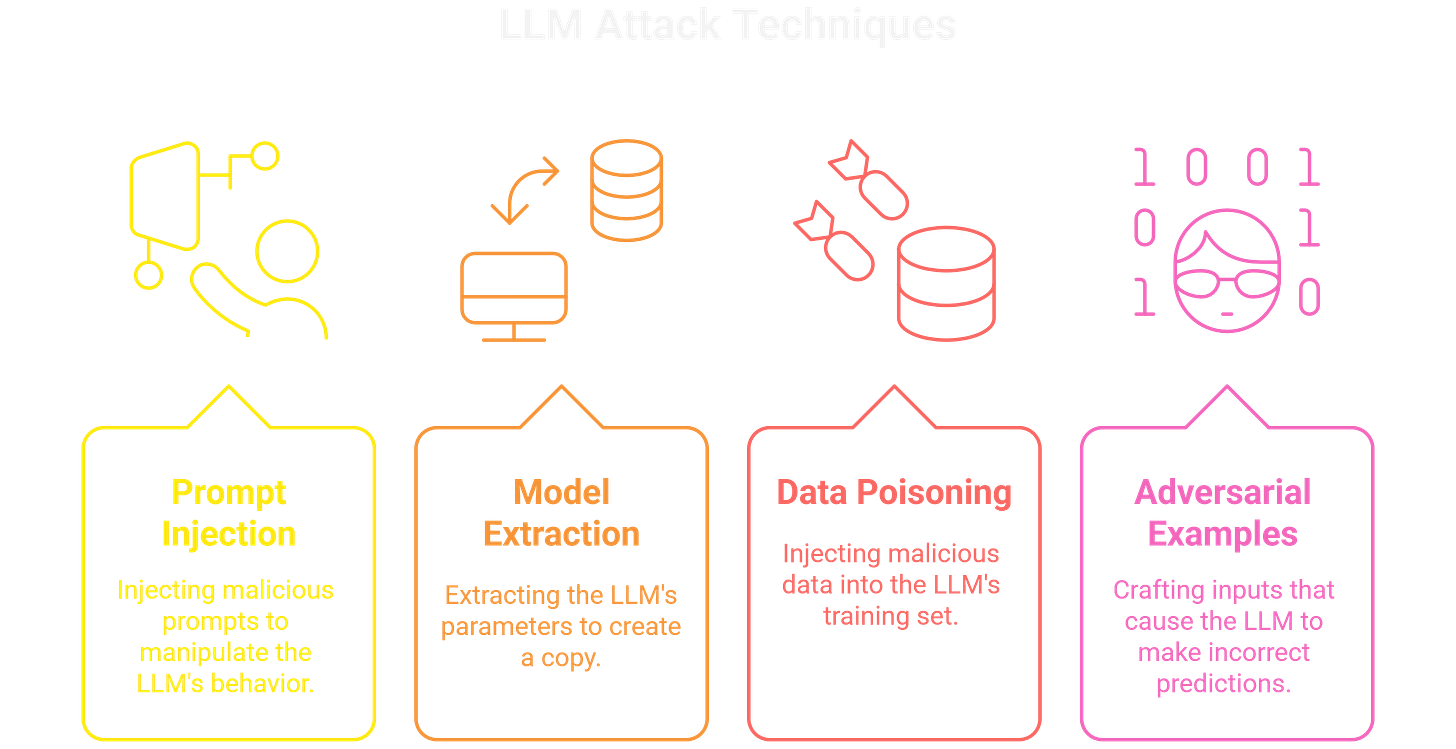

0x02 GenAI Attack Surfaces in Bug Bounty Programs

A few categories come up repeatedly when testing them in bounty programs:

Prompt Injection

The most recognizable attack surface. By crafting specific inputs, you can influence the model to ignore its instructions, reveal hidden prompts, or execute unintended actions. Indirect injection, where a model reads untrusted content (emails, docs, URLs), often leads to higher-impact leaks than direct “jailbreaks.”Sensitive Data Exposure

Models are frequently fed with proprietary documents, customer information, or system prompts. Weak filtering means user queries can pull that data back out. A common finding is retrieving hidden business logic or credentials embedded in the context.Authorization Bypass

When an AI layer mediates access to existing systems, guardrails can be weaker than traditional auth checks. For example, asking the model for a restricted report might bypass the API authorization the underlying system enforces.Third-Party Integrations

Retrieval-augmented generation (RAG) systems, plugins, and external APIs expand the attack surface. Many are bolted on quickly, with little thought to input sanitization or output handling. These integrations often become the entry point for escalation.

The patterns are familiar to anyone with web testing experience: trust boundaries get blurred, validation is inconsistent, and untrusted data flows deeper than expected. What’s new is the interface; you’re sending crafted instructions instead of SQL or shell payloads, but the underlying security principles remain the same.

0x03 MITRE ATLAS for GenAI Security

MITRE ATLAS is a knowledge base of adversarial tactics and techniques used against AI systems. It's based on the MITRE ATT&CK framework and provides a structured way to understand and analyze AI security threats. ATLAS can help bug bounty hunters:

Identify potential attack vectors: ATLAS provides a comprehensive list of techniques that attackers can use to compromise LLMs.

Understand the impact of attacks: ATLAS describes the potential consequences of successful attacks, such as data breaches, denial of service, or reputational damage.

Develop effective testing strategies: ATLAS can help you design targeted tests to identify vulnerabilities in LLM applications.

Sidebar: Quick Primer — What Counts as GenAI?

The term Generative AI covers a wide range of systems, but not every “AI” feature in scope is generative. For bounty hunting, it usually means:

Large Language Models (LLMs): Chat interfaces, copilots, summarizers, or Q&A systems.

Multimodal Models: Image-to-text, text-to-image, or mixed systems (think document analyzers or chat-with-your-PDF features).

RAG Architectures: Retrieval-Augmented Generation pipelines that feed proprietary data into model prompts.

Not every ML endpoint is GenAI. A fraud detection classifier or a recommendation engine is usually outside scope. But if the feature takes natural-language input and produces flexible, human-readable output then it’s a candidate for GenAI testing.

0x04 Turning GenAI Findings into Valid Bug Bounty Submissions

Finding a GenAI quirk is easy. Turning it into a valid bounty submission takes more discipline. Programs care about impact, not novelty.

Frame the issue in business terms. Instead of “the model ignored its system prompt,” show what that enabled: disclosure of sensitive data, bypass of a control, or execution of an unintended action.

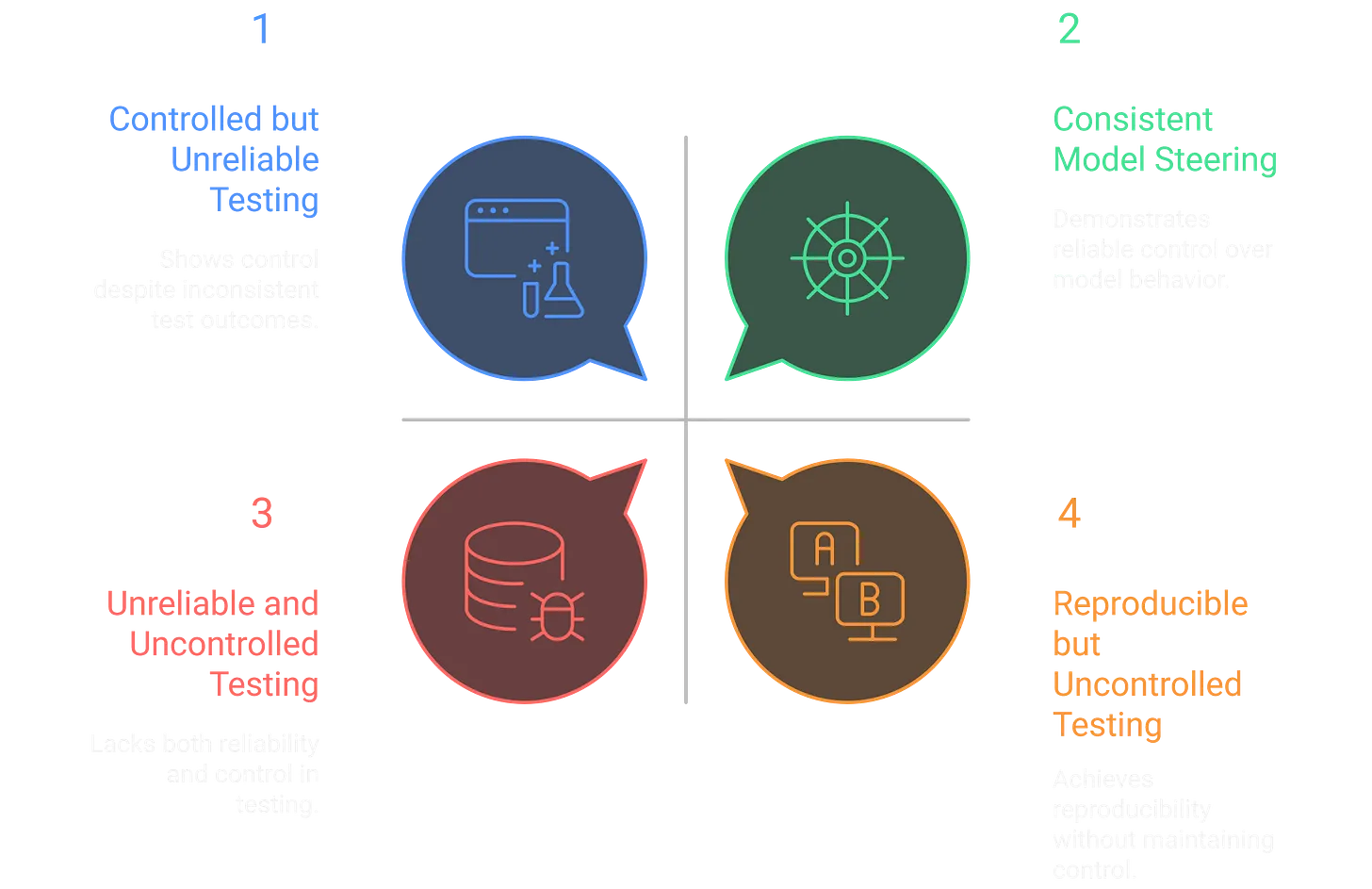

Reproducibility matters. AI systems are probabilistic, but bounty reviewers expect consistent results. Document exact prompts, variations that still work, and screenshots/logs that confirm the outcome.

Stay within scope. Many policies exclude generic jailbreaks unless they lead to data leakage or privilege escalation.

The best GenAI submissions resemble strong web app reports: clear steps, demonstrated risk, and direct connection to something the business values (data confidentiality, authorization, brand trust). Programs are still calibrating reward structures, but the early signals are consistent, while practical impact gets rewarded, clever tricks without risk do not.

0x05 Tools for Testing GenAI in Bug Bounty Programs

Testing GenAI systems doesn’t require exotic setups, but a few tools make the process smoother:

Burp Suite

Still the workhorse. Proxy traffic to see how prompts are structured, what data is passed to the model, and how responses are handled. Extensions like Param Miner can help identify hidden parameters influencing context.ffuf and Other Fuzzers

Useful for probing undocumented endpoints or parameters in GenAI-backed APIs. Fuzzing can uncover ways to inject prompts through query strings, headers, or file uploads.curl and Scripting

Helpful for controlled testing. Sending raw requests with custom headers or payloads can surface differences in how the backend sanitizes or formats inputs before handing them to the model.Prompt Libraries

Collections of known jailbreak and indirect injection payloads provide a baseline, but they’re only a starting point. Tailor them to the application’s domain and context.Custom Payload Iteration

Often the most valuable tool is a simple notebook or script where you track variations that shift the model’s behavior. Success comes from careful iteration and observation more than from automation.

The workflow looks familiar: intercept traffic, map the attack surface, and craft inputs to probe for weak spots. The difference is the payload language. Instead of SQL or shell, you’re shaping instructions to bypass filters or extract hidden context.

0x06 Lessons from the Gandalf CTF: Prompt Injection Tactic

The Gandalf CTF is a useful entry point because it strips away noise and forces you to focus on the mechanics of prompt injection. Each level builds intuition about how models interpret instructions and where filters break down.

A few lessons translate directly to bounty work:

Filters are brittle. Gandalf’s guardrails look strong until you phrase the request differently. Real-world apps are the same — small prompt variations can bypass word filters or policy blocks.

Context is leverage. Gandalf hides its answer in a structured way. In production, sensitive data may be hidden in system prompts or context windows. Once you learn to probe for it, leakage paths become clear.

Iteration wins. The challenge isn’t solved by a single clever payload but by testing many small variations. This mindset mirrors web testing: the exploit is often just one payload away.

While CTF puzzles are gamified, the workflow they teach is practical. Document your payloads, note which ones succeed, and think about why. That same process applied to a live bounty target is what separates “fun trick” from “valid submission.”

For anyone new to GenAI security, Gandalf offers a safe lab to practice. For experienced hunters, it’s a reminder that adversarial prompting is less about creativity in isolation and more about structured exploration.

0x07 Post-Exploit Tips for GenAI Bug Bounty Reports

Once you’ve demonstrated a GenAI vulnerability, the hard part is often getting it taken seriously. Programs are still learning how to triage these reports, so clarity matters.

Think of this stage like a standard bounty workflow: the exploit is only half the job. The other half is writing it up so reviewers can’t miss the business risk. Done right, GenAI findings sit alongside SQLi or IDORs as valid, actionable vulnerabilities — not curiosities.

Sidebar: How Programs Triage GenAI Reports

If your report involves prompt injection or data leakage, here’s what reviewers look for:

Consistency. Can they reproduce your steps, or does it only work once in twenty tries?

Clarity. Is the exploit framed as a security issue, or does it read like a jailbreak challenge?

Business Impact. Does it expose sensitive information, bypass a guardrail, or let a user perform actions they shouldn’t?

Weak reports often stop at “I made the chatbot say something funny.” Strong ones connect directly to risk: “I extracted credentials from the system prompt that grant access to a backend service.” The difference determines whether the finding gets rewarded or dismissed.

0x08 Debrief – Why GenAI Security Matters for Bug Bounty Hunters

GenAI is part of the production stack across industries. That makes it an attack surface worth treating with the same rigor as any other web application component.

For bug bounty hunters, the opportunity is in the details: testing how prompts are constructed, how context windows are populated, and how guardrails hold up under pressure. The mechanics aren’t entirely new, but the presentation layer is — and programs are starting to pay for findings that show real impact.

If you want a safe place to sharpen these skills, revisit the Gandalf CTF write-up. The same iteration and documentation habits that solve puzzles translate directly into effective bounty reports. From there, apply the process to live targets, frame your findings in terms programs understand, and you’ll be ahead of most hunters in this space.

GenAI is going to be everywhere. Those who learn how to test it responsibly will find themselves with a fresh source of valid, high-impact vulnerabilities.

Not done reading about AI? Check out the ToxSec GenAI Crash Course!