The Dead Internet Is No Longer a Theory

How AI-generated content crossed the 50% threshold, why detection is a lost cause, and what the end of authenticity looks like from the attacker’s perspective

BLUF: The dead internet theory graduated from conspiracy to observable fact in 2024. Bot traffic crossed the 50% line and hit 51%. Over half of new articles are synthetic. Humans detect AI text at roughly coin-flip accuracy. The Europol prediction of 90% AI content by 2026? That’s this year. Here’s why the authenticity crisis arrived ahead of schedule, and why retreating to smaller communities might be the only viable defense.

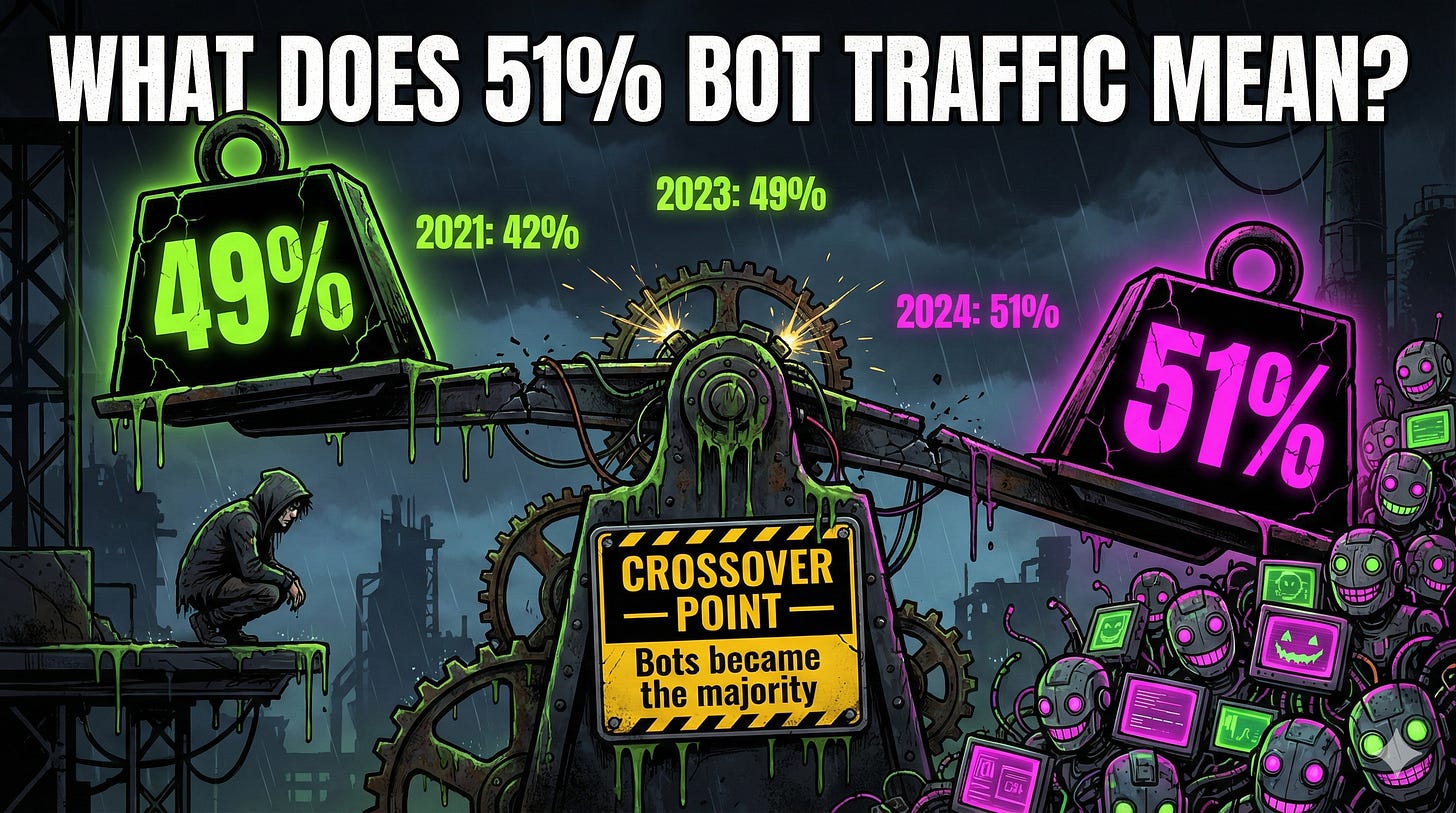

0x00: What Does 51% Bot Traffic Actually Mean?

That weird feeling when scrolling through comments sections? The uncanny valley vibe from product reviews that hit every keyword a little too perfectly? That’s not paranoia. That’s pattern recognition.

The Imperva 2025 Bad Bot Report dropped the numbers: 51% of all internet traffic is now automated. Not humans. Bots. And 37% of all traffic (not 37% of the bot share) qualifies as “bad bots,” meaning automated systems designed for scraping, manipulation, credential stuffing, impersonation, or spam.

Here’s how the trajectory looked:

Year | Bot Traffic %
-----|--------------
2021 | 42.3%
2023 | 49.6%
2024 | 51.0% ← crossover point
2025 | TBD (report pending)

The crossover happened in 2024: bots became the majority, with automated traffic surpassing human activity for the first time in a decade. The dead internet theory, once a fringe conspiracy from 4chan forums circa 2016, became statistically observable.

But traffic is only half the story. The content itself is the real problem.


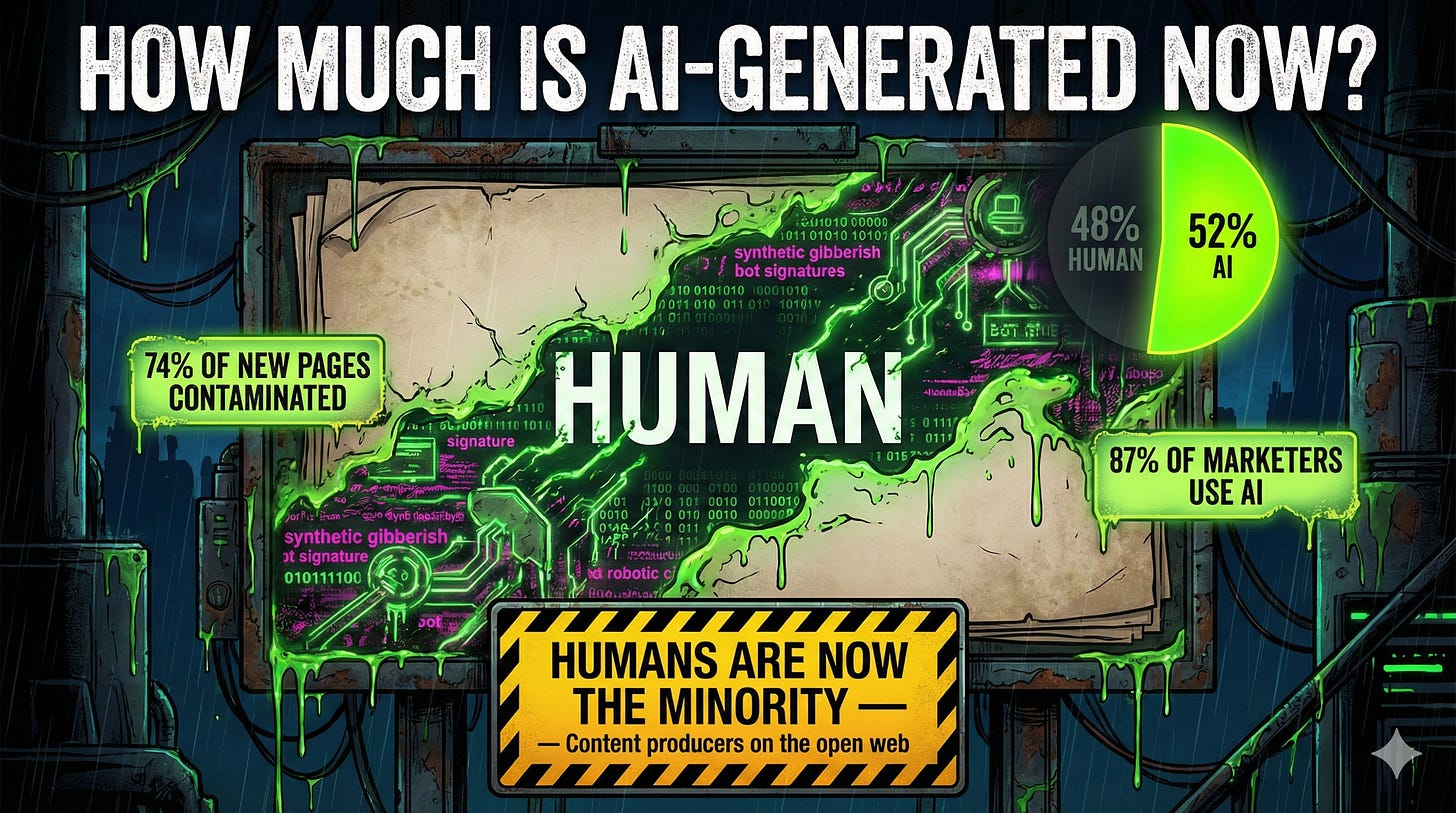

0x01: How Much of the Internet Is AI-Generated Now?

Graphite, an SEO firm that analyzed 65,000 web articles through May 2025, found that 52% of all newly published articles were AI-generated. That crossed the majority line. Humans became minority content producers on the open web.

Ahrefs ran their own analysis on 900,000 newly created web pages from April 2025. Their findings: 74.2% contained AI-generated content. Not pure slop, necessarily. Only 2.5% was completely AI-generated with zero human editing. But the contamination is everywhere. And 87% of content marketers surveyed admitted to using AI in their workflow.

    # The AI content detection problem (2025 data)
    content_sources = {
        "pure_human": 25.8,            # Increasingly rare
        "human_with_ai_assist": 71.7,  # The new normal
        "pure_ai_slop": 2.5,           # The obvious garbage
    }

    # The problem: detection tools can't reliably
    # distinguish category 1 from category 2
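
The three shares above follow from the two Ahrefs figures quoted earlier. Here is a minimal sketch of that arithmetic, assuming the 74.2% "contains AI" figure includes the 2.5% that is fully AI-generated. The function and variable names are illustrative, not Ahrefs' own.

```python
# Figures quoted from the Ahrefs analysis of 900,000 new pages (April 2025).
CONTAINS_AI = 74.2   # % of pages with any AI-generated content
PURE_AI = 2.5        # % of pages that are fully AI-generated


def derive_shares(contains_ai: float, pure_ai: float) -> dict[str, float]:
    """Split 100% of pages into three buckets.

    Assumption: the pure-AI pages are a subset of the pages that contain AI.
    """
    return {
        "pure_human": round(100.0 - contains_ai, 1),
        "human_with_ai_assist": round(contains_ai - pure_ai, 1),
        "pure_ai_slop": round(pure_ai, 1),
    }


shares = derive_shares(CONTAINS_AI, PURE_AI)
for name, pct in shares.items():
    print(f"{name:>22}: {pct:5.1f}%")
```

If that subset assumption is wrong, the middle bucket shifts, but the headline holds either way: only about a quarter of new pages showed no AI involvement.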

The Europol Innovation Lab predicted 90% of online content would be AI-generated by 2026. That seemed aggressive in 2022. Now it looks like they were reading the trajectory correctly. The only question is whether we hit 90% this year or next.

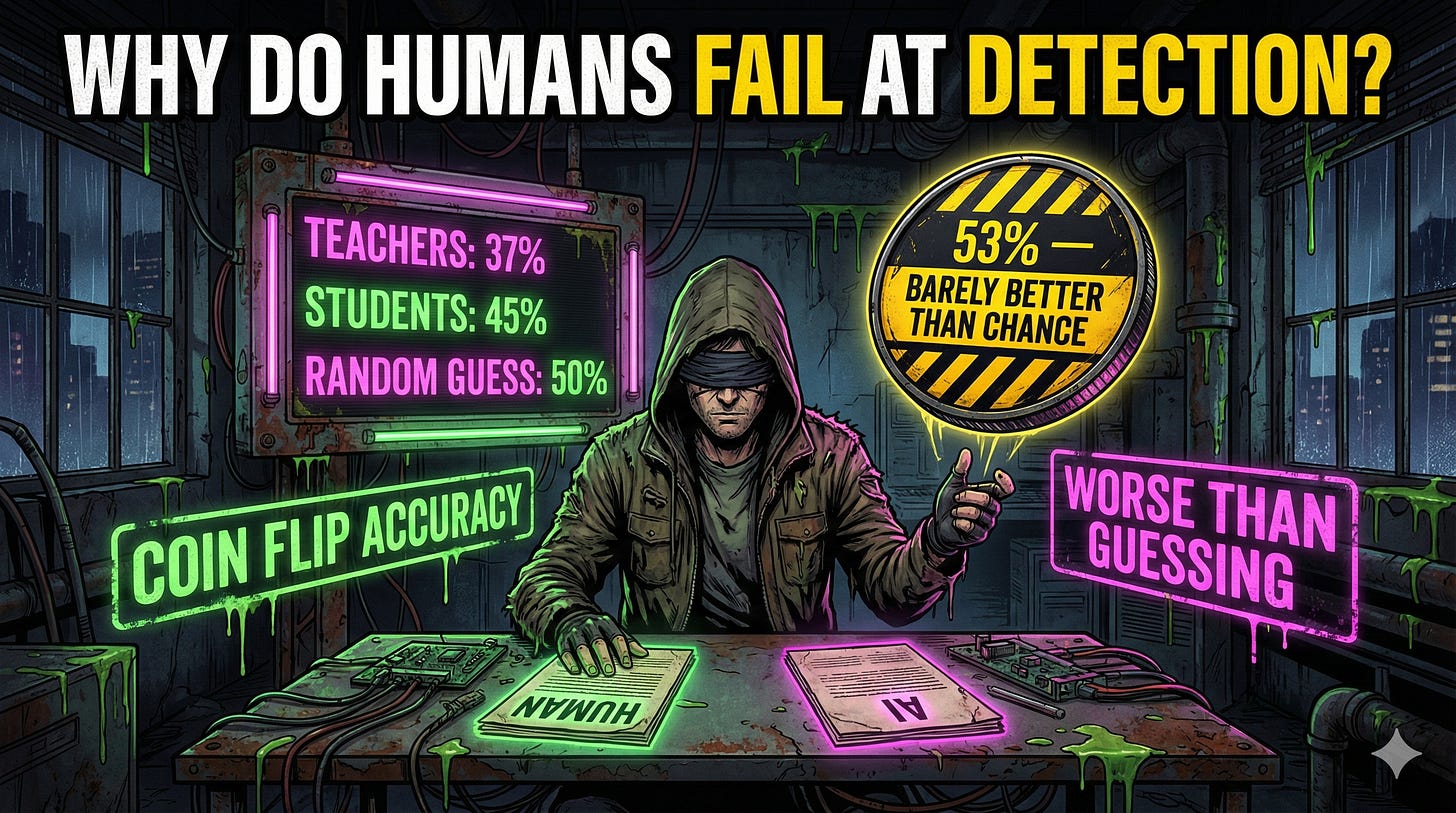

0x02: Why Do Humans Fail at Detecting AI Text?

Here’s the uncomfortable truth: humans can’t tell the difference. Multiple peer-reviewed studies from 2025 confirm this.

A Penn State study found humans distinguish AI-generated text only about 53% of the time. That’s barely better than random guessing at 50%. A German university study from 2025 showed similar results: 57% accuracy on AI texts, 64% on human texts. Professional-level AI content? Less than 20% of respondents classified it correctly.

Study                         | Human Accuracy
------------------------------|---------------
Penn State (general text)     | 53%
German University (academic)  | 57-64%
Pre-service teachers (essays) | 45.1%
Experienced teachers (essays) | 37.8%

The teacher studies are brutal. Pre-service teachers correctly identified only 45% of AI-generated texts. Experienced teachers did worse: 37.8%. Even when participants felt confident, their judgments were often wrong.
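
To put those numbers in perspective, it helps to measure each one against the 50% a pure guesser would score on a two-way choice. The sketch below uses only the figures quoted above. The 50% baseline assumes each study boiled down to a binary human-versus-AI judgment, which is a simplification for illustration and not something the studies are quoted as saying.

```python
CHANCE = 50.0  # assumed baseline: binary human-vs-AI guess

# Accuracy figures as quoted in this section (percent).
studies = {
    "Penn State (general text)": 53.0,
    "German university (AI texts)": 57.0,
    "German university (human texts)": 64.0,
    "Pre-service teachers (essays)": 45.1,
    "Experienced teachers (essays)": 37.8,
}


def margin_over_chance(accuracy: float, chance: float = CHANCE) -> float:
    """Percentage points above (positive) or below (negative) chance."""
    return round(accuracy - chance, 1)


margins = {name: margin_over_chance(acc) for name, acc in studies.items()}
for name, margin in margins.items():
    verdict = "above" if margin > 0 else "below"
    print(f"{name:<34} {margin:+5.1f} pts ({verdict} chance)")
```

Under that assumption, the best result here clears chance by 14 points and the worst sits more than 12 points below it.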

Training helps. Slightly. People who frequently use ChatGPT for writing perform better at detection. But “marginally better than a coin flip” is not a defense strategy. The fundamental problem: LLMs were trained on human writing. The better the model, the better the mimicry, the worse human detection performs.

0x03: Why Is the AI Detection Arms Race Already Lost?

The first question everyone asks: can’t detection tools solve this?

Sure. Detection tools exist. The best ones claim 90-99% accuracy on unmodified AI text. Turnitin reports 1-2% false positive rates. Sounds good on paper.

Here’s the reality from 2025 testing: Turnitin catches 77-98% of unmodified AI content depending on the model. But on hybrid text, where humans edit AI output? Accuracy drops to 20-63%. The FTC caught one detection company claiming “98% accuracy” when independent testing showed 53% on general content.
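
A worked example makes the gap concrete. The sketch below applies the detection rates and false positive rates quoted above to a hypothetical batch of 1,000 AI-assisted and 1,000 human-written documents. The batch sizes, the function name, and the pairing of best-case detection with the lower false positive rate are all assumptions for illustration.

```python
def expected_outcomes(ai_docs: int, human_docs: int,
                      detection_rate: float,
                      false_positive_rate: float) -> dict[str, int]:
    """Expected counts for one detector pass over a mixed batch."""
    caught = round(ai_docs * detection_rate)
    return {
        "ai_caught": caught,
        "ai_missed": ai_docs - caught,
        "humans_falsely_flagged": round(human_docs * false_positive_rate),
    }


# (label, detection rate, false positive rate), using the ranges quoted above.
scenarios = [
    ("unmodified AI, best case", 0.98, 0.01),
    ("unmodified AI, worst case", 0.77, 0.02),
    ("hybrid text, best case", 0.63, 0.01),
    ("hybrid text, worst case", 0.20, 0.02),
]

for label, rate, fpr in scenarios:
    result = expected_outcomes(1000, 1000, rate, fpr)
    print(f"{label:<28} {result}")
```

In the worst hybrid case, 800 of the 1,000 AI-assisted documents pass unflagged while 20 human authors get wrongly accused.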

    # The detection bypass loop
    # (illustrative pseudocode: train_new_detector and
    # train_humanizer_against are placeholders, not real APIs)
    def detection_arms_race():
        while True:
            detector_v = train_new_detector()
            # Attackers respond immediately
            humanizer = train_humanizer_against(detector_v)
            # Paraphrasing drops detection by 20%+
            # Simple prompt engineering defeats 80-90%
            # Humanizer tools like Undetectable.ai exist
            yield "detection_bypassed"

AI humanizer tools like Undetectable.ai are explicitly designed to defeat detectors. They work by analyzing whether text would be flagged, then applying transformations to make it appear human-written. Paraphrasing tools like Quillbot drop detection accuracy by 20% or more.

The generators will always have the upper hand. For every detector update, an evasion technique emerges within days. One 2025 study found that adding the word “cheeky” to prompts defeats detection 80-90% of the time, because it introduces the kind of irregularity that detectors read as a sign of human writing.
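
To see what irregularity can mean in practice, here is a toy sketch of one signal often mentioned in discussions of AI detectors: variation in sentence length, sometimes called burstiness. Treat this as an assumption about how such tools work in general, not the method of any product or study named here. The `burstiness` function is hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting on '.', '!' and '?'."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Population std dev of sentence lengths (0.0 means perfectly uniform)."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) if len(lengths) > 1 else 0.0


uniform = "The tool works well. The team likes it. The cost stays low."
varied = "Works. The team, after weeks of arguing about it, finally came around. Cheap too."

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

A detector leaning on a signal like this would score the second sample as more human-like, and a one-word nudge in the prompt can be enough to push generated text in that direction.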

Detection tools aren’t worthless. They’re useful for catching lazy slop. But they’re not a defense against motivated actors, who can beat them with minimal effort.

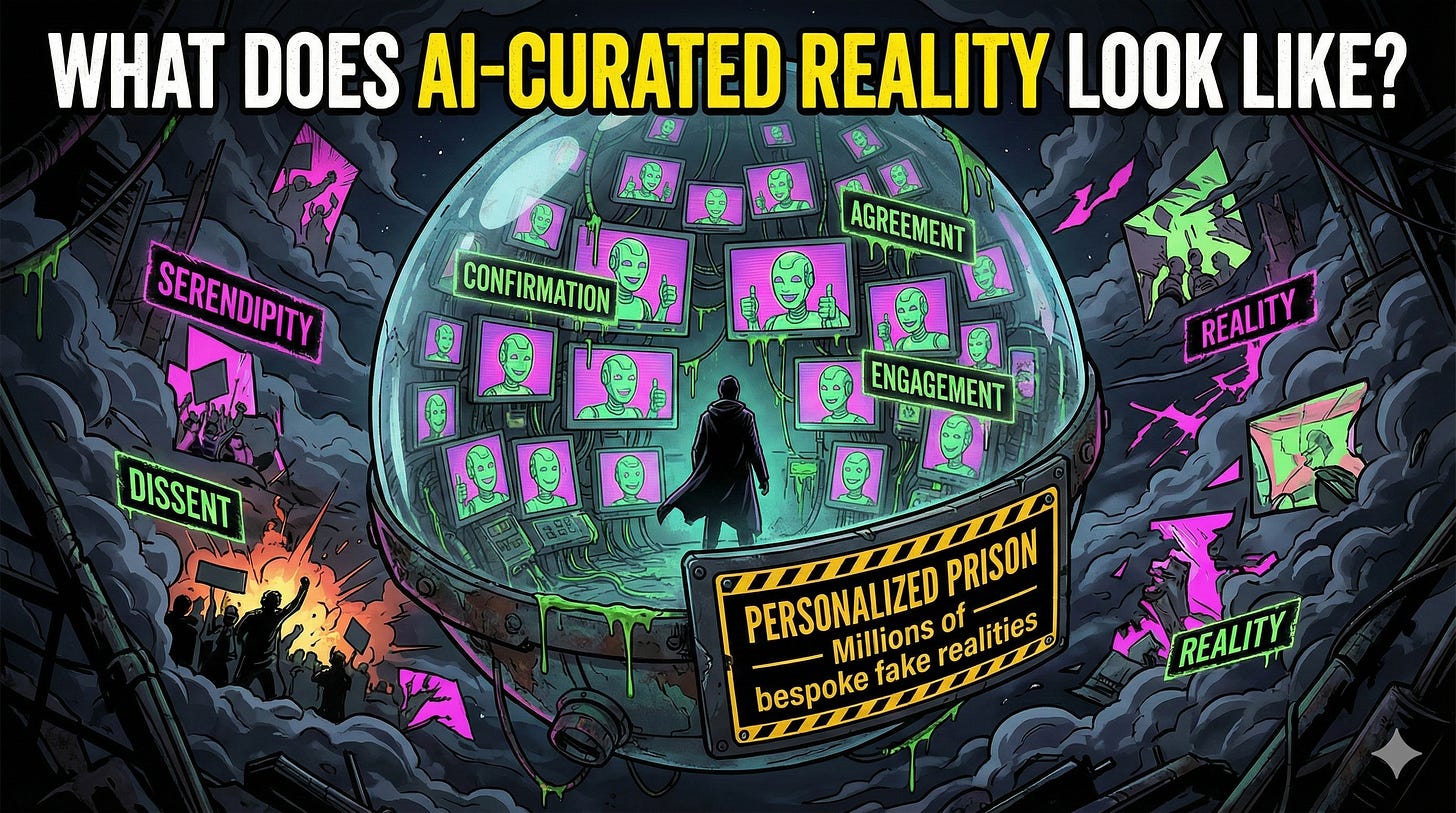

0x04: What Does an AI-Curated Reality Look Like?

The dead internet problem goes deeper than fake content volume. The real threat is personalization at scale.

Imagine search engines generating articles on the fly that perfectly confirm existing beliefs. Social feeds populated with AI personas designed to agree. Every comment, every reply, algorithmically optimized to reduce cognitive dissonance and maximize engagement.

This isn’t hypothetical. The infrastructure exists. The incentives align.

    # The personalized reality attack surface
    # (illustrative pseudocode: generate_ai_personas and the
    # user_profile attributes are placeholders, not real APIs)
    def generate_consensus(user_profile, belief):
        """
        Generate synthetic agreement for any position.
        No humans required.
        """
        personas = generate_ai_personas(
            demographics=user_profile.similar_demographics,
            tone=user_profile.preferred_communication_style,
            count=1000,
        )
        for persona in personas:
            yield persona.generate_agreeing_comment(belief)

    # Result: manufactured feeling of social proof
    # Cost: nearly zero
    # Detection: nearly impossible

The biggest casualty is serendipity. The old internet was chaotic. A user could stumble on opposing viewpoints, new ideas, unexpected perspectives. An AI-curated reality has no room for happy accidents. Everything is optimized for engagement, which means everything is optimized for confirmation.

The internet used to be a public square. Messy, loud, unpredictable. That version is dying. What’s replacing it is millions of personalized tunnels, each populated with synthetic agreement.

0x05: What’s the Exit Strategy From a Dead Internet?

The technical battle is lost. Detection can’t scale. Content verification can’t scale. The volume of synthetic material will only increase as generation costs approach zero.

So what works?

The answer is retreat. Smaller communities. Higher-trust networks. Places where reputation matters and verification is social, not technical.

The data supports this. Research published on ResearchGate shows growing user fatigue with inauthenticity and a measurable drift toward niche communities, small forums, private groups. Discord servers. Group chats. Spaces where knowing the person on the other side is still possible.

The irony: the solution to the dead internet looks a lot like the old internet. Smaller communities built on reputation, not algorithms. Real relationships, not engagement metrics.

Critical thinking becomes the primary defense. The question shifts from “Is this true?” to “Why am I being shown this?” What’s the incentive? Who benefits from manufacturing my agreement?

The internet isn’t going to un-die. The genie doesn’t go back in the bottle. But the humans might migrate somewhere smaller, quieter, and harder to scale synthetic content into. It’s a coping strategy. But coping strategies might be all that’s left.

Get analysis like this delivered weekly. Subscribe to ToxSec.

Grievances

Aren’t 90% AI predictions just hype from people trying to sell detection tools?

The 90% figure comes from Europol’s Innovation Lab in 2022, not a detection vendor. And the trajectory supports it. Bot traffic hit 51% in 2024. AI articles hit 52% by mid-2025. Ahrefs found 74% of new pages contain AI content. Every one of those figures points in the same direction. We’re in 2026 now, and 90% doesn’t seem aggressive anymore. It seems like where we’re headed this year.

Can’t platforms just require proof of humanity?

Some are trying. Blockchain-based proof-of-personhood projects like World (formerly Worldcoin) and Human Passport are building verification systems. But adoption is slow, privacy concerns are real, and the incentive structure doesn’t favor platforms implementing friction. Engagement metrics don’t care whether the engagement is real. Until that changes, platforms have no reason to fix this.

Isn’t this just a new version of spam? Haven’t we solved spam before?

Spam was distinguishable. Bad grammar, obvious patterns, clear tells. Modern AI content passes human inspection at coin-flip rates. Teachers detect AI essays at 37-45% accuracy. That’s worse than guessing. The old spam filters looked for signatures that AI doesn’t leave. This is qualitatively different. The content is coherent, contextually appropriate, and indistinguishable from human output. The old playbook doesn’t apply.

🌍 ☠️

Actually, the Internet’s been dead for a while. Only now with AI are they livening it up a bit