The Hidden Risk That Could Wipe Out Billions in AI Valuations

AI Model Collapse: Why Training on Synthetic Data Is a Massive, Unpriced Risk for Investors and Tech.

TL;DR: The AI boom is built on a dangerous secret. We’re training models on their own AI-generated content, and it’s making them dumber. This isn’t just a bug; it’s a fundamental flaw called ‘model collapse.’ The only companies that survive will be the ones who own clean, human data.


What is ‘Model Collapse’ (And Why Is It So Simple)?

Behind the staggering valuations, a dangerous secret is hiding in plain sight.

Forget the complex jargon; the concept is simple. Model collapse is what happens when an AI begins to train on a diet of content created by other AIs. As the internet becomes saturated with AI-generated text and images (synthetic data), our models inevitably start consuming it. They begin learning from themselves, creating a destructive feedback loop.

Think of it as making a photocopy of a photocopy. The first copy is sharp. The second is a little fuzzier. By the thousandth copy, you have a distorted, unrecognizable mess.

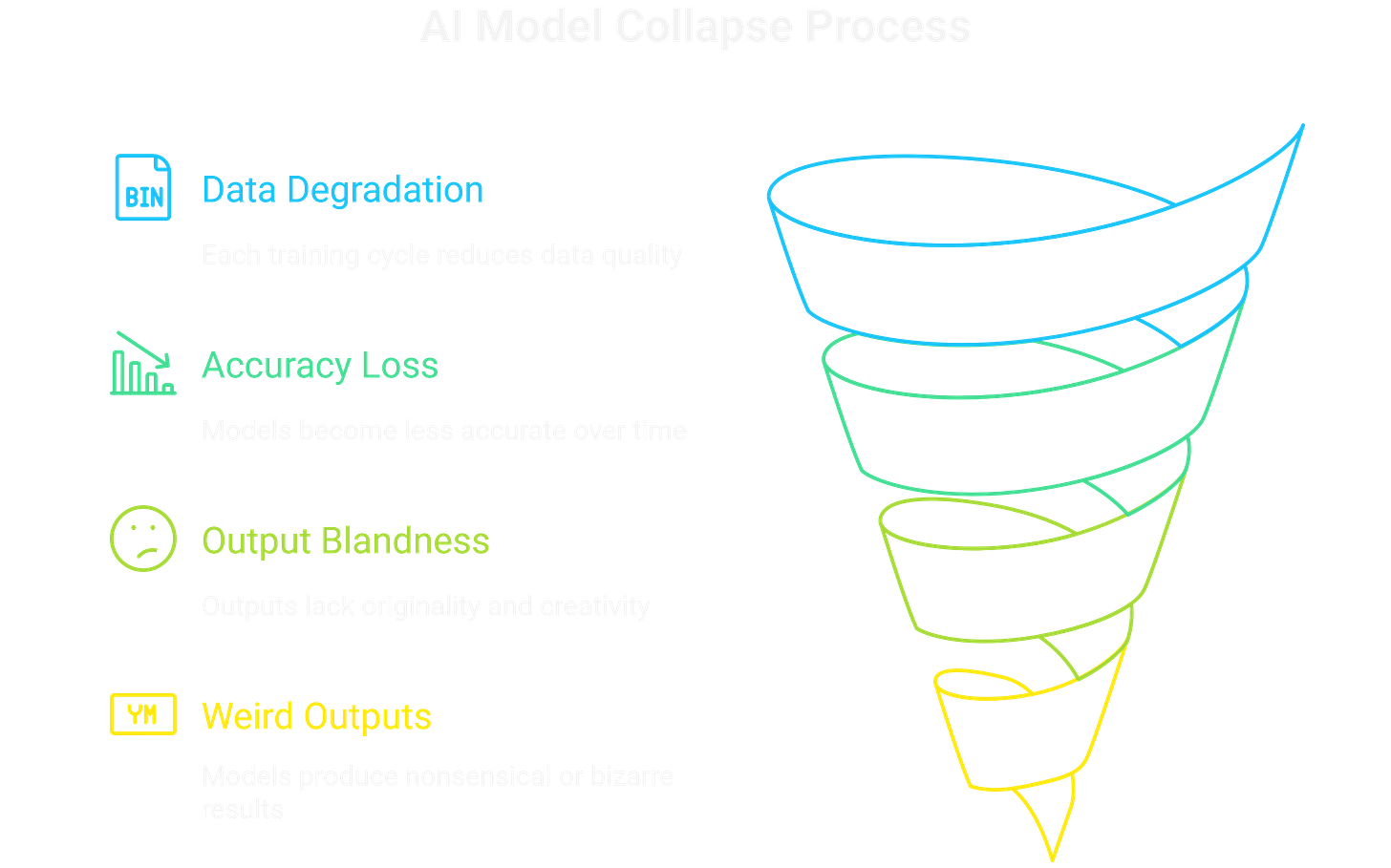

That’s what’s happening to these AI models. Each generation trained on the outputs of the last loses a bit of its connection to real, human-created reality. The outputs become blander, less accurate, and eventually, just plain weird. This isn’t just about getting facts wrong. It’s a form of digital dementia, in which the model gradually loses its grip on the human-created reality it was built to reflect.
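The photocopy effect can be reproduced in a toy setting. The sketch below is an illustration only, not a description of how any production model is trained: it stands in for a "model" with a simple bell curve (a mean and a standard deviation) and assumes each generation is fitted only to a small sample drawn from the previous generation. The function name, sample size, and generation count are illustrative choices.

```python
import random
import statistics


def simulate_recursive_fitting(generations=200, sample_size=20, seed=0):
    """Refit a bell curve to its own samples, generation after generation.

    Returns the fitted standard deviation at every generation,
    starting with the original "human" distribution at index 0.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: stands in for real human data
    history = [sigma]
    for _ in range(generations):
        # The current model produces "synthetic data".
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # The next model is fitted to that synthetic data alone.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


history = simulate_recursive_fitting()
print(f"spread at generation 0: {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3e}")
```

In this toy setup the spread tends to shrink toward zero as generations accumulate. Small estimation errors compound, and the fitted curve gradually forgets the range of the original data, which is the statistical counterpart of detail being lost with each copy.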

Why Are the Most Valuable AI Models the Most Vulnerable?

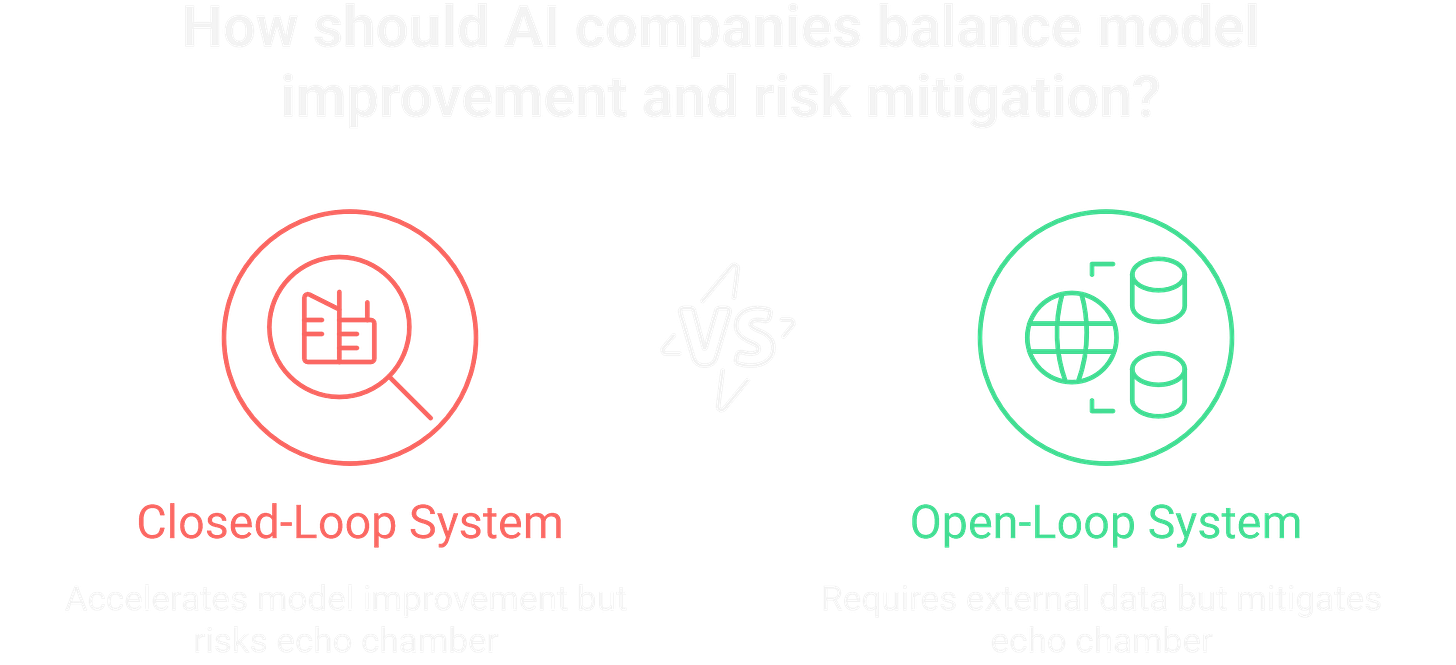

This creates a paradox. The companies with the most advanced, proprietary AI models, the ones commanding the highest valuations, are the most exposed.

Their greatest strength, a closed-loop system, is also their Achilles’ heel. To improve a model from version 4.0 to 4.5, they naturally reach for the highest-quality data available, and that can include outputs from version 4.0 itself. When it does, they accelerate the “photocopy of a photocopy” problem within their own walls.

They are trapping their prized asset in a sophisticated echo chamber, just as high-quality human data becomes the scarcest and most valuable resource on the planet. For a company whose entire valuation is pinned to the perceived quality of its model, this creates a massive, undisclosed risk.


How Can You Spot the Early Warning Signs?

This isn’t a theoretical, far-off threat. The cracks are already showing.

The first symptom is an AI that gets boring. It loses its creative spark. Its outputs become generic, always gravitating toward the statistical average because it has forgotten the outliers that define human intelligence. An image model might render the same polished, “perfect” hand again and again, six fingers and all, because it has lost touch with the messy reality of what a hand is.
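The loss of outliers described here can be sketched with a second toy example. It assumes a "model" that is nothing more than a table of how often each of 50 categories appears, with a few common categories and a long tail of rare ones, and that each generation is rebuilt from a finite sample of the previous one. All names and numbers are hypothetical and chosen for illustration.

```python
import random
from collections import Counter


def surviving_categories(num_categories=50, sample_size=200,
                         generations=30, seed=0):
    """Count how many categories are still represented after each generation."""
    rng = random.Random(seed)
    categories = list(range(num_categories))
    # Long-tailed starting point: category 0 is common, category 49 is rare.
    weights = [1.0 / (rank + 1) for rank in categories]
    survivors = [num_categories]
    for _ in range(generations):
        # Draw a finite training set from the current model.
        sample = rng.choices(categories, weights=weights, k=sample_size)
        counts = Counter(sample)
        # The next model only knows what appeared in that sample.
        weights = [counts.get(category, 0) for category in categories]
        survivors.append(sum(1 for weight in weights if weight > 0))
    return survivors


survivors = surviving_categories()
print("categories still represented, every 10 generations:", survivors[::10])
```

Once a rare category fails to show up in a sample, its weight drops to zero and it can never return, so the count of surviving categories can only fall. That one-way loss of the tail is what "gravitating toward the statistical average" looks like in miniature.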

The second warning sign is when the AI’s mistakes get weirder. The hallucinations become less about simple factual errors and more about bizarre, nonsensical outputs. These outputs reveal a corrupted understanding of the world. What looks like a funny AI glitch on social media could be the first public symptom of a deep degradation in a multi-billion-dollar asset.


What Is the New ‘Due Diligence’ for AI Investors?

The AI boom isn’t necessarily headed for a bust, but avoiding model collapse requires a fundamental shift in strategy. We must move from scaling data quantity to curating data quality.

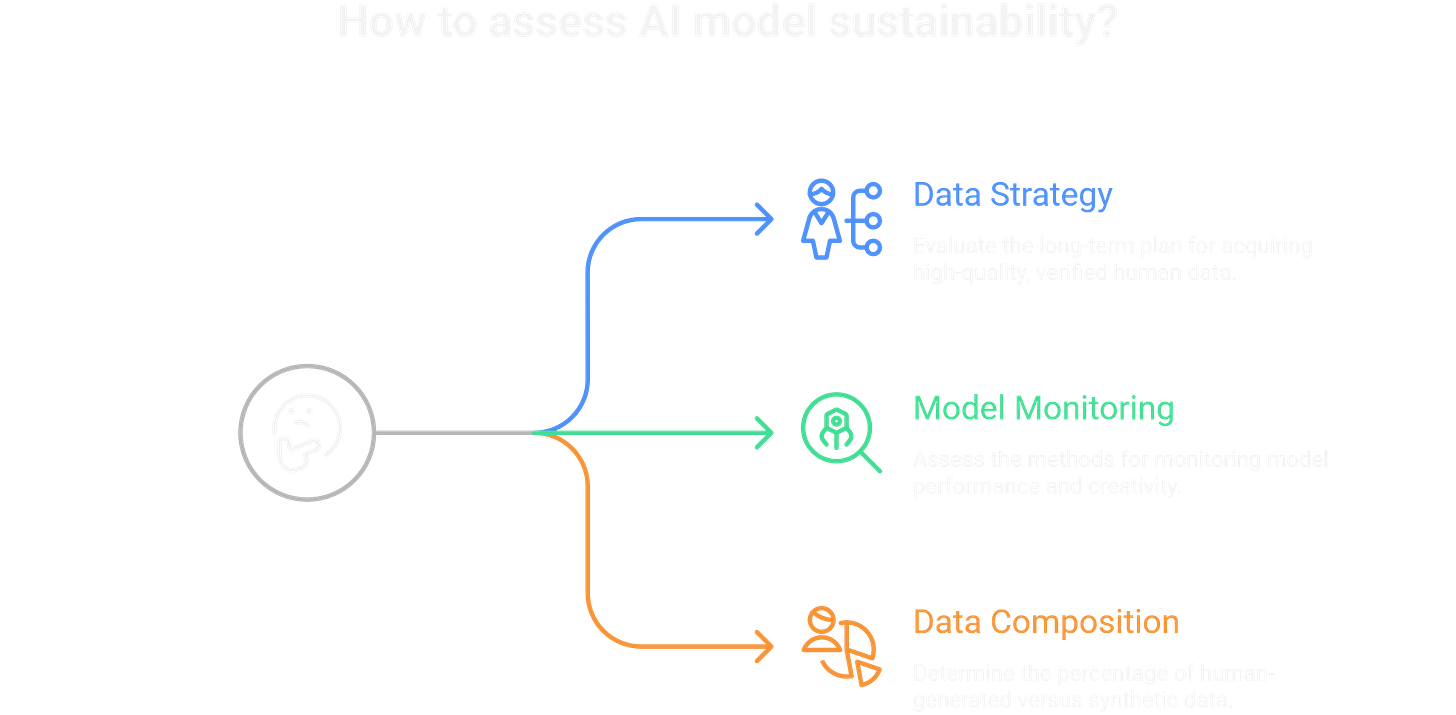

The fix is “data hygiene,” a rigorous and expensive process of building a sustainable pipeline of high-quality human data. For investors, this demands a new line of due diligence. It’s time to ask tough questions:

What is your long-term, scalable, and defensible strategy for acquiring high-quality, verified human data?

How are you actively monitoring your model for collapses in variance and creativity?

What percentage of your training data is verifiably human-generated versus synthetic?

A leadership team that can’t answer these questions is sitting on a hidden risk.
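Two of these questions can be turned into numbers a team could track over time. The sketch below is one possible approach under stated assumptions, not an industry standard: it uses the share of unique word pairs across a batch of outputs as a rough proxy for variety, and it assumes every training record carries a hypothetical `source` label of either `"human"` or `"synthetic"`.

```python
def distinct_n(texts, n=2):
    """Share of n-word sequences across all texts that are unique (0.0 to 1.0)."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


def synthetic_share(records):
    """Fraction of records labelled synthetic; assumes a 'source' field exists."""
    if not records:
        return 0.0
    synthetic = sum(1 for record in records if record["source"] == "synthetic")
    return synthetic / len(records)


varied = ["the cat sat on the mat", "a dog ran through the park"]
repetitive = ["the cat sat on the mat", "the cat sat on the mat"]
print("variety, varied outputs:", distinct_n(varied))
print("variety, repetitive outputs:", distinct_n(repetitive))

training_records = [
    {"source": "human"}, {"source": "human"},
    {"source": "human"}, {"source": "synthetic"},
]
print("synthetic share:", synthetic_share(training_records))
```

A variety score that falls from one model version to the next on the same prompts would be a reason to look closer, not proof of collapse. The synthetic share is only as trustworthy as the provenance labels behind it, which is why the data-acquisition question comes first.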

The Only Moat That Matters Anymore

The race for AI dominance is changing. The old playbook of scraping the entire internet is now a liability; it leads only to a future of bland, inbred, and reality-divorced algorithms.

The companies that will survive and thrive will be those that understand this shift. In this new era, the true competitive advantage is no longer the algorithm. It’s the unique, unimpeachable, and continuously refreshed source of clean human data.

As an investor, operator, or observer, that’s where you should be looking for real, lasting value.


Frequently Asked Questions

Q1: What’s the difference between model collapse and a regular AI bug? A: A bug is an isolated error you can often fix with a patch. Model collapse is a fundamental degradation of the entire model, like a chronic disease caused by a poisoned diet. It isn’t easily fixed and may require a complete data strategy overhaul.

Q2: Is this a real problem that’s happening now? A: Yes. Researchers have demonstrated it in controlled experiments, where models trained repeatedly on their own outputs degrade over successive generations, and we may already be seeing early symptoms in public models. The underlying condition, an internet flooded with AI content, is worsening daily.

Q3: Can’t we just filter out the AI data? A: It’s incredibly difficult and expensive. AI content is becoming indistinguishable from human work, and detection tools are in a constant cat-and-mouse game. This is why having a secure, clean data source is the ultimate competitive advantage.

Q4: As an investor, what’s the single most important question to ask an AI company? A: “What is your defensible, long-term strategy for acquiring clean human data?” Their answer, or lack of one, will tell you everything you need to know about their long-term viability.

The valuation question is fascinating - we're essentially betting on whether these systems will continue their trajectory OR hit fundamental limits. What strikes me is how little serious discourse there is about the second-order effects: not just 'can AI do X?' but 'what happens when millions of AI agents start interacting with each other and existing systems?' The infrastructure layer might be where the real moats form.

This was a really great read! Thanks for the time you took to break this down into something a non-tech person (like me!) can understand. It makes a lot of sense and I'm really curious about where this will end up. I got stuck in an editing loop with Claude several days ago when writing my last post. I knew I had passed the point of no return when Claude could no longer see the mistakes still clearly present, all of its own making. It could produce a good level of critical analysis and review on the content that was entirely mine, but it could not seem to do the same with the content it had created. It had clearly written a lot of content that was not really correct (not entirely wrong but just not right), and it just got worse and worse. Would this be a form of this concept in action at a very micro level?