Web Enumeration for Bug Bounty

ToxSec | A guide to manual and automated web enumeration.

0x00 Web Enumeration for Bug Bounty

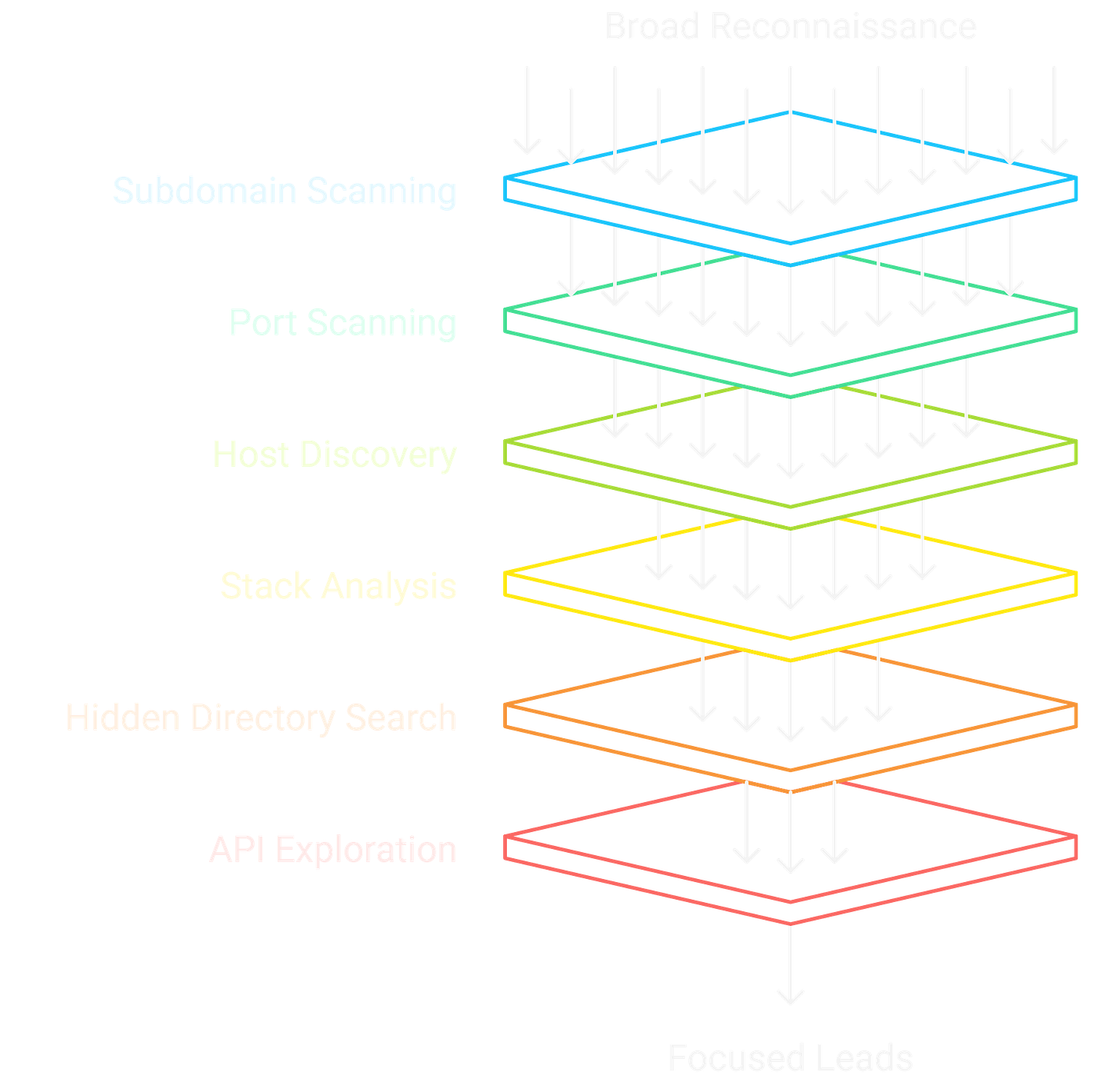

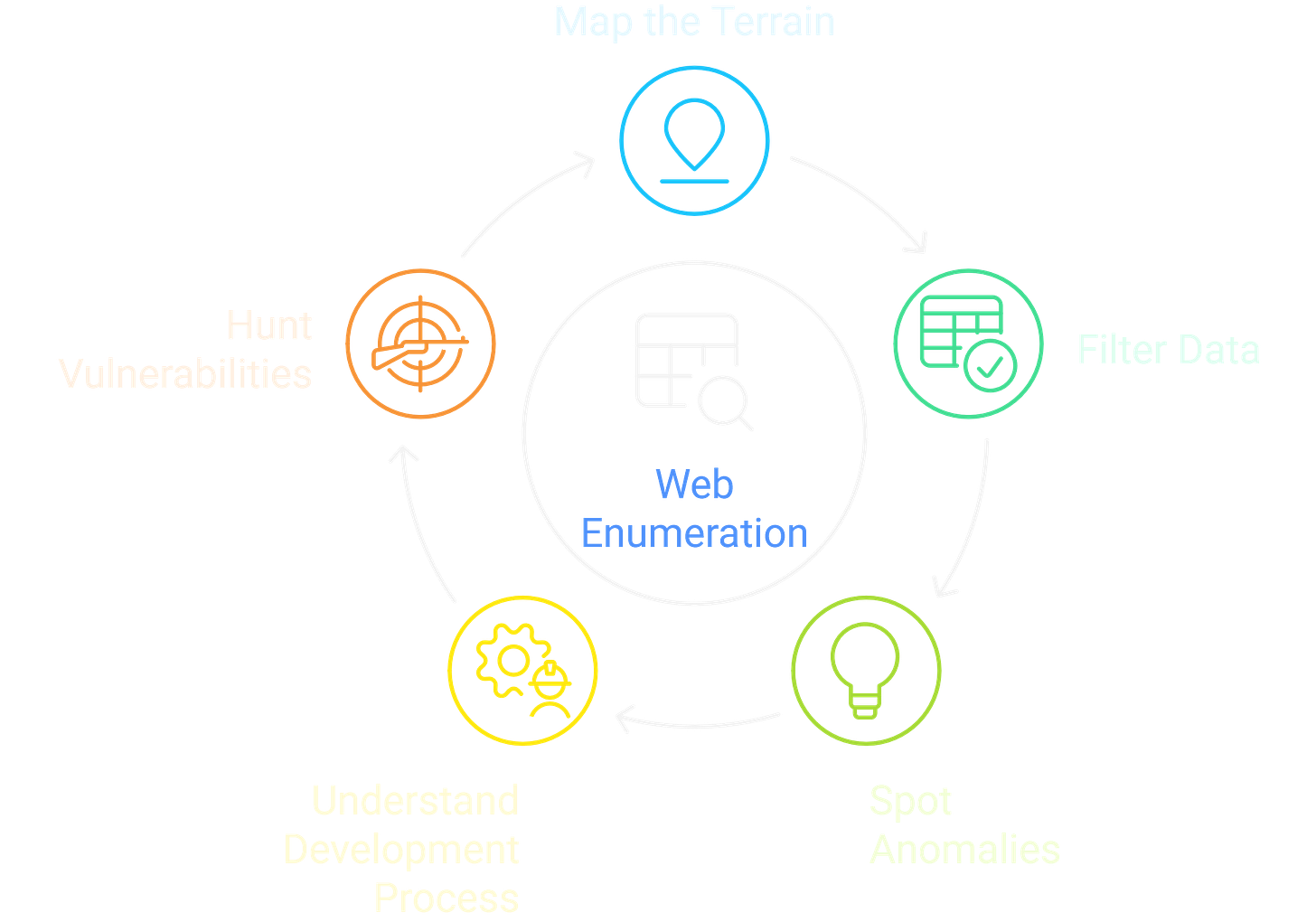

Every web hack begins with the same step: mapping the terrain. If you don’t know what exists, you don’t know where to strike. That’s what enumeration delivers — visibility.

Think of it in three layers:

Wide Recon: cast the net across subdomains, ports, and exposed hosts.

Deep Recon: dig into each host until you understand its stack, hidden directories, forgotten APIs.

Focused Recon: separate noise from value and decide which leads are worth your time.

The discipline is knowing when to stop. Enumeration will always return more data than you can use. The hackers who excel aren’t the ones with the longest wordlists; they’re the ones who can cut through the noise and recognize the anomalies that matter.

Enumeration is less about tools and more about mindset. You’re not just scanning a site — you’re uncovering the patterns of how a company builds, deploys, and forgets. And inside those patterns lie the bugs.

0x01 Subdomain Enumeration

In modern bug bounty programs, the real attack surface often lives off the main domain. Companies spin up staging sites, marketing campaigns, test servers, and SaaS integrations — but they rarely clean them up. That’s why subdomain hunting is step one.

Discovery

No single tool gives full coverage. Always combine multiple sources:

subfinder -d target.com -all -o subs.txt

amass enum -passive -d target.com -o amass.txt

cat subs.txt amass.txt | sort -u > all_subs.txt
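Different sources rarely agree on formatting: one emits uppercase names, another leaves wildcard prefixes or trailing dots, and a plain sort -u keeps those as separate entries. A minimal sketch of a cleanup step, assuming one hostname per line; the function name normalize_subs is hypothetical and not part of any tool above:

```shell
# normalize_subs: read hostnames on stdin, print a cleaned, de-duplicated list.
# Steps: lowercase everything (DNS names are case-insensitive), strip trailing
# whitespace, strip a leading "*." wildcard, strip a trailing dot, drop blanks.
# Assumes one hostname per line; the function name is illustrative.
normalize_subs() {
  tr '[:upper:]' '[:lower:]' |
    sed -e 's/[[:space:]]*$//' -e 's/^\*\.//' -e 's/\.$//' |
    grep -v '^$' |
    sort -u
}
```

It would slot in where the merge happens: cat subs.txt amass.txt | normalize_subs > all_subs.txt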

Probing Live Hosts

Once you’ve got the list, trim it down to what’s actually responsive:

httpx -l all_subs.txt -status-code -title -tech-detect -o hosts.txt

What to Look For

Prefixes: staging., dev., and qa. often lead to weaker controls.

Legacy providers: SaaS services that have been abandoned but still respond.

Regional oddities: country-specific subdomains with their own codebase.
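The prefix check is easy to script. The sketch below flags hostnames (or httpx output lines) containing an environment-style label. staging, dev, and qa come from the list above; stage, uat, and test are assumptions added for illustration, as is the function name:

```shell
# flag_prefixes: read hostnames or URLs on stdin, keep lines where a label
# (or a hyphenated part of one) looks like a non-production environment.
# The label must start at the beginning of the line or after ".", "/" or "-",
# may carry a numeric suffix (qa2), and must end at "." or "-".
# The keyword list is illustrative; extend it per target.
flag_prefixes() {
  grep -Ei '(^|[./-])(staging|stage|dev|qa|uat|test)[0-9]*[.-]'
}
```

For example: flag_prefixes < hosts.txt. Requiring a delimiter on both sides keeps names like device.target.com out of the results.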

Pro Tip

Don’t stop at DNS tools. JavaScript files often hardcode API endpoints or subdomains developers never meant to expose. Grep through JS during recon — those leaks can hand you fresh assets no scanner will find.
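As a rough sketch of that JS grep, the function below pulls every hostname under a given domain out of whatever JavaScript is piped into it. The name js_hosts is hypothetical, and the pattern only catches hostnames written out literally, not ones assembled at runtime:

```shell
# js_hosts DOMAIN: read JavaScript on stdin, print unique subdomains of DOMAIN.
# Dots in DOMAIN are escaped so "target.com" does not match "targetXcom".
# Only literal hostnames are found; concatenated strings are missed.
js_hosts() {
  domain_re="$(printf '%s' "$1" | sed 's/\./\\./g')"
  grep -Eoi "([a-z0-9_-]+\.)+${domain_re}" |
    tr '[:upper:]' '[:lower:]' |
    sort -u
}
```

Usage might look like: curl -s https://target.com/app.js | js_hosts target.com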

Subdomain enumeration is less about wordlists and more about persistence. The hunters who continuously monitor and revisit targets find the cracks others miss.
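Continuous monitoring mostly comes down to diffing today's list against the last one. A minimal sketch, assuming each sweep is saved to its own file with one hostname per line; new_hosts and the file names in the usage line are hypothetical:

```shell
# new_hosts OLD NEW: print hosts that appear in NEW but not in OLD.
# -F treats each line of OLD as a fixed string, -x requires a whole-line
# match, and -v inverts the match so only unseen hosts are printed.
new_hosts() {
  sort -u "$2" | grep -Fvx -f "$1"
}
```

For example: new_hosts subs_yesterday.txt all_subs.txt. Anything it prints is an asset that appeared since the previous sweep, which is usually the first thing worth probing.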

0x02 Directory and File Enumeration

Once you’ve locked onto a host, the next step is pulling apart its web tree. Hidden directories and forgotten files are where companies tend to leak the most. Admin panels, backups, old code — they’re rarely advertised, but they’re still there.

Tools of Choice

Brute-force discovery is the backbone here:

gobuster dir -u https://target.com -w /usr/share/seclists/Discovery/Web-Content/directory-list-2.3-medium.txt -t 50 -x php,asp,aspx,js,html

ffuf -u https://target.com/FUZZ -w /usr/share/seclists/Discovery/Web-Content/common.txt -fc 404

wfuzz -c -z file,/usr/share/seclists/Discovery/Web-Content/raft-large-directories.txt --hc 404 https://target.com/FUZZ

What’s Worth Chasing

/admin, /login, /backup, /old: cliché but real.

Sensitive files: .git/, .env, .bak, .old.

APIs: documentation endpoints like /swagger or /graphql.

Each entry tells a story about how the application evolved, what was left behind, and where security debt has piled up.
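Once a brute-force run finishes, the paths worth chasing can be pulled out of the results with one pattern. This is a sketch only: it assumes the discovered paths have already been reduced to one path or URL per line, and the function name flag_sensitive is hypothetical:

```shell
# flag_sensitive: read discovered paths or URLs on stdin (one per line) and
# keep the ones matching the categories listed above:
#   - .git/ directories and .env files
#   - files ending in .bak or .old
#   - /admin, /login, /backup, /old as full path segments
#   - /swagger and /graphql, including variants like /swagger-ui
flag_sensitive() {
  grep -Ei '(/\.git(/|$)|/\.env$|\.(bak|old)$|/(admin|login|backup|old)(/|$)|/(swagger|graphql)(/|$|[.-]))'
}
```

Matching on whole path segments is a deliberate choice here: it keeps /administrator and /environment from being reported as /admin and .env.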

Directory enumeration is about pattern recognition. Anyone can spray wordlists; the real edge comes from knowing which results point to a genuine attack path.

0x03 Parameter & JavaScript Enumeration

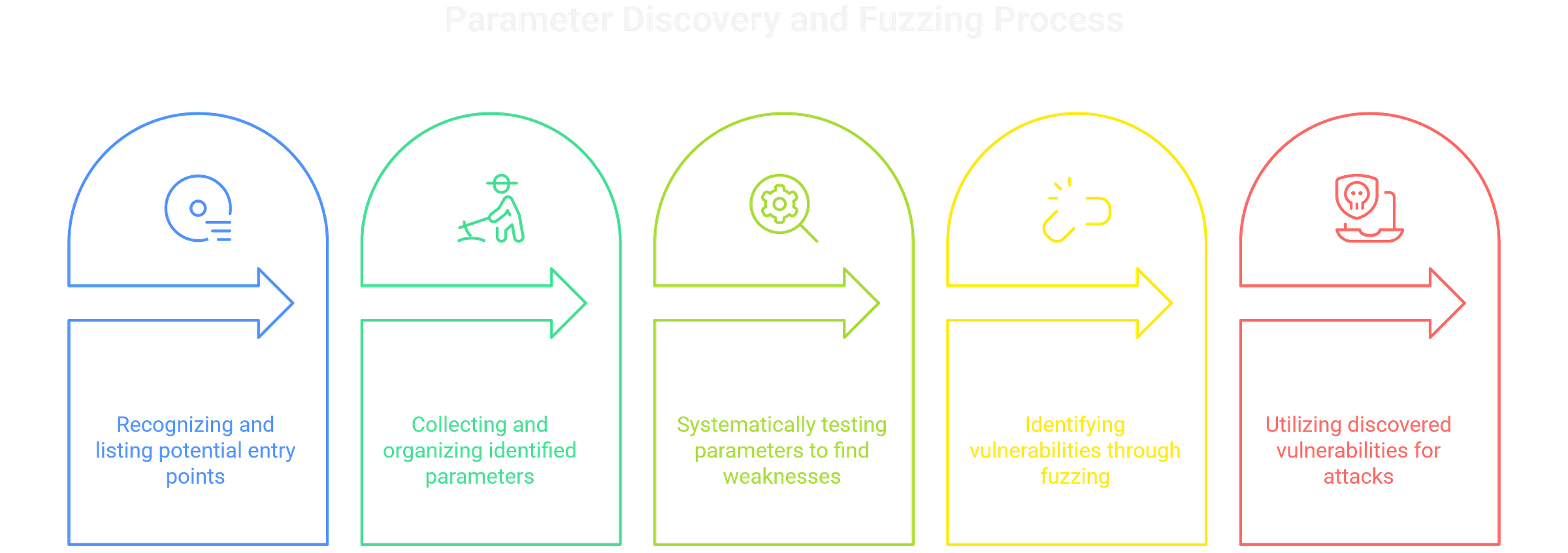

Directories expose structure, but parameters expose functionality. Most exploitable bugs hide here — in how applications handle IDs, filters, and hidden options. Pair that with JavaScript, and you’ve got a map of endpoints developers never meant to advertise.

Parameter Discovery

Parameters are gateways to injection and logic flaws. Harvest them first, then fuzz aggressively.

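# Collect archived URLs that carry query parameters

waybackurls target.com | grep "=" | tee urls.txt

# Reduce them to a unique list of parameter names

grep -oE '[?&][^=&#]+=' urls.txt | tr -d '?&=' | sort -u > params.txt

# Fuzz those parameter names against a page

ffuf -w params.txt:FUZZ -u "https://target.com/page?FUZZ=test" -fc 404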

IDs → prime for IDOR.

Filters → SQL injection or type juggling checks.

Names/values → XSS opportunities.
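That mapping can be turned into a first-pass triage. The sketch below reads URLs, extracts parameter names, and tags each with the bug class it most resembles. The name patterns are illustrative heuristics rather than an established ruleset, and triage_params is a hypothetical name:

```shell
# triage_params: read URLs on stdin, print "<hint><TAB><param>" for each
# unique parameter name. Hints follow the mapping above:
#   idor  - names that are "id" or end in _id / -id / Id (userId, order_id)
#   sqli  - names starting with sort, order, filter, where, limit
#   xss   - free-text style names: q, query, search, name, value, msg
#   other - everything else
# The patterns are rough heuristics; tune them per target.
triage_params() {
  grep -oE '[?&][A-Za-z0-9_.-]+=' |
    tr -d '?&=' |
    sort -u |
    awk '{
      p = tolower($0)
      if (p ~ /(^|[_-])id$/ || $0 ~ /[a-z]I[dD]$/) hint = "idor"
      else if (p ~ /^(sort|order|filter|where|limit)/) hint = "sqli"
      else if (p ~ /^(q|query|search|name|value|msg)$/) hint = "xss"
      else hint = "other"
      printf "%s\t%s\n", hint, $0
    }'
}
```

For example: waybackurls target.com | triage_params. The output is a starting order for manual testing, not a finding; a parameter tagged other can still be the vulnerable one.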

arjun is another reliable option for pulling hidden parameters when archives don’t deliver.

JavaScript Analysis

JS files are often more valuable than directory brute force. Developers hardcode secrets, reference staging endpoints, and leave breadcrumbs in comments.

curl -s https://target.com/app.js | grep -Eo "https?://[^\"' ]+"

Look for:

API endpoints (/api/v1/private/)

Hardcoded keys or tokens

Version numbers and library hints
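For the first item, relative API paths are easy to miss because the URL grep only catches absolute links. A minimal sketch that pulls quoted, API-looking paths out of a JS file: /api and /graphql come from this guide, the versioned /v1-style prefix is an added assumption, and js_paths is a hypothetical name:

```shell
# js_paths: read JavaScript on stdin, print unique string literals that
# start with /api, /graphql, or a version prefix such as /v2.
# A match must begin right after a quote character (", ', or backtick);
# cut then drops that leading quote from the output.
js_paths() {
  grep -Eo "[\"'\`]/(api|v[0-9]+|graphql)[A-Za-z0-9_./-]*" |
    cut -c2- |
    sort -u
}
```

Usage might look like: curl -s https://target.com/app.js | js_paths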

Don’t just scrape; read what you pull down. A single overlooked line in JS can turn into direct access to sensitive functionality.

0x04 Manual Enumeration with Burp Suite

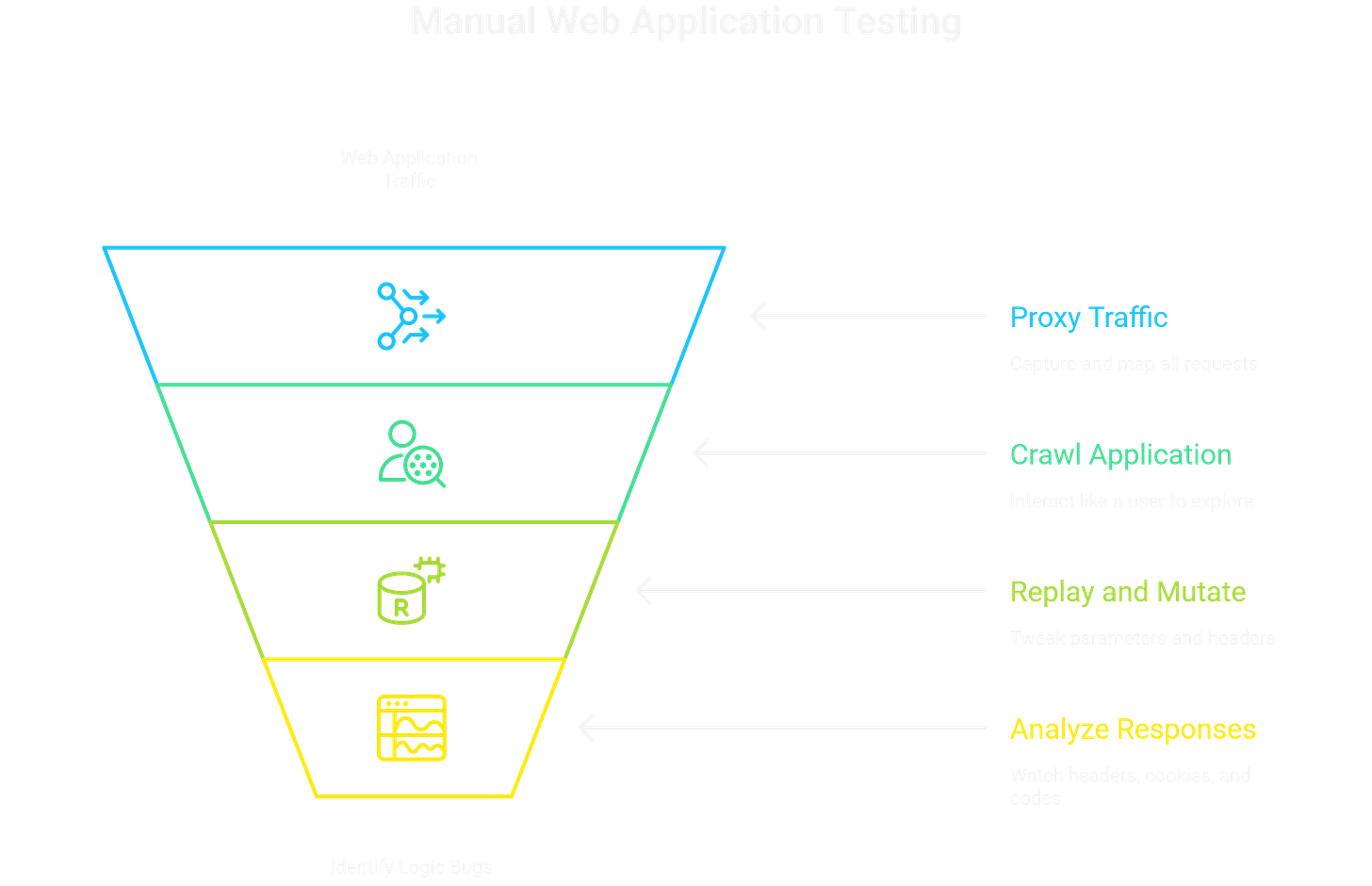

Automation gives you coverage; Burp gives you depth. Tools will find endpoints, but only manual exploration shows you how the application actually behaves. That’s where logic bugs, subtle state issues, and hidden attack paths reveal themselves.

Core Workflow

Proxy everything. Route traffic through Burp and build a live map of requests.

Crawl carefully. Don’t just spider — interact with the app like a user would: register, log in, reset passwords, change settings.

Replay and mutate. Use Repeater to tweak parameters, headers, and cookies one variable at a time.

What to Watch

Headers: verbose error messages, odd caching directives, debug flags.

Cookies: track how they change across requests; weak session handling is common.

Response codes: similar requests that return a mix of 200, 302, and 403 often hint at access control flaws.

Source comments: leftover notes from developers.
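The header check is the one item here that scripts cleanly outside Burp. A rough sketch that filters raw response headers down to the ones that tend to leak stack details, debug state, or caching policy. The header list is an assumption chosen for illustration, and flag_headers is a hypothetical name:

```shell
# flag_headers: read raw HTTP response headers on stdin and keep the ones
# that commonly reveal stack versions, debug state, or caching behavior.
# Carriage returns are stripped first because HTTP headers end in CRLF.
# The header list is illustrative, not exhaustive.
flag_headers() {
  tr -d '\r' |
    grep -Ei '^(server|x-powered-by|x-aspnet-version|x-debug[a-z-]*|cache-control|pragma):'
}
```

For example: curl -sI https://target.com | flag_headers. Running it against several endpoints of the same host and comparing the output is a quick way to spot the one that is served by something different.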

Why It Matters

Automated scans catch common exposures. Manual Burp work catches the weird stuff: a hidden role parameter, an endpoint that only appears after login, a workflow that breaks if you skip a step. Those subtleties are what move reports from “informational” to payout.

0x05 The Recon Trap: Too Much Data

The danger with web enumeration is drowning in it. Subdomains, endpoints, parameters, wordlists… the stream never ends. If you chase everything, you’ll never get to exploitation.

Veterans approach recon differently. They automate daily sweeps, log the results, but only chase the anomalies:

A parameter that doesn’t fit naming conventions.

A staging subdomain with a different tech stack.

A directory that responds differently than the rest.

That’s the signal. Everything else is noise.
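Spotting the directory that responds differently can be partly mechanical. The sketch below assumes scan results have already been reduced to three whitespace-separated columns (status, response size, path), which is not any tool's native format. It prints only the rows whose status and size combination occurs once; rare_responses is a hypothetical name:

```shell
# rare_responses: read "status size path" lines on stdin and print the lines
# whose status+size pair appears exactly once in the whole input.
# Every line is buffered, so counts are complete before anything is printed.
rare_responses() {
  awk '{
         key = $1 " " $2
         count[key]++
         line[NR] = $0
         keys[NR] = key
       }
       END {
         for (i = 1; i <= NR; i++)
           if (count[keys[i]] == 1) print line[i]
       }'
}
```

For example: rare_responses < results.txt, where results.txt is a hypothetical file in that three-column shape. A hundred identical 403s collapse to nothing, and the single 200 with an odd size is what remains.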

The key is triage. You don’t need to map every file and endpoint. You need to find the one misconfiguration that matters.

Enumeration isn’t about running more tools than the next hunter. Everyone has subfinder, ffuf, and nuclei. The edge comes from persistence and creativity: chaining recon sources, revisiting old results after scope changes, and noticing the outliers others skim past.

At its core, enumeration is about people, not ports. You’re mapping how developers name things, how teams deploy, and how organizations forget. The bugs live in those human patterns, not in the wordlists themselves.

0x06 Web Enumeration Reference

The workflow always expands, but a solid baseline saves time. These are the quick checks and commands worth keeping close.

Initial Manual Checks

curl -I http://target.com # Headers

curl -v http://target.com # Verbose

wget http://target.com/robots.txt # Robots.txt for hints

view-source:http://target.com # Manual source review (enter in the browser address bar, not the shell)
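The robots.txt check pays off faster when the listed paths are extracted rather than read by eye. A minimal sketch, assuming a conventional robots.txt layout; robots_paths is a hypothetical name:

```shell
# robots_paths: read a robots.txt on stdin, print the unique paths named in
# Allow and Disallow rules. Carriage returns, the directive name, and any
# trailing "# comment" are stripped; empty rules are dropped.
robots_paths() {
  tr -d '\r' |
    grep -Ei '^[[:space:]]*(dis)?allow:' |
    sed -E 's/^[^:]*:[[:space:]]*//; s/[[:space:]]*#.*$//' |
    grep -v '^$' |
    sort -u
}
```

For example: curl -s http://target.com/robots.txt | robots_paths. The output can be fed straight into a directory brute-force run as a small, target-specific wordlist.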

Quick Recon

whatweb http://target.com # Fingerprint tech stack

wafw00f http://target.com # Detect WAF presence

nikto -h http://target.com # Basic vuln scan

Directory & File Busting

gobuster dir -u http://target.com -w /usr/share/wordlists/dirbuster/directory-list-2.3-medium.txt -x php,txt,html -t 50

ffuf -u http://target.com/FUZZ -w /usr/share/seclists/Discovery/Web-Content/common.txt

dirb http://target.com /usr/share/dirb/wordlists/common.txt

Parameter Discovery

arjun -u http://target.com/page.php

ffuf -u "http://target.com/page.php?FUZZ=test" -w params.txt

Wordlists Worth Knowing

/usr/share/wordlists/dirbuster/directory-list-2.3-medium.txt

/usr/share/seclists/Discovery/Web-Content/common.txt

/usr/share/seclists/Discovery/Web-Content/raft-large-files.txt

These aren’t the only commands you’ll ever use, but they cover the 80/20. With these, you can fingerprint a target, uncover its hidden structure, and start pulling threads.

0x07 Debrief

Web enumeration is the foundation of bug bounty. Every critical report — from IDORs to SSRF to business logic flaws — starts with knowing what’s really out there. If you don’t map the terrain, every payload is just a blind shot.

The hunters who succeed aren’t the ones who collect the most data; they’re the ones who filter it best. Recon isn’t about hitting every endpoint — it’s about spotting the anomalies that others miss and following them until they break.

Think of enumeration as studying the fingerprints of a company’s development process. The subdomains they forget, the APIs they leave half-documented, the parameters they thought no one would try — that’s where the gaps live.

Done right, enumeration doesn’t just reveal targets. It teaches you how an organization builds, deploys, and neglects. And once you understand that, you’re no longer just scanning websites — you’re hunting vulnerabilities with intent.