Why Your AI Results Are Trash (And How to Fix Them)

A practical guide to getting better, more accurate results from any AI with context engineering

TL;DR: Stop fighting with your AI. Stop getting generic, useless answers that waste your time. It’s time to learn the one skill that makes AI actually work for you. This is how you go from a generic chatbot to a specialized assistant.

Garbage In, Garbage Out.

What is Context Engineering And Why Does It Matter?

I remember the first time I asked an AI to do something I thought was complex. I wanted it to analyze a customer complaint and suggest a solution based on our company’s policies.

The result was… disappointing. It was generic, missed the point, and felt completely useless.

The truth is, the problem wasn’t the AI. It was me.

Welcome to the world of Context Engineering. It is the single most important skill for getting high-quality results from any AI. It’s how you turn that generic chatbot into a specialized assistant that actually understands what you need.

The core of the issue is that AI models don’t think; they predict the next most likely word (technically, the next token) based on patterns from their training data and the context you give them. Give them generic context, and you get generic results. This is the golden rule of working with AI.

First, you need to understand the “context window.” Think of it as the AI’s short-term memory. It includes everything from your current conversation: your questions, its previous answers, and any data you’ve given it. But this memory is finite and measured in tokens (chunks of text, often pieces of words). Once a conversation exceeds the limit, something has to give: many chat tools drop or summarize the oldest messages, so instructions you gave early on can quietly fall out of view.
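To make the idea of older messages falling out of the window concrete, here is a minimal Python sketch of a sliding window over a chat history. It assumes a crude word count as the token measure (real tokenizers split text into subword pieces, so true counts run higher), and `count_tokens` and `fit_to_window` are hypothetical helper names, not any product’s API.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in (assumption): one token per whitespace-separated word.
    # Real models use subword tokenizers, so actual counts are higher.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in max_tokens; drop the oldest first."""
    kept: list[str] = []
    total = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(msg)
        if total + cost > max_tokens:
            break  # this message and everything older no longer fits
        kept.append(msg)
        total += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "Here is our return policy: final sale items cannot be returned.",
    "Got it. What would you like me to do with it?",
    "Draft a reply to this customer email.",
]
print(fit_to_window(history, max_tokens=20))
```

With a 20-token budget, the return policy (the first message) is the one that gets dropped, even though the final request depends on it. That is how an AI can “forget” something you told it early in a long conversation.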

How Can I Instantly Improve My Prompts?

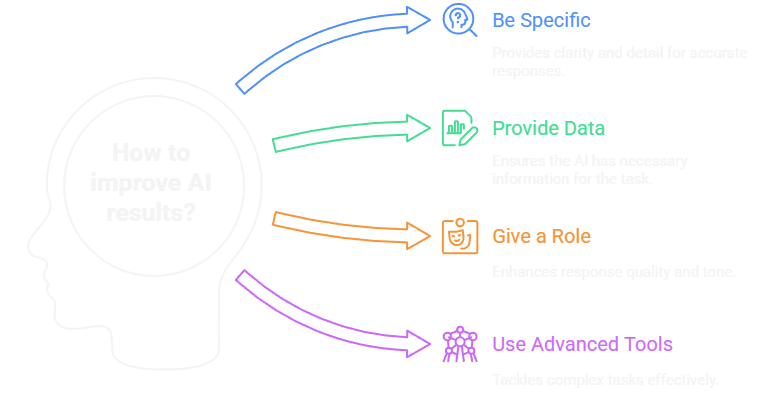

Getting better answers starts with a few core principles.

Are Your Questions Specific Enough? A detailed and unambiguous prompt will almost always outperform a vague one.

Vague Prompt: “Tell me about electric cars.”

Specific Prompt: “Compare the 2025 Tesla Model 3 Long Range with the 2025 Ford Mustang Mach-E Premium. I want to know the battery range in miles, the 0-60 mph time, and the starting MSRP in the US.”

Are You Providing the Right Data? Never assume an LLM knows about a specific article or email. The best way to ground an AI in facts is to provide the information directly.

Instead of: “Help me answer this customer email.”

Try: “Here is our return policy and the customer’s email below. Draft a friendly response that explains why they can’t return a final sale item: [paste policy and email here]”
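If you build prompts in code, “provide the data directly” simply means pasting the source text into the prompt string. A minimal sketch, assuming a hypothetical `build_reply_prompt` helper and made-up policy and email text:

```python
def build_reply_prompt(policy: str, email: str) -> str:
    """Hypothetical helper: paste the policy and the email into the prompt itself."""
    instructions = (
        "Here is our return policy and the customer's email below. "
        "Draft a friendly response that explains why they can't return a final sale item."
    )
    # Label each pasted source so the model knows which text is which
    return f"{instructions}\n\nRETURN POLICY:\n{policy.strip()}\n\nCUSTOMER EMAIL:\n{email.strip()}"


# Made-up example data for illustration only
policy = "Items marked Final Sale cannot be returned or exchanged."
email = "Hi, I'd like to return the jacket I bought on clearance last week."
print(build_reply_prompt(policy, email))
```

Labeling each pasted source starts to matter once you include more than one document: it lets both you and the model refer to “the policy” versus “the email” without ambiguity.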

Have You Given the AI a Role to Play? Assigning a persona tells the model how to frame its response, which can noticeably improve its tone and relevance.

Example: “Act as a friendly and encouraging personal trainer. A client is struggling with motivation. Write a short, encouraging text message I can send them.”
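In apps and scripts, a persona is often set once as a “system” message rather than repeated in every request. Many chat-style APIs accept a list of role-tagged messages like the one below, but field names and structure vary by provider, so treat this dict shape and the `with_persona` helper as assumptions rather than any specific SDK’s format.

```python
def with_persona(persona: str, request: str) -> list[dict[str, str]]:
    # Assumed message shape: a "system" message sets the persona,
    # then the "user" message carries the actual request.
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": request},
    ]


messages = with_persona(
    "Act as a friendly and encouraging personal trainer.",
    "A client is struggling with motivation. "
    "Write a short, encouraging text message I can send them.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```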

Is Your Prompt Easy for an AI to Read? Use simple formatting like headers or delimiters (###) to create clear, separate sections for the AI to follow.

###ROLE###
You are a financial analyst.

###ARTICLE TEXT###
[Paste the full text of a financial news article here...]

###YOUR TASK###
Based *only* on the article I provided, what are the top three risks mentioned?
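The same ### layout can be generated programmatically, which keeps section names consistent across prompts. A small sketch; `sectioned_prompt` is a hypothetical helper, not a library function:

```python
def sectioned_prompt(sections: dict[str, str]) -> str:
    # Wrap each section name in ### delimiters so the model can tell the parts apart.
    # Dicts keep insertion order, so sections appear in the order you list them.
    blocks = [f"###{name.upper()}###\n{body.strip()}" for name, body in sections.items()]
    return "\n\n".join(blocks)


prompt = sectioned_prompt({
    "role": "You are a financial analyst.",
    "article text": "[Paste the full text of a financial news article here...]",
    "your task": "Based *only* on the article I provided, what are the top three risks mentioned?",
})
print(prompt)
```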

How Do I Tackle More Complex Problems?

Once you’re comfortable with the basics, you can use advanced strategies.

Technique 1: How Can I Show the AI What I Want? (Few-Shot Learning) With few-shot learning, you provide the model with a few examples of the desired input/output format. This shows the AI the pattern you want it to follow.

I need you to classify these support tickets as “Urgent,” “High,” or “Medium.”

Ticket: “My entire website is down and I can’t access anything!” Priority: Urgent

Ticket: “I can’t figure out how to export my monthly report.” Priority: High

---

Now, classify this one:

Ticket: “The login page is giving me a 404 error and none of my users can get in.” Priority:
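Few-shot prompts are easy to assemble from a list of labeled examples. This sketch (the `few_shot_prompt` helper is hypothetical) rebuilds the ticket prompt and deliberately ends on `Priority:` so the most natural continuation is a single label. In practice you’d also want at least one “Medium” example so the model sees every label it may use.

```python
EXAMPLES = [
    ("My entire website is down and I can't access anything!", "Urgent"),
    ("I can't figure out how to export my monthly report.", "High"),
]


def few_shot_prompt(examples: list[tuple[str, str]], new_ticket: str) -> str:
    lines = ['I need you to classify these support tickets as "Urgent," "High," or "Medium."', ""]
    # Each worked example shows the exact input/output pattern to copy
    for ticket, priority in examples:
        lines.append(f'Ticket: "{ticket}" Priority: {priority}')
    # Leave the final label blank for the model to fill in
    lines += ["", "---", "Now, classify this one:", f'Ticket: "{new_ticket}" Priority:']
    return "\n".join(lines)


print(few_shot_prompt(
    EXAMPLES,
    "The login page is giving me a 404 error and none of my users can get in.",
))
```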

Technique 2: How Do I Connect My AI to External Data? (RAG) Retrieval-Augmented Generation (RAG) connects an LLM to an external knowledge base, like your company’s documents or the live internet. It retrieves the most relevant information and adds it to your prompt, which helps with outdated knowledge and lets the AI work with private data it was never trained on.
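Here is a toy version of the retrieve-then-prompt loop. Real RAG systems usually rank documents by embedding similarity in a vector store; this sketch swaps in simple word overlap so it runs with only the standard library. The policy snippets and the `retrieve`/`rag_prompt` helpers are made up for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase word set; a stand-in for embedding-based similarity
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    q = tokenize(query)
    # Rank documents by how many query words they share, best first
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Augment the prompt with only the retrieved snippets
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"CONTEXT:\n{context}\n\nQUESTION: {query}"
    )


docs = [
    "Final sale items cannot be returned or exchanged.",
    "Standard purchases may be returned within 30 days with a receipt.",
    "Our support team is available Monday to Friday, 9am to 5pm.",
]
print(rag_prompt("Can I return a final sale item?", docs, k=1))
```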

Technique 3: Can I Make Multiple AIs Work Together? (Chaining) Some problems are too big for one prompt. The solution is to break them down into a workflow. The output from one specialized AI prompt (or “agent”) becomes the input for the next.
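A chain is just a loop where each step’s output becomes the next step’s input. In this sketch, `call_llm` is a hypothetical placeholder that returns a canned string; you’d replace it with a real call to your provider’s SDK. The three steps are illustrative only.

```python
from typing import Callable


def call_llm(prompt: str) -> str:
    # Hypothetical placeholder: swap in a real model call here
    return f"<model output for: {prompt[:40]}>"


def run_chain(steps: list[str], initial_input: str,
              llm: Callable[[str], str] = call_llm) -> str:
    """Run each instruction in order, feeding every output into the next step."""
    current = initial_input
    for instruction in steps:
        prompt = f"{instruction}\n\nINPUT:\n{current}"
        current = llm(prompt)  # this output becomes the next step's input
    return current


steps = [
    "Summarize the customer complaint in two sentences.",
    "Using the summary, list which of our policies apply.",
    "Draft a reply to the customer based on the applicable policies.",
]
print(run_chain(steps, "I was charged twice for my order..."))
```

Passing the model call in as a parameter keeps each step testable with a fake function, and makes it easy to use a different model for different steps.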


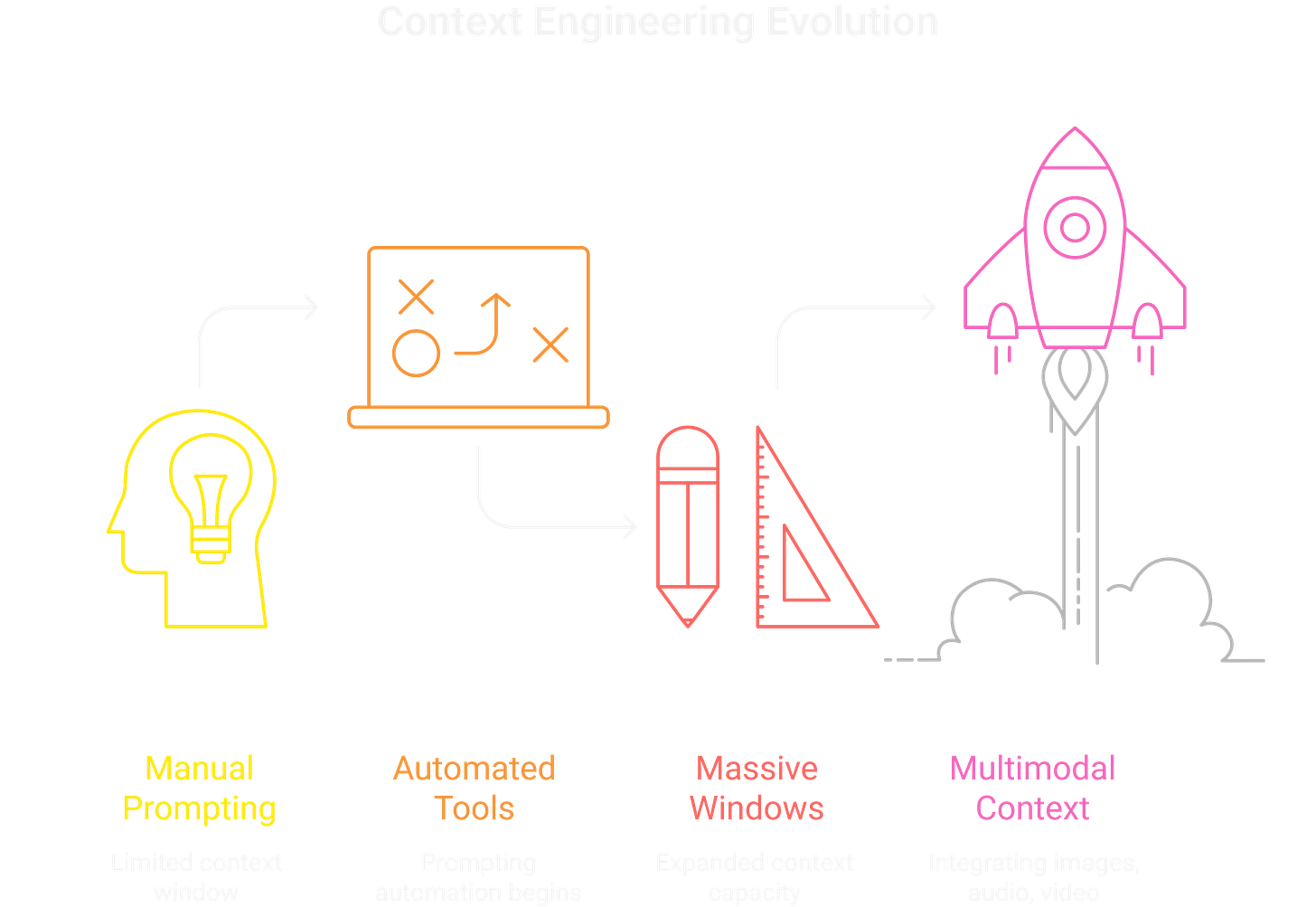

What Does the Future of AI Interaction Look Like?

Context engineering is evolving quickly. Tools are starting to automate prompting, context windows keep growing, and multimodal context (images, audio, and video) is becoming routine.

But even then, the core skill will remain the same: prioritizing the right information to get the right answer.

Why Is This the Most Important Skill to Learn?

Mastering context is a journey, but it starts with these simple steps. It’s the difference between fighting with your tools and building a true partnership with them. It’s how you make the AI useful instead of just a toy.

What’s the most frustrating AI answer you’ve ever received?

Share it in the comments below!

Frequently Asked Questions (FAQ)

Q1: What’s the difference between prompt engineering and context engineering? A: Prompt engineering is about crafting the perfect single question. Context engineering is broader; it’s about managing the entire conversation and data flow to the AI, including using tools like RAG and chaining for complex tasks.

Q2: How big is a typical context window? A: It varies greatly by model, from a few thousand tokens (a few pages of text) to over a million tokens (several books). This technology is improving rapidly.

Q3: Can I use these techniques with any AI model? A: Yes! The fundamental principles—specificity, providing data, assigning a role, and clear formatting—will improve your results with virtually any Large Language Model.

Q4: What’s the single most important thing I can do to get better AI answers? A: Be more specific and provide the necessary information directly in the prompt. Don’t make the AI guess. Give it all the facts it needs to succeed.
