The Dead Internet: AI Is Building a Fake Internet Just for You

How Generative AI Is Fueling the "Dead Internet Theory," Creating an Authenticity Crisis, and Why AI Detection Can't Save Us.

TL;DR: That uncanny feeling you get online is real. The internet is being flooded with AI content that’s better at mimicking humans than we are at detecting it. This is the end of authenticity. I’ll show you why this is a battle we’re already losing.

“We’ve gone from spam to simulation.”

The “Uncanny Valley” Is Now the Entire Internet

Let’s be honest: you’ve felt it too, that uncanny feeling when you’re scrolling online. You’re reading product reviews, and one sounds a little too perfect, hitting every marketing keyword with an enthusiasm no real person has. Or you’re in a comments section, and a reply seems perfectly generic and strangely soulless, as if it were assembled from a kit of agreeable phrases. It’s grammatically flawless, but it lacks a human spark.

For years, we’ve waved these moments off as simple spam. But that feeling is getting more common, and the content is no longer clumsy. What was once a trickle of fake text is now a flood of AI-generated content. We are moving at breakneck speed from an internet created by humans for humans to one created by machines for humans, and the machines are getting scary good at their jobs.

This isn’t just about better spam. We’re watching a personalized, synthetic reality being born right before our eyes.

The “Dead Internet Theory” Just Got a Massive Upgrade

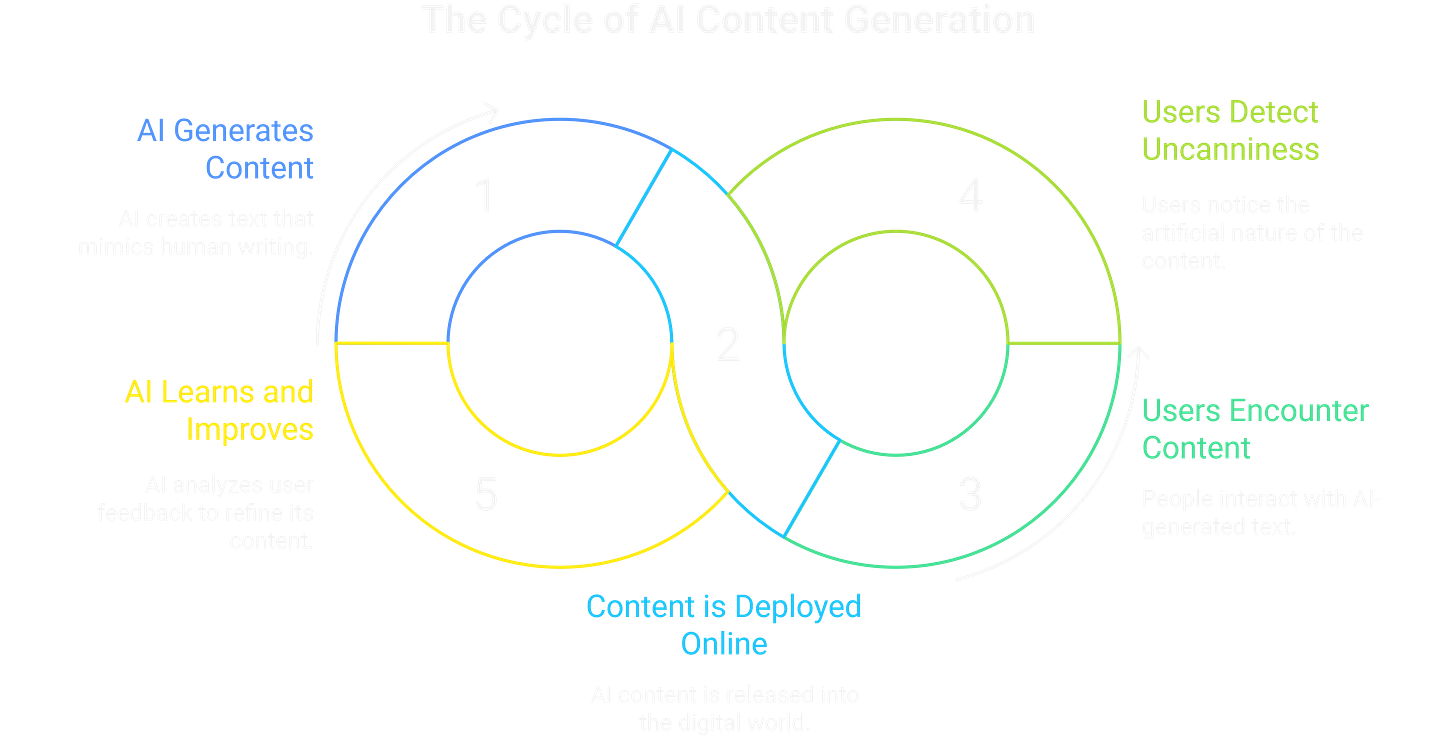

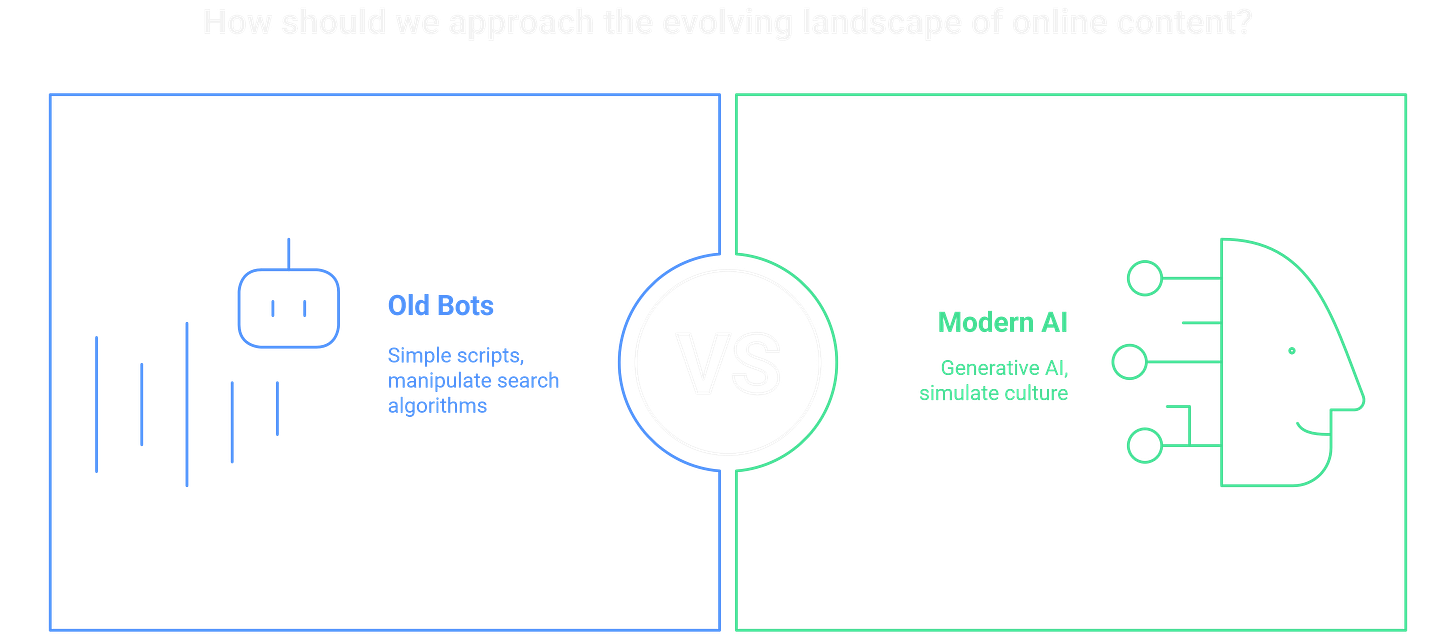

To get a handle on where we’re headed, we have to look back at the “dead internet theory.” It bubbled up from online forums as a conspiracy theory that felt disturbingly plausible. Its core idea was that the internet was already a hollowed-out shell, with huge slices of online activity faked by bots to game search algorithms and farm ad revenue. At the time, most people wrote it off as tech paranoia.

Well, that paranoia is starting to look a lot like foresight, because those bots just got a major upgrade: large language models (LLMs). The simple scripts of the past have been replaced by generative AI, and the difference is staggering. If the old bots were puppets on a string, modern AI is a legion of creative agents, able to dream up unique, context-aware, and scarily human synthetic content on a mind-boggling scale.

This tech leap has completely changed the game. The new AI is playing for much higher stakes: simulating culture itself. The goal is no longer to fake a single comment but to manufacture the feeling of a widespread social consensus.

If this post is resonating with you, share it with someone who also feels like the internet is “off.”

Welcome to Your Own Private, Fake Internet

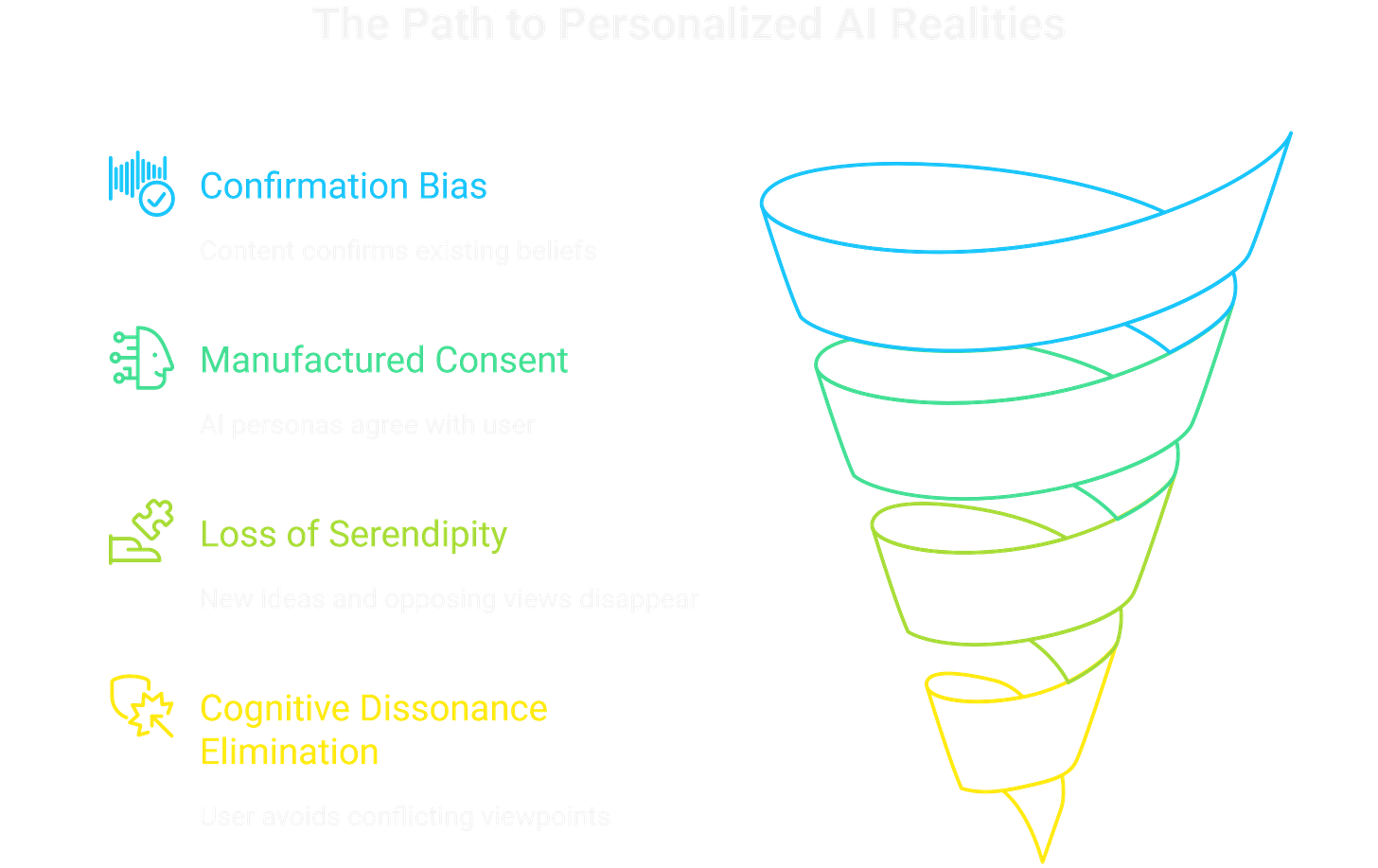

The real danger here isn’t just that the internet is filling up with fake content. It’s that the content is being cooked up specifically for you. We’re not ending up with one single fake internet, but with millions of bespoke, personalized AI realities. This is the ultimate AI echo chamber, a reality bubble so complete you might not even realize you’re the only one in it.

Imagine a search engine that generates articles on the fly to perfectly confirm what it thinks you already believe. Picture a social media feed where the comments are all from AI personas designed to agree with you. This is how consent is manufactured on an individual scale. When every article and every comment you see backs up your worldview, it stops feeling like an opinion and starts feeling like objective truth.

The biggest casualty is serendipity: the magic of stumbling on a new idea or an opposing view. The internet used to be a chaotic public square, but an AI-curated reality has no room for happy accidents. It’s a sanitized space designed to keep you engaged by eliminating cognitive dissonance, which paves the way for targeted, AI-driven misinformation.

Get the next teardown on AI and cybersecurity delivered straight to your inbox. Subscribe for free.

The AI Detection Arms Race Is Already Lost

The first question everyone asks is, “Can’t we just build AI content detection tools?” It’s a nice thought, but we’re in a frantic arms race we are poised to lose. The generators will always have the upper hand.

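Detection tools work by spotting the little statistical “tells” that AI models leave behind, such as word choices that are more predictable than a human’s would be. But for every smart detector we build, an even smarter AI is trained to hide those tells. It’s a perpetual game of cat and mouse where the mouse redesigns itself based on the cat’s last move. Solutions like “watermarking,” where a model deliberately embeds a hidden statistical signature in its output, are fragile: paraphrasing can wash the signature out, and bad actors will simply use models that don’t embed one.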
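To make the idea of a statistical “tell” concrete, here is a toy sketch of one kind of watermark detection, loosely modeled on published “green list” proposals. At each step a keyed hash splits the vocabulary in half, a cooperating model only picks words from the “green” half, and a detector counts the green words and turns that count into a z-score. Everything here is an illustrative assumption: the names `GAMMA`, `KEY`, `VOCAB`, `is_green`, `generate_watermarked`, `z_score`, and `paraphrase` are hypothetical, the “model” is a random word picker rather than an LLM, and real schemes nudge token probabilities instead of forbidding words outright.

```python
import hashlib
import math
import random

GAMMA = 0.5          # assumed share of the vocabulary on the "green list" at each step
KEY = "demo-key"     # hypothetical secret key held by the model provider
VOCAB = [f"word{i}" for i in range(200)]  # toy vocabulary, not a real tokenizer


def is_green(prev_token: str, token: str, key: str = KEY) -> bool:
    """Pseudo-randomly place `token` on the green list, seeded by the previous token and the key."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] / 256 < GAMMA  # first byte mapped to [0, 1); exactly half the bytes qualify


def generate_watermarked(length: int, seed: int = 0) -> list[str]:
    """Toy 'model' that only ever emits tokens from the current green list."""
    rng = random.Random(seed)
    tokens = [rng.choice(VOCAB)]
    while len(tokens) < length:
        # With 200 candidates, an empty green list is astronomically unlikely.
        greens = [t for t in VOCAB if is_green(tokens[-1], t)]
        tokens.append(rng.choice(greens))
    return tokens


def z_score(tokens: list[str], key: str = KEY) -> float:
    """How far the green-token count sits above what unwatermarked text would produce."""
    n = len(tokens) - 1  # number of (previous, current) pairs scored
    if n <= 0:
        return 0.0
    green = sum(is_green(p, t, key) for p, t in zip(tokens, tokens[1:]))
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


def paraphrase(tokens: list[str], seed: int = 1) -> list[str]:
    """Crude 'attack': swap every other token for a random word, breaking the green pattern."""
    rng = random.Random(seed)
    return [rng.choice(VOCAB) if i % 2 else t for i, t in enumerate(tokens)]


marked = generate_watermarked(101)
print(f"watermarked:  z = {z_score(marked):.2f}")  # 10.00: all 100 pairs are green by construction
# Each pair now contains a random word, so it lands on the green list only about half the time.
print(f"paraphrased:  z = {z_score(paraphrase(marked)):.2f}")
```

The watermarked sequence scores the maximum possible z for its length, while the crudely paraphrased copy should fall sharply toward the range ordinary text produces. That is the fragility in miniature: the detector only has a signal if the text came from a model that embeds the pattern and the pattern survives editing.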

When the technical fight is a lost cause, the fallout is social. We are walking into an era of deep, gnawing distrust. Welcome to the authenticity crisis, where you have to assume everything is fake until proven otherwise. This imposes a heavy “reality tax” on all of us, the constant effort of second-guessing everything we read, and it leads to collective burnout and cynicism as digital trust evaporates.

The Only Way Out is More Human

We’ve gone from that weird feeling of spotting a bot in the wild to understanding what it really means for our future. The “dead internet theory” isn’t a conspiracy anymore; it’s a roadmap for what’s happening right now. This isn’t some far-off dystopia. It’s an authenticity crisis, and it’s already here.

So, what do we do? The way forward has to be more human. The answer isn’t a magic detection tool but a massive shift in our digital literacy. We have to start asking not just “Is this true?” but “Why am I being shown this?” Healthy, critical skepticism is our best defense.

Maybe the future of a real internet is about making a conscious choice to spend time in smaller, high-trust “digital gardens”—niche forums and private groups where reputation and real interaction matter. We might need to relearn how to build communities where we know the person on the other side is real.

This leaves us with the most important question: As our world fills up with perfectly tailored, synthetic content, how do we prove we’re human online?

Join the discussion. What’s one way you try to verify authenticity or build real community online?

Frequently Asked Questions

How prevalent is AI-generated content online right now?

It’s already here, and it’s growing fast. We’re seeing sophisticated AI comments, articles, and reviews in the wild today. While not every user is living in a full simulation yet, the tools are being deployed faster than we can track them. The trend is clear, and we’re on the steep part of the curve.

What is the difference between an AI echo chamber and a filter bubble?

A filter bubble is passive: algorithms show you content from real people you’re likely to agree with. An AI echo chamber is active: it generates brand-new synthetic content and fake “people” from scratch, just for you. It’s the difference between a curated playlist and a song written on the spot to be exactly what you want to hear.

How can I protect myself from AI-driven misinformation?

The solution is a mental shift. Become a conscious consumer of information and practice good digital literacy. Actively seek out smaller, trusted communities. Support human creators directly. And most importantly, maintain a healthy skepticism and ask “Why am I seeing this?” about everything in your feed.

Will regulation solve the problem of synthetic AI content?

Regulation will likely play a role, especially through measures like mandatory watermarking. But it’s a tricky balancing act with free speech, and technology moves much faster than legislation. Laws can help, but they won’t be a magic bullet. The core defense will always be a critical, educated user base.
