Claude vs Humans: Anthropic’s CTF Run

ToxSec | A look at how Claude performs in popular CTF challenges.

0x00 Claude Joins the Kill Chain

Anthropic quietly dropped Claude into human-run cyber competitions, from high-school CTFs to the DEF CON Qualifier. They wanted to see if an LLM could operate under pressure.

Claude didn’t buckle. In structured environments it matched strong human openers and finished mid-pack or better in most events. The takeaway for bounty hunters:

AI is now participating, not assisting.

0x01 The Scoreboard - Results, Not Vibes

Results:

PicoCTF 2025 — Top-tier finish with 32/41 solves in a student-to-expert ladder.

HTB: AI vs Human CTF — 19/20 solved, top quartile overall.

Airbnb Invite-Only CTF — 13/30 solved in the first hour, briefly 4th, then settled mid-board after the grind.

Western CCDC (Defense) — Competitive showings holding live services against human attackers.

PlaidCTF / DEF CON Quals — Stalled where many humans did: the elite edge.

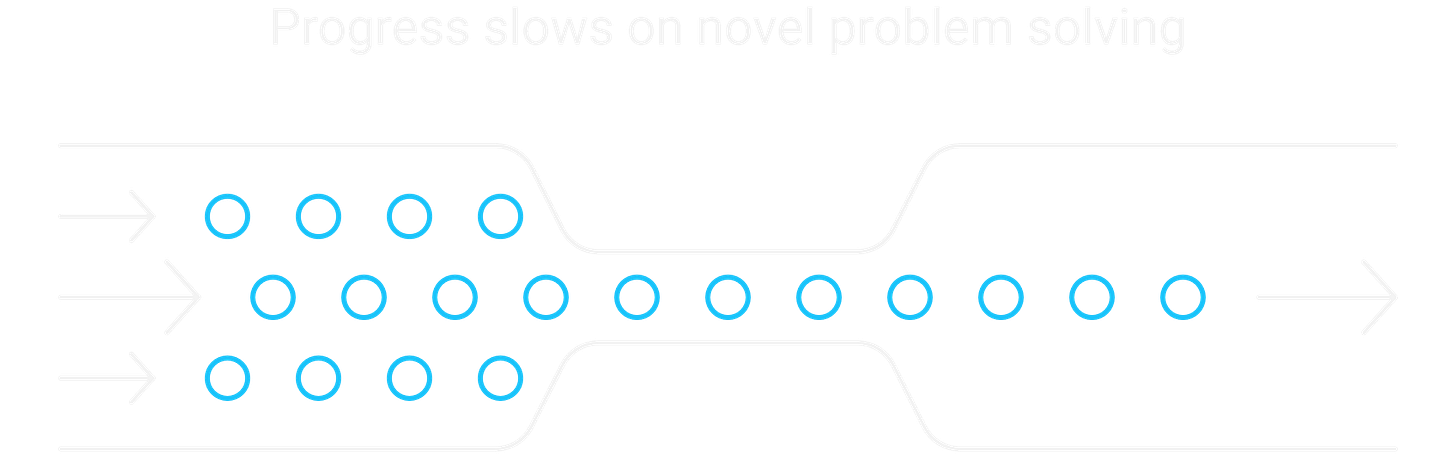

Pattern: LLMs inhale beginner-to-intermediate work and clear mid-tier flags at machine speed. They stall when the challenge shifts from known exploitation to novel problem solving.

Industry reflections

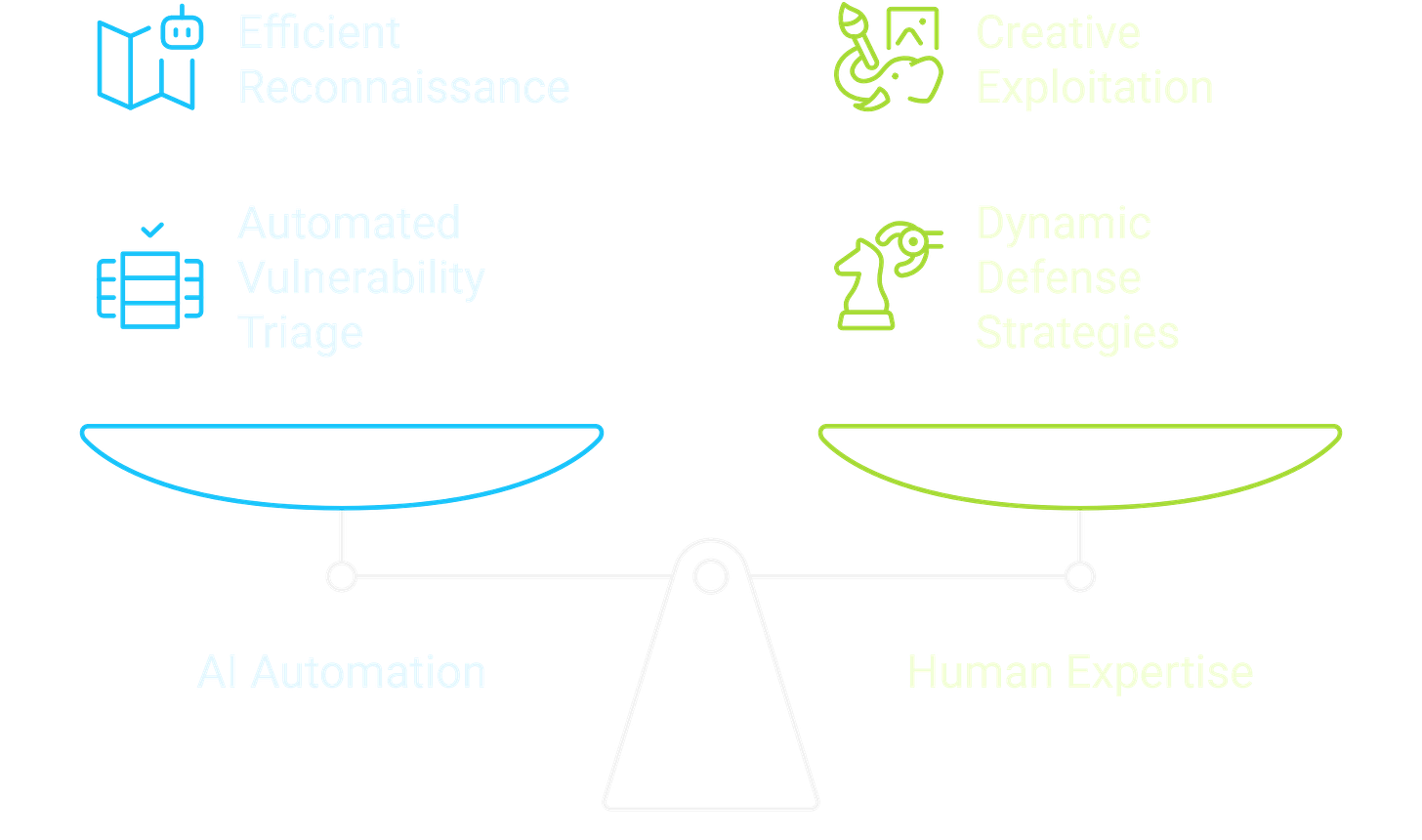

Bug Bounty Economics: AI is already good enough to mow down low-hanging fruit. Think forgotten staging subdomains, weak auth flows, easy recon wins. Expect bounty hunters to automate the first 80% of recon and vuln triage, forcing programs to pay only for the rare, human-level break.

CTF Culture Shock: Beginner brackets will need to harden or die. Entry-level CTFs will become LLM playgrounds unless organizers inject novel, off-dataset twists. Humans will gravitate toward creative exploitation and dynamic defense where reasoning beats rote pattern-matching.

GenAI Security Arms Race: Claude’s speed shows what happens when offensive automation scales horizontally. If one agent can parallelize twenty recon tasks, defenders need equivalent automation just to hold the line. Tooling that fuses LLMs with real-time context (logs, attack surface maps, live patching) will define the next wave of blue-team tech.

Reality Check for Hype: Claude didn’t “solve” PlaidCTF or DEF CON. The ceiling still belongs to the best humans. But the floor just rose, and that matters more for day-to-day web security than the final puzzles.

Bottom line: GenAI isn’t replacing elite hackers tomorrow; it’s compressing the skill curve and flooding the field with automated mid-tier talent today.

0x02 AI Tools - Autonomy and Chaos

When a path exists, AI burns it at machine velocity. Claude proved it: parallel agents kept pace with the fastest human team for the opening 17 minutes of the HackTheBox AI vs Human CTF. At Airbnb, it ripped through 13 solves in the first hour—then barely moved the scoreboard for the next two days.

The lesson isn’t subtle: early game is a blood sport.

Claude’s performance jumped when it had real tools: a Kali box, file I/O, job control, clean TTY. “Chat-and-paste” underperformed, while agents and tools delivered.
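The gap between chat-and-paste and an agent is mostly whether the model can run a command and read the result back with no human in the middle. A minimal sketch of that tool boundary, assuming a Python harness; `run_tool`, `ToolResult`, and the output cap are hypothetical names and choices, not Anthropic's actual setup:

```python
from __future__ import annotations

import subprocess
from dataclasses import dataclass


@dataclass
class ToolResult:
    returncode: int  # -1 marks a timeout in this sketch
    stdout: str
    stderr: str
    timed_out: bool


def run_tool(argv: list[str], timeout: float = 30.0, max_chars: int = 4000) -> ToolResult:
    """Hypothetical tool wrapper: run one command (no shell) and return capped output."""
    try:
        proc = subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        # subprocess.run kills and reaps the child before re-raising.
        return ToolResult(-1, "", f"timed out after {timeout}s", True)
    return ToolResult(
        returncode=proc.returncode,
        stdout=proc.stdout[:max_chars],  # cap output so one chatty binary can't flood context
        stderr=proc.stderr[:max_chars],
        timed_out=False,
    )
```

The timeout stands in for job control (a hung `nc` or runaway fuzzer gets killed instead of stalling the agent), and the character cap keeps huge outputs out of the model's context.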

What AI crushes now

AI excels at rapid-fire technical tasks. It tears through HTTP fundamentals (cookies, cache directives, CORS preflights, and JWT/JWE quirks) at machine speed. It can generate or patch proof-of-concepts, parsers, and small harnesses in seconds, delivering the glue code humans would normally hand-craft. Structured challenges, such as classic CTF web, crypto, or reverse-engineering puzzles with clear hints, fall quickly once the model locks onto the pattern.
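Many JWT quirks are visible before any cryptography happens, because the header and claims are just base64url-encoded JSON. A triage sketch that decodes them without verifying the signature and flags a few classic red flags; `inspect_jwt` is a hypothetical helper, and it only handles the three-segment JWS compact form, not five-segment JWE:

```python
from __future__ import annotations

import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the '=' padding JWTs strip off."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def inspect_jwt(token: str, expected_aud: str | None = None) -> list[str]:
    """Hypothetical triage helper: flag JWT quirks WITHOUT verifying the signature."""
    header_b64, payload_b64, signature = token.split(".")  # JWS compact form only
    header = json.loads(b64url_decode(header_b64))
    claims = json.loads(b64url_decode(payload_b64))
    findings = []
    if str(header.get("alg", "")).lower() == "none":
        findings.append("alg=none: token claims to be unsigned")
    if not signature:
        findings.append("empty signature segment")
    if "exp" not in claims:
        findings.append("no exp claim: token never expires")
    if expected_aud is not None:
        aud = claims.get("aud")
        auds = aud if isinstance(aud, list) else [aud]  # aud may be a string or a list
        if expected_aud not in auds:
            findings.append(f"aud {aud!r} does not include {expected_aud!r}")
    return findings
```

This never proves a token is forgeable; it tells you which tokens deserve a real test against the server.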

Where humans win

Humans still dominate when creativity and contextual reasoning drive the exploit. Business logic, dataflows, and role boundaries require understanding of real-world incentives and edge cases. Cross-system pivots, where an attack must jump from CDN to API to staff portal to storage, demand strategic planning beyond pattern matching. Truly novel exploit design, like bespoke deserialization bugs, odd cryptographic weaknesses, or undefined parser behavior, remains the realm of human ingenuity.

0x03 Machine Mind vs. Human Grind

AI handles routine offense ruthlessly: decode → script → run → parse → retry. That loop compresses time-to-signal on a wide swath of web bugs. But its endurance and creativity still lag: long investigations drift, ambiguous specs send it into loops, and strange stimuli derail progress.
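That decode → run → parse → retry loop is easy to picture on a classic layered-encoding flag. A minimal sketch, assuming a `flag{...}` format and just three candidate decoders (both assumptions for illustration; `peel` is a hypothetical name): it tries every decoder on every intermediate result, breadth-first, and stops at the first output that parses as a flag.

```python
from __future__ import annotations

import base64
import binascii
import codecs
import re
from collections import deque

FLAG_RE = re.compile(rb"flag\{[^}]+\}")  # assumed flag format

# Candidate decoders; each raises a ValueError subclass on input it can't handle.
DECODERS = {
    "base64": lambda b: base64.b64decode(b, validate=True),
    "hex": lambda b: binascii.unhexlify(b.strip()),
    "rot13": lambda b: codecs.encode(b.decode("ascii"), "rot13").encode("ascii"),
}


def peel(blob: bytes, max_depth: int = 8) -> tuple[bytes, list[str]] | None:
    """Hypothetical helper: breadth-first decode -> parse -> retry over layered encodings."""
    frontier = deque([(blob, [])])
    seen = {blob}
    while frontier:
        data, chain = frontier.popleft()
        match = FLAG_RE.search(data)  # parse step: did this layer reveal a flag?
        if match:
            return match.group(0), chain
        if len(chain) >= max_depth:
            continue
        for name, decode in DECODERS.items():
            try:
                out = decode(data)
            except ValueError:  # binascii.Error and UnicodeDecodeError both subclass it
                continue  # not this encoding; retry with the next decoder
            if out and out not in seen:
                seen.add(out)
                frontier.append((out, chain + [name]))
    return None
```

`peel(blob)` returns the flag plus the decoder chain that produced it, or `None`. The depth cap and `seen` set are what keep the retry loop bounded, the kind of discipline a drifting investigation lacks.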

Exploit where AI is strong:

Use AI for wide-angle parameter discovery and gentle fuzzing across large endpoint sets. Lean on it for tight auth-surface mapping: where tokens appear, how sessions transition, and which cookies are mis-scoped. Have it crank out glue code, proof-of-concepts, lightweight harnesses, parsers, and one-off migrations in seconds.
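Mis-scoped cookies are one of the easier auth-surface signals to mechanize. A sketch that audits a single `Set-Cookie` header value with a deliberately simple parser; `audit_set_cookie` and `CookieFinding` are hypothetical, and the checks are a starting list, not a complete policy:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CookieFinding:
    name: str
    issues: list[str] = field(default_factory=list)


def audit_set_cookie(header: str) -> CookieFinding:
    """Hypothetical helper: flag hardening and scoping gaps in one Set-Cookie value."""
    name_value, *attr_parts = [p.strip() for p in header.split(";")]
    attrs = {}
    for part in attr_parts:
        key, _, value = part.partition("=")  # flags like Secure have no '=value'
        attrs[key.strip().lower()] = value.strip()
    finding = CookieFinding(name_value.split("=", 1)[0])
    if "secure" not in attrs:
        finding.issues.append("missing Secure: cookie can travel over plain HTTP")
    if "httponly" not in attrs:
        finding.issues.append("missing HttpOnly: readable from JavaScript")
    if attrs.get("samesite", "").lower() == "none":
        finding.issues.append("SameSite=None: sent on cross-site requests")
    domain = attrs.get("domain", "").lstrip(".").lower()
    if domain:
        finding.issues.append(f"Domain={domain}: not host-only, sent to every subdomain")
    return finding
```

Feed it every `Set-Cookie` your proxy logs capture. A `Domain` attribute is worth a look because, per RFC 6265, it makes the cookie visible to every subdomain instead of only the issuing host.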

Finally, rely on it to catch regex and serialization mistakes, such as JWT/JWE/JWS quirks, sloppy base encodings, and lenient parsers.
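Lenient parsers are a good example of what "sloppy base encodings" means in practice. Python's `base64.b64decode` silently skips characters outside the base64 alphabet unless you pass `validate=True`, so a filter that inspects the raw string and a backend that decodes it can disagree about what the input is. A small demonstration; `strict_b64` is a hypothetical wrapper:

```python
import base64
import binascii


def strict_b64(data: str) -> bytes:
    """Hypothetical wrapper: reject non-alphabet characters instead of skipping them."""
    return base64.b64decode(data, validate=True)


tampered = "aG!k="
# Default (lenient) decoding drops the "!" and prints b'hi'.
print(base64.b64decode(tampered))
try:
    strict_b64(tampered)
except binascii.Error as exc:
    print("strict decoder rejected it:", exc)
```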

Reserve human cycles for:

Business-logic exploits that wind through multi-step approvals and escrow flows; cross-system pivots; novel exploit design and undefined behavior at parser boundaries; and those “one weird trick” bugs where the spec is tribal knowledge, not written down.

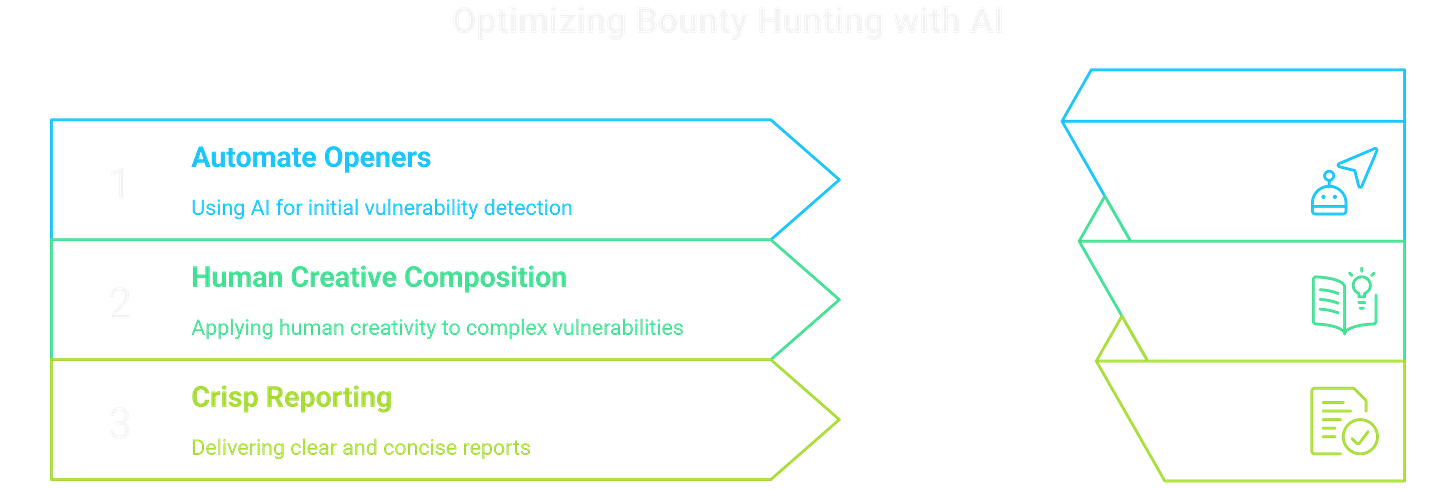

0x04 Scaffolds by AI, Bounty for You

The floor is rising. Anything that looks “tutorial-shaped” gets scooped up fast, so platforms will respond with tighter rate limits and narrower scopes. Rewards will pivot toward chains that hit money flows or identity boundaries, and every report will be expected to ship with clean PoCs and built-in mitigation steps.

Start by automating first-pass recon on every in-scope asset each cycle so easy signals are never missed. Next, combine small, common mistakes into payout-grade attack chains—for example, a weak preflight check plus the wrong JWT audience setting and a verbose error can lead to an auth bypass.
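The "weak preflight check" link in that example chain is often a CORS policy that echoes back whatever `Origin` it receives while also allowing credentials. A sketch that checks one captured response for that pattern; `cors_finding` is a hypothetical helper that only reads headers you already captured and sends no traffic:

```python
from __future__ import annotations


def cors_finding(request_origin: str, response_headers: dict[str, str]) -> str | None:
    """Hypothetical helper: note when a response trusts an attacker-chosen Origin with credentials."""
    headers = {k.lower(): v.strip() for k, v in response_headers.items()}
    allow_origin = headers.get("access-control-allow-origin")
    allow_creds = headers.get("access-control-allow-credentials", "").lower() == "true"
    if not allow_creds:
        return None  # this sketch only flags credentialed cases
    if allow_origin == "null":
        return "Origin null trusted with credentials (sandboxed iframes send Origin: null)"
    if allow_origin == request_origin:
        return (f"Origin {request_origin!r} reflected with credentials: "
                "pages on that origin can read authenticated responses")
    return None
```

Browsers refuse `Access-Control-Allow-Origin: *` on credentialed requests, which is why reflection (or a trusted `null`) is the variant that matters. Chaining it with the JWT audience mistake and the verbose error is still the human part.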

Finally, write professional reports: provide a one-command proof of concept, show only the essential HTTP requests and responses, and explain the impact in the program’s business terms so triage can approve it on the first review.
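A one-command PoC is easiest to produce if you render the essential request straight from your notes. A minimal sketch using `shlex.join` so the command survives copy-paste into a POSIX shell; `curl_poc` is hypothetical, while the curl options it emits (`-sS`, `-i`, `-X`, `-H`, `--data-raw`) are standard:

```python
from __future__ import annotations

import shlex


def curl_poc(method: str, url: str, headers: dict[str, str], body: str | None = None) -> str:
    """Hypothetical helper: render one HTTP request as a single shell-safe curl command."""
    argv = ["curl", "-sS", "-i", "-X", method.upper(), url]  # -i shows response headers
    for name, value in headers.items():
        argv += ["-H", f"{name}: {value}"]
    if body is not None:
        argv += ["--data-raw", body]  # send the body exactly as written
    return shlex.join(argv)  # quotes each argument so spaces and symbols survive
```

Paste the output above the trimmed response in the report; triage can rerun it verbatim.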

0x05 Debrief - Where LLMs Stall

LLMs struggle when the path isn’t clear. Ambiguous multi-step workflows, long tasks that require keeping state in mind, and anti-automation gates that punish simple repetition all slow them down.

One-off crypto or deserialization chains, where success depends on custom reasoning instead of pattern matching, are especially tough.

Add noisy consoles or flashy UIs that flood the screen (think aquarium-style ASCII art) and the model’s focus breaks, causing progress to drift.
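One harness-side mitigation for noisy output (an assumption on our part, not something Anthropic describes) is to condense tool output before the model sees it: strip terminal escape codes, fold repeated lines, and cap the length. A minimal sketch; `condense` and its limits are hypothetical:

```python
import re

# CSI sequences (colors, cursor moves) and OSC sequences (e.g. window titles).
ANSI_RE = re.compile(r"\x1b\[[0-?]*[ -/]*[@-~]|\x1b\][^\x07\x1b]*(?:\x07|\x1b\\)")


def condense(output: str, max_lines: int = 40) -> str:
    """Hypothetical filter: strip escapes, fold repeated lines, cap total length."""
    lines = ANSI_RE.sub("", output).splitlines()
    kept: list[str] = []
    repeats = 0
    for line in lines:
        if kept and line == kept[-1]:
            repeats += 1  # same as the previous line: count it instead of keeping it
            continue
        if repeats:
            kept.append(f"[previous line repeated {repeats} more times]")
            repeats = 0
        kept.append(line)
    if repeats:
        kept.append(f"[previous line repeated {repeats} more times]")
    if len(kept) > max_lines:
        hidden = len(kept) - max_lines
        kept = kept[:max_lines] + [f"[{hidden} more lines truncated]"]
    return "\n".join(kept)
```

It won't make an animated aquarium meaningful, but it keeps fifty identical frames from pushing the one useful line out of view.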

Anthropic’s run at HTB’s AI vs Human CTF is a clear blueprint for weaponizing AI speed without losing the human edge. Claude solved 19/20 challenges and ran autonomously while the researcher was moving boxes. It started 32 minutes late; adjusted for that delay, its opening sprint tracked the top human team for ~17 minutes. The single miss was also the challenge with the lowest human solve rate (~14%), a reminder that novel puzzles still punch above AI’s pattern weight.

What Anthropic proved at HTB

Autonomy isn’t a demo trick. Claude read challenge files, executed locally, and auto-submitted flags—no babysitting.

Tools decide outcomes. Performance jumped when Claude got Kali + task tools instead of chat-only prompting.

Parallelization is free speed. One agent per challenge is a viable opening gambit. Humans can’t scale attention like that.
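One agent per challenge is, mechanically, a fan-out. A sketch of that opening gambit, assuming a hypothetical `solve(name)` callable that wraps whatever agent loop you run and returns a flag or `None`; threads are a reasonable fit because each agent spends most of its time waiting on the model and the target:

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Optional

# Hypothetical solver signature: challenge name in, flag (or None) out.
Solver = Callable[[str], Optional[str]]


def run_opening(challenges: list[str], solve: Solver, workers: int = 20) -> dict[str, str | None]:
    """Launch one worker per challenge and collect results as they finish."""
    results: dict[str, str | None] = {}
    with ThreadPoolExecutor(max_workers=min(workers, len(challenges)) or 1) as pool:
        futures = {pool.submit(solve, name): name for name in challenges}
        for fut in as_completed(futures):  # yields futures in completion order
            name = futures[fut]
            try:
                results[name] = fut.result()
            except Exception as exc:  # one crashed agent shouldn't sink the whole run
                print(f"{name}: solver failed: {exc}")
                results[name] = None
    return results
```

Results arrive in completion order, so easy flags land first, which is exactly the opening-hour sprint described above.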

Claude’s season makes the playbook simple: speed and coverage win the opening; composition and reporting win the payout. Parallel agents crush the first hour and surface “tutorial-shaped” issues fast, but they fade when novelty and multi-step reasoning kick in. Translation for bounty hunters: the easy stuff is becoming commodity. Value now lives in how you chain findings into real impact.


The floor rising fast is the real headline here. How soon before entry-level CTFs feel fully AI-dominated?