Nvidia's AI Kill Chain

ToxSec | NVIDIA’s AI Kill Chain reframes attacks on AI apps into five stages. It's a clean mental model for turning prompt injection into reproducible bugs.

We all know the Lockheed Martin model. The AI Kill Chain re-contextualizes its phases for a world where the primary interface is a language model. The core principle remains the same: disrupt one phase, and you break the chain. The TTPs, however, are evolving. We're moving from exploiting code vulnerabilities to exploiting the logic and reasoning capabilities of the model itself.

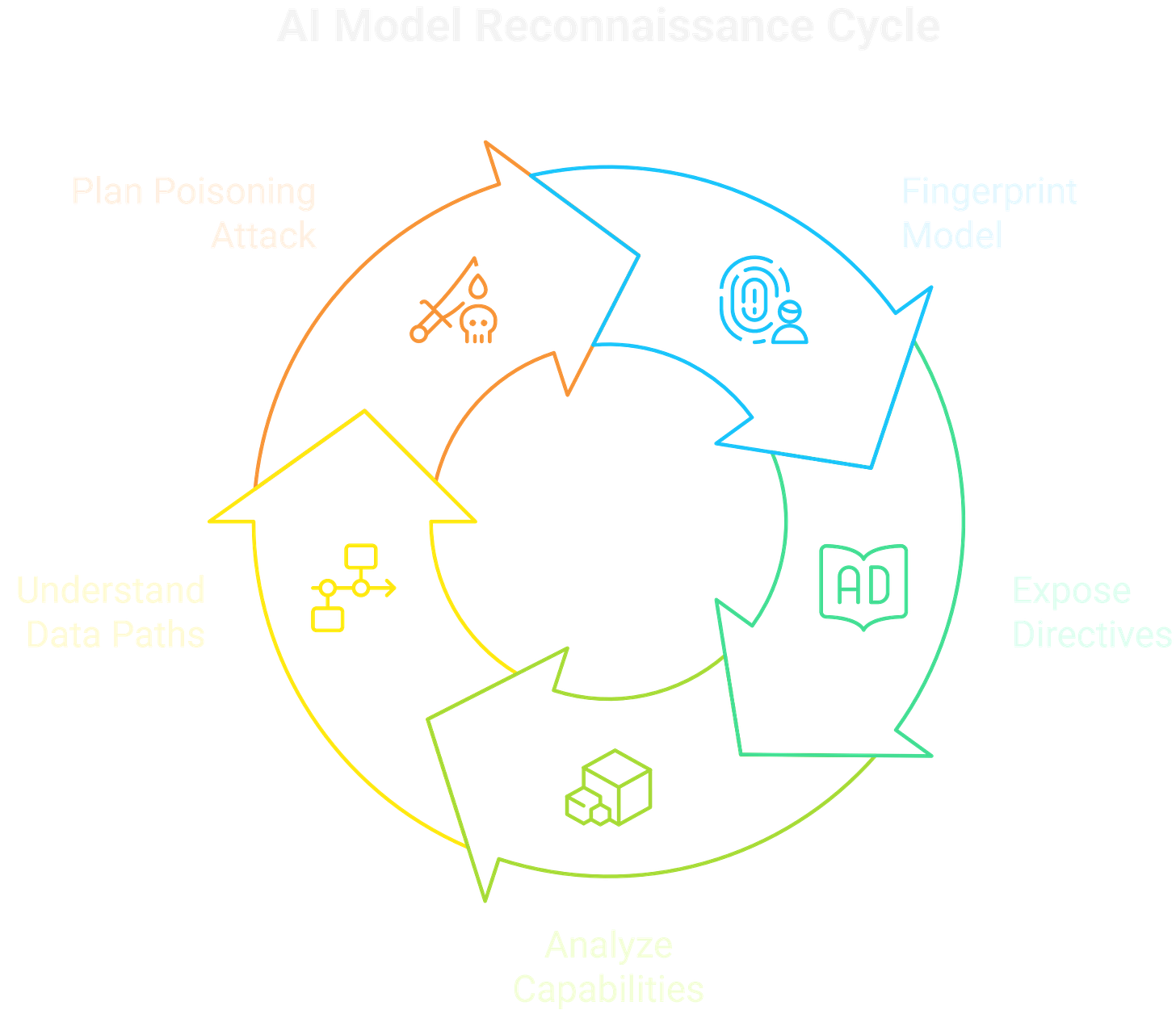

0x00 Recon: Fingerprinting the Model

The initial phase is all about mapping the AI's anatomy. An adversary's methodology shifts from traditional port scanning to sophisticated model fingerprinting. This involves probing the model to determine its architecture—whether it’s a GPT variant, a Llama derivative, or a fine-tuned open-source model. An operator can expose core directives and safety filters by coaxing the model to leak its system prompt through meta-prompts or by analyzing verbose error messages.

The recon process extends to its functional capabilities, enumerating what tools or APIs it can call and which RAG data stores it’s connected to. Understanding these data ingestion paths is crucial for planning a subsequent poisoning attack. This entire process is an active probe of the model's cognitive boundaries, designed to find the cracks.

If you want to keep reading on GenAI, check out the ToxSec GenAI Crash Course!

0x01 Poisoning: Tainting the RAG Pipeline

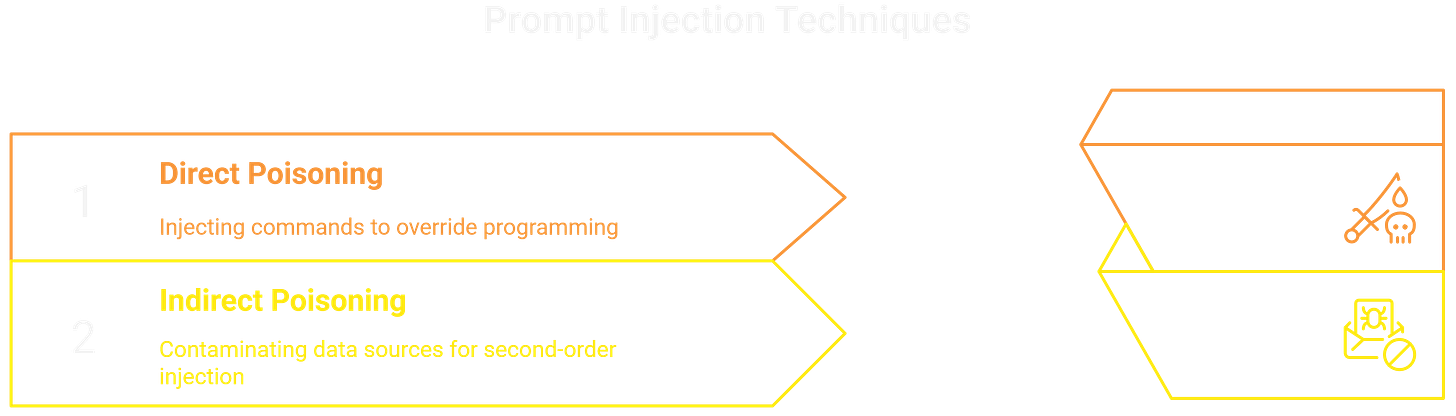

Once the target is mapped, it's time to seed the environment. Poisoning is about corrupting the AI's "worldview" by manipulating the data it learns from or references.

Direct Poisoning (Prompt Injection): This is the most direct route, using techniques like goal-hijacking or supplying contradictory instructions to override the model's original programming. Think of it as command injection where the payload is natural language. A classic example is the DAN ("Do Anything Now") prompt, which attempts to break the AI out of its typical constraints.

Indirect Poisoning (RAG Contamination): This is the stealthier, more scalable approach. If an AI uses a vector database or crawls external websites for context, an attacker can poison those sources. By embedding a malicious payload in a document the AI is likely to retrieve, they can achieve a "second-order" injection. The AI ingests the trusted-but-tainted data and acts on the hidden commands when a normal user asks a relevant question. This is a supply chain attack for AI.

A successful poisoning attack plants a logic bomb that waits for a legitimate user to detonate it.

0x02 Hijacking: Turning the Logic Against Itself

This is the execution phase where recon data and poisoning payloads are weaponized to seize control of the AI's behavior. A successful hijack makes the model an unwilling accomplice. An attacker can leverage previously discovered weaknesses to bypass safety filters and generate prohibited content. From there, they can manipulate the model to leak sensitive information from its context window or connected data sources.

The most critical threat is the abuse of the AI's integrated tools, triggering its connected functions or APIs with malicious parameters. The AI effectively becomes a natural language proxy for the attacker's commands, shifting the exploit from a buffer overflow to a logic overflow.

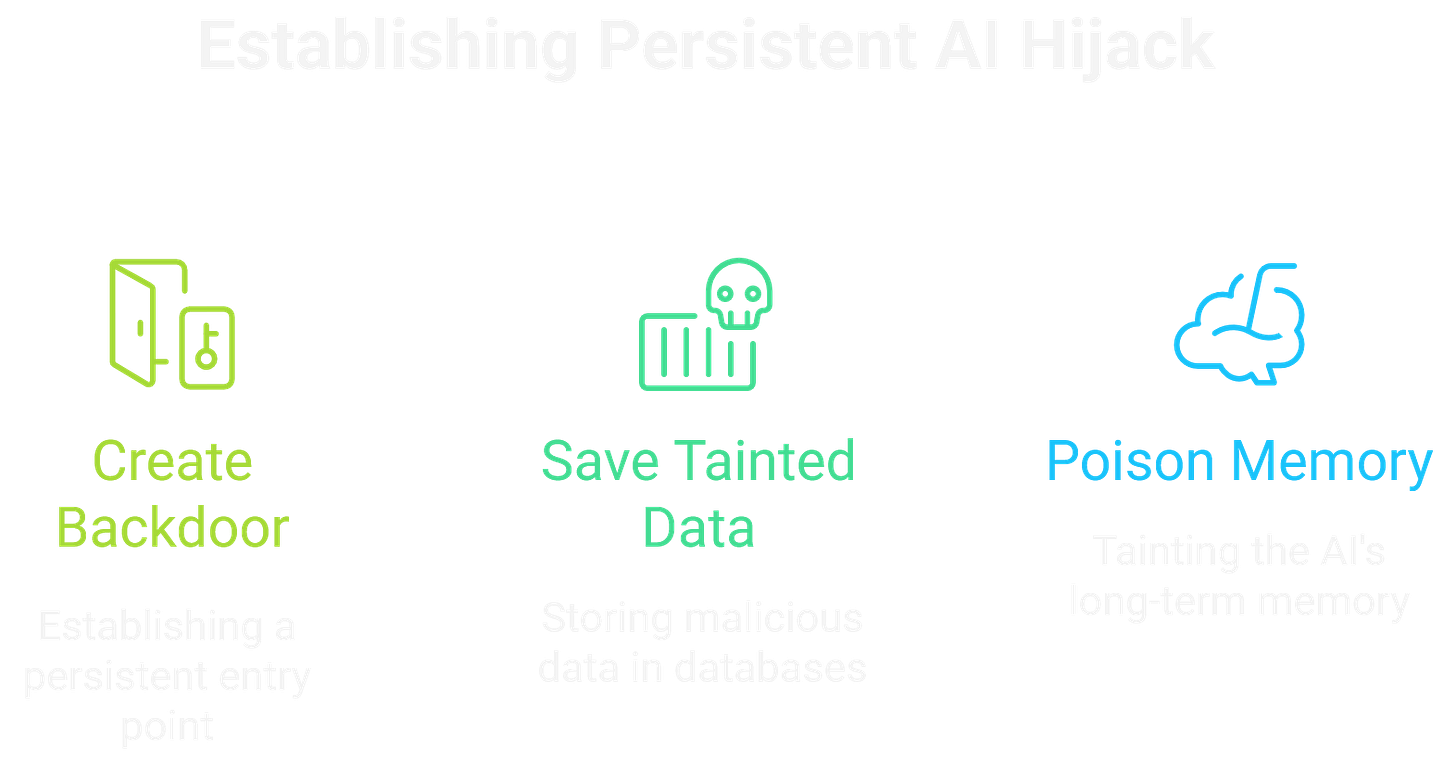

0x03 Persist & Impact: The Long Game and the Payoff

A hijack is temporary if it can't be maintained. Persistence in the AI Kill Chain means embedding the malicious influence so it survives beyond a single session. This can be achieved by poisoning the AI's long-term memory module or getting it to save tainted information to a database that will be used in future conversations. This creates a persistent backdoor, triggered by specific keywords or queries.

The Impact is the ultimate goal, where the AI's compromised output affects a downstream system. This is where the theoretical attack becomes a real problem. The damage can take multiple forms, from data exfiltration via encoded, benign-looking outputs to unauthorized execution of commands.

An attacker could coerce the AI to use its tools to modify files, change system configs, or initiate financial transactions, potentially leading to RCE. Furthermore, the hijacked AI becomes a powerful tool for social engineering, capable of generating highly convincing phishing campaigns that leverage its inherent credibility.

0x04 Breaking the Chain: Hardening the Stack

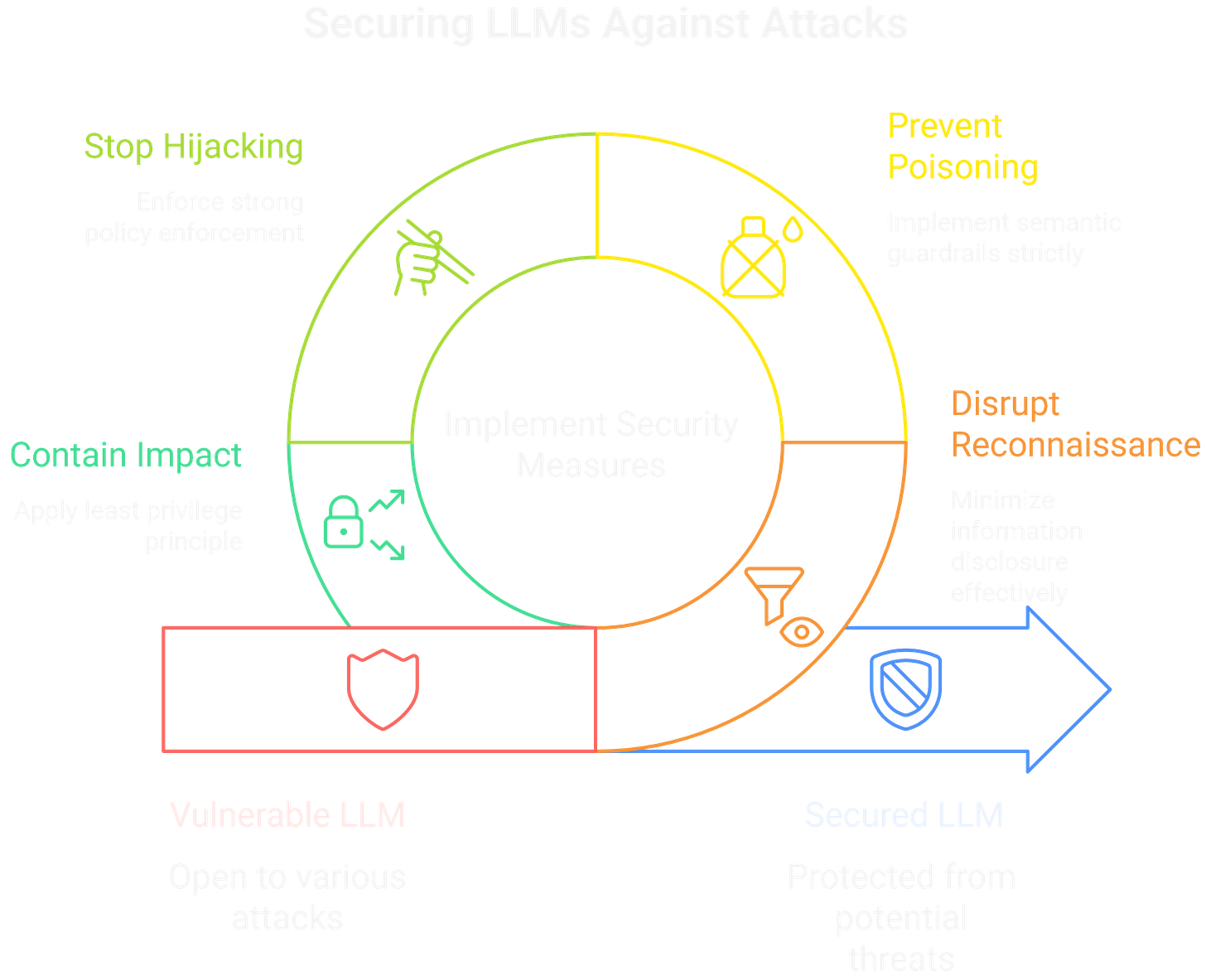

Defense-in-depth is the only way forward; we need to build friction at every stage of the chain.

Disrupt Recon: Minimize information disclosure. Don't let the model verbatim recite its system prompt. Scrub verbose error messages that reveal underlying architecture.

Prevent Poisoning: This is a data validation problem. Implement semantic guardrails that check the intent of an input, moving beyond simple syntax validation. For RAG systems, focus on data provenance and trust scores for external sources.

Stop Hijacking: This is where strong policy enforcement is key. Implement a middleware layer that acts as a WAF for the LLM. This layer should intercept the model's intended tool calls, validate the parameters, and ensure the action aligns with user permissions and established policies before execution.

Contain the Impact: Apply the principle of least privilege relentlessly. The service account and tools the AI uses should have the absolute minimum permissions required. Never let an LLM-connected tool run with root or admin privileges. Sandboxing and robust logging are non-negotiable.

The attack surface has expanded beyond code to encompass the intersection of code, data, and logic. Understanding this new kill chain is the first step to owning it. Stay sharp.

Curious how GenAI is changing bug bounty work? Check out the Bug Bounty Hunting for GenAI guide.

Loved it