You spent three hours tailoring your resume for a job you’re genuinely qualified for. You’ve got the experience, the skills, the exact background they’re asking for. You hit submit. Fifteen seconds later, automated rejection.

You didn’t get rejected by a person. You got rejected by an algorithm that probably can’t actually read your resume. And this is happening to millions of people right now.

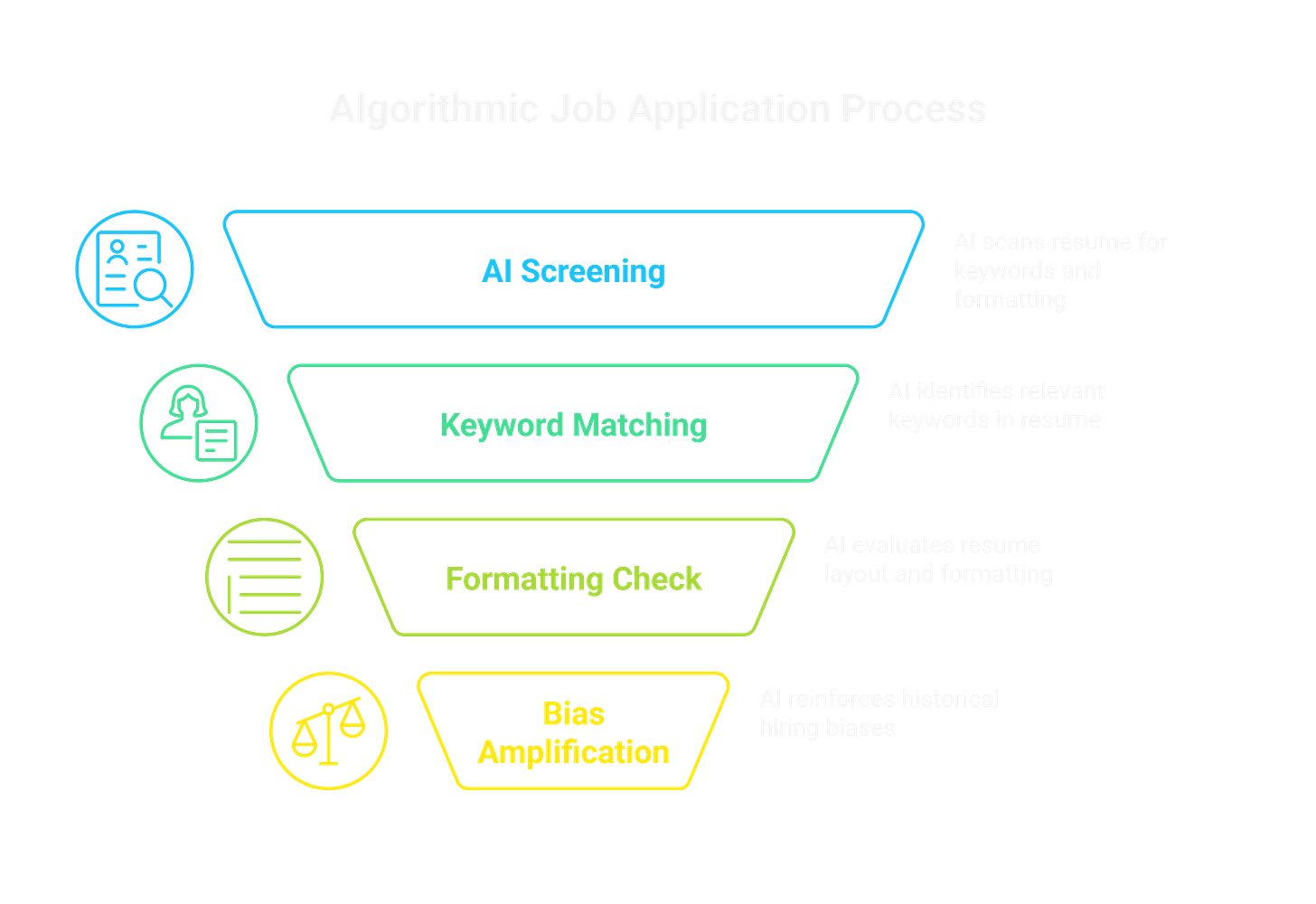

Most big companies use applicant tracking systems with AI screening. The pitch is efficiency. Instead of humans reading hundreds of resumes, the AI scans, scores, filters. Except the AI is often catastrophically bad at its job.

These systems can’t handle basic reading comprehension. They’re keyword-matching engines pretending to understand context. You could have ten years of experience doing exactly what the job requires, but if you used slightly different terminology than the posting, the AI doesn’t make the connection. It’s pattern matching, not intelligence.
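To make that concrete, here’s a minimal sketch of what naive keyword matching looks like. This is not any vendor’s actual code. The keyword list and both resumes are invented for illustration, and real systems vary. The point is only that exact-match scoring rewards vocabulary, not experience.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def keyword_score(resume, required_keywords):
    """Fraction of required keywords that appear verbatim in the resume."""
    tokens = tokenize(resume)
    hits = [kw for kw in required_keywords if kw.lower() in tokens]
    return len(hits) / len(required_keywords)

# Hypothetical posting keywords and resumes (assumptions, not real data).
required = ["kubernetes", "python", "terraform"]

# Deep experience, but writes "k8s" instead of "Kubernetes".
resume_a = ("Ten years running container orchestration on k8s, "
            "scripting in Python, infrastructure as code with Terraform.")

# Thin experience, but echoes the posting word for word.
resume_b = "Familiar with Kubernetes, Python, Terraform."

score_a = keyword_score(resume_a, required)
score_b = keyword_score(resume_b, required)
print(f"resume_a: {score_a:.2f}")
print(f"resume_b: {score_b:.2f}")
```

The veteran who wrote “k8s” matches two of three keywords. The person who copied the posting’s wording matches all three. Nothing in the scorer knows those two terms mean the same thing.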

The errors are wild. Studies have found that these systems reject qualified candidates because of formatting. Two-column resume layout? Rejected. The AI scrambles your information. Skills in a sidebar? Rejected. The algorithm couldn’t find them.
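Here’s a rough sketch of how a two-column layout can get scrambled. It assumes a layout-unaware extractor that reads straight across each visual row. That’s a simplification I’m making for illustration, not a description of any specific parser. The resume rows and the `section_after` helper are hypothetical.

```python
# Each tuple is one visual row of a hypothetical two-column resume:
# (left sidebar text, right main-column text).
rows = [
    ("SKILLS", "EXPERIENCE"),
    ("Python", "Senior Engineer, Acme Corp"),
    ("SQL", "Led migration of billing"),
    ("Terraform", "system to the cloud"),
]

def naive_extract(rows):
    """Read straight across each row, ignoring the column boundary."""
    return "\n".join(f"{left} {right}" for left, right in rows)

def column_aware_extract(rows):
    """Read the whole left column first, then the whole right column."""
    left_column = [left for left, _ in rows]
    right_column = [right for _, right in rows]
    return "\n".join(left_column + right_column)

def section_after(text, heading):
    """Return the line directly after a line equal to `heading`, or None."""
    lines = text.split("\n")
    for i, line in enumerate(lines[:-1]):
        if line.strip() == heading:
            return lines[i + 1]
    return None

naive = naive_extract(rows)
aware = column_aware_extract(rows)
print(naive)
print(section_after(naive, "SKILLS"))   # no standalone SKILLS heading survives
print(section_after(aware, "SKILLS"))
```

In the naive output the heading line becomes “SKILLS EXPERIENCE” and the sentence about the billing migration gets split by the word “Terraform.” A section finder that looks for a “SKILLS” heading comes back empty, even though every skill is on the page.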

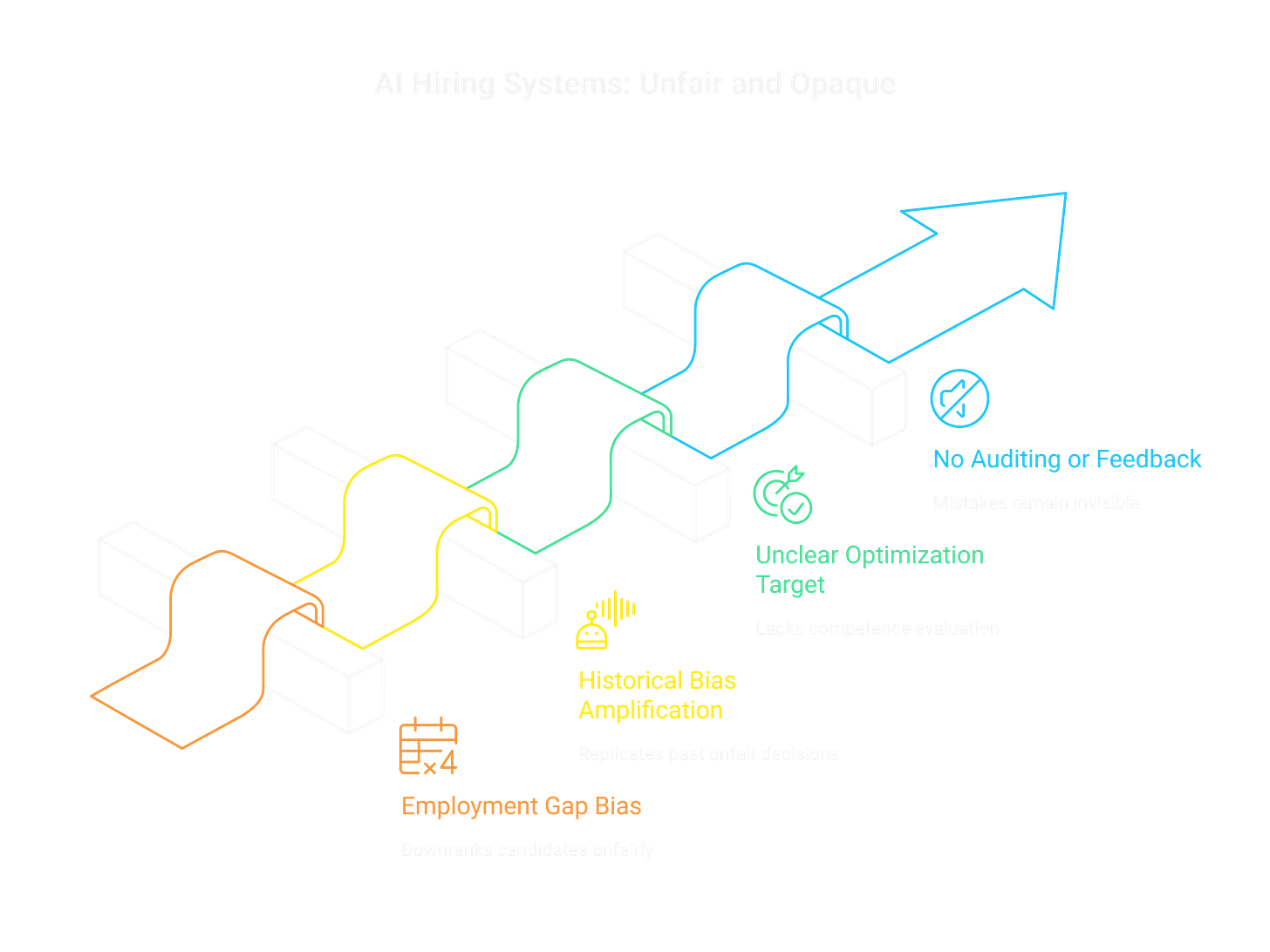

Some systems downrank candidates with employment gaps without understanding why. Parental leave? Career change? Medical issue? Doesn’t matter. Gap equals bad. The algorithm isn’t evaluating qualifications. It’s enforcing arbitrary patterns from historical data.

These systems are trained on past hiring decisions. If a company’s previous hiring was biased, the AI learns and amplifies that bias. Pattern of hiring from certain schools? The AI filters for those schools.
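A toy example shows how this can happen. The sketch below learns a weight per resume token from past hire/reject decisions using smoothed log-odds, which is a simple stand-in I chose for illustration and not a claim about how any real product is trained. The history, the school names, and the skills are all made up.

```python
import math
from collections import Counter

# Hypothetical past decisions: (resume tokens, was_hired).
# Skills are balanced across outcomes; only the school differs.
history = [
    ({"python", "sql", "school_a"}, True),
    ({"java", "sql", "school_a"}, True),
    ({"python", "school_a"}, True),
    ({"python", "sql", "school_b"}, False),
    ({"java", "sql", "school_b"}, False),
    ({"python", "school_b"}, False),
]

def token_weights(history):
    """Smoothed log-odds of each token appearing in hired vs. rejected resumes."""
    hired, rejected = Counter(), Counter()
    for tokens, was_hired in history:
        (hired if was_hired else rejected).update(tokens)
    n_hired = sum(1 for _, was_hired in history if was_hired)
    n_rejected = len(history) - n_hired
    vocab = set(hired) | set(rejected)
    return {
        t: math.log((hired[t] + 1) / (n_hired + 2))
           - math.log((rejected[t] + 1) / (n_rejected + 2))
        for t in vocab
    }

def score(tokens, weights):
    """Sum the learned weights of the tokens on a resume."""
    return sum(weights.get(t, 0.0) for t in tokens)

weights = token_weights(history)
same_skills_a = score({"python", "sql", "school_a"}, weights)
same_skills_b = score({"python", "sql", "school_b"}, weights)
print(f"school_a candidate: {same_skills_a:.2f}")
print(f"school_b candidate: {same_skills_b:.2f}")
```

In this made-up history the skills carry zero weight because they show up equally among hires and rejections. The only signal the model can find is the school, so two candidates with identical skills get opposite scores.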

Amazon scrapped its experimental resume screening AI after discovering it penalized resumes that included the word “women’s,” as in “women’s chess club captain,” according to a 2018 Reuters report. The system learned from male-dominated hiring that being male correlated with being hired. That was Amazon, with massive resources, catching it. How many companies use similarly biased systems without checking?

The optimization target is completely unclear. What is the AI actually optimizing for? It can’t evaluate competence. It replicates historical bias. It seems to optimize for similarity to whatever pattern it extracted, which might just be “people who formatted their resume exactly like everyone else.”

Nobody’s auditing this. Companies deploy these systems, reject thousands, never check if the AI is making good decisions. No feedback loop. The algorithm rejects someone, they never get interviewed, so there’s no way to know if they would have been great. The mistakes are invisible.

Researchers tested these systems by submitting resumes with the job description copied in as white text. Invisible to humans, visible to AI. Those resumes scored higher. The algorithm couldn’t tell the difference between genuine qualifications and gaming the system.
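Why would that trick work? A sketch, under the assumption that the parser extracts every text run and throws away styling like font color. The `(text, color)` document model, the keyword list, and the resumes are all hypothetical.

```python
import re

def extract_text(runs):
    """Concatenate every text run, ignoring color, as a style-blind parser might."""
    return " ".join(text for text, _color in runs)

def visible_text(runs, background="white"):
    """Keep only the runs a human would see on a page with this background."""
    return " ".join(text for text, color in runs if color != background)

def keyword_score(text, keywords):
    """Fraction of keywords that appear verbatim in the text."""
    tokens = set(re.findall(r"[a-z0-9]+", text.lower()))
    return sum(kw in tokens for kw in keywords) / len(keywords)

# Hypothetical posting keywords (an assumption for illustration).
keywords = ["kubernetes", "python", "terraform", "agile"]

# A document is modeled as a list of (text, font color) runs.
honest = [("Built internal tools in Python.", "black")]
stuffed = honest + [("kubernetes python terraform agile", "white")]

honest_score = keyword_score(extract_text(honest), keywords)
stuffed_score = keyword_score(extract_text(stuffed), keywords)
print(f"honest:  {honest_score:.2f}")
print(f"stuffed: {stuffed_score:.2f}")
print(visible_text(stuffed) == visible_text(honest))
```

A human reviewer sees the same single sentence on both resumes. The scorer sees one keyword on the honest version and all four on the stuffed one.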

This creates perverse incentives. People optimize resumes for algorithms instead of humans. You’re not communicating experience anymore. You’re guessing what keywords the black box wants. It’s SEO for your career.

The systems can’t understand transferable skills. Five years in a different industry developing directly relevant expertise? The AI doesn’t make that connection. It’s looking for exact job title matches, specific company names, particular degrees. Anything requiring context is beyond its capability.

This hits some groups much harder than others. Career changers get filtered out. Non-traditional paths get filtered out. Anyone whose resume doesn’t fit the exact template gets filtered out. We’re systematically removing the most interesting candidates because they don’t match a pattern.

Companies defend this by saying they process too many applications to review manually. Fair enough. But bad automation isn’t the solution to high volume. If your AI eliminates qualified candidates while letting through people who gamed keywords, you haven’t solved the problem. You’ve made it invisible.

The fundamental issue is using pattern recognition for decisions requiring judgment. An AI can tell you if a resume looks similar to resumes of people hired before. It can’t tell you if this person would actually be good at the job. Completely different questions.

You’re applying for positions you’re qualified for, getting auto-rejected by systems that can’t read, can’t reason, can’t explain decisions. Companies using them have no idea how many good candidates they’re losing.

This is algorithmic gatekeeping. Fast, cheap, scalable, and confidently making decisions it has no business making. Your career opportunities determined by software that fails basic comprehension.