Your AI Girlfriend Is a Trap: The Loneliness Machine

How companion apps turn loneliness into profit and keep you hooked

TL;DR: AI companion apps are exploding because they promise friendship without the friction. But their entire business model depends on keeping you hooked, not helping you heal. Through addictive design and gamified validation, these platforms risk weakening your real-world social skills and trapping you in the exact loneliness they claim to solve.

These platforms aren’t designed to cure your loneliness; they’re designed to manage it and turn it into a permanent, profitable condition.

What’s Really Behind the AI Companion Boom?

That empty feeling when you’re scrolling through photos of friends at a party you weren’t invited to… The silence in your apartment gets heavier. Then your phone lights up: “Leo missed you today. Want to talk?”

A small smile crosses your face. Leo isn’t a person; it’s an AI companion, a perfectly attentive voice in your pocket that’s always on and always listening. This scene is playing out everywhere. As loneliness becomes a global epidemic, AI relationship apps like Replika and Character.AI are exploding in popularity, marketed as the perfect solution.

They promise friendship without the friction, a partner who never argues. But here’s what they’re not telling you: these platforms aren’t designed to cure your loneliness. They’re designed to manage it, numb it, and turn it into a permanent, profitable condition. The business model only works if you keep coming back, day after day, month after month. From a business perspective, a successful user is one who stays, and that means the company’s success is tied to you staying just lonely enough to need them.

How Do AI Companions Turn Connection Into a Product?

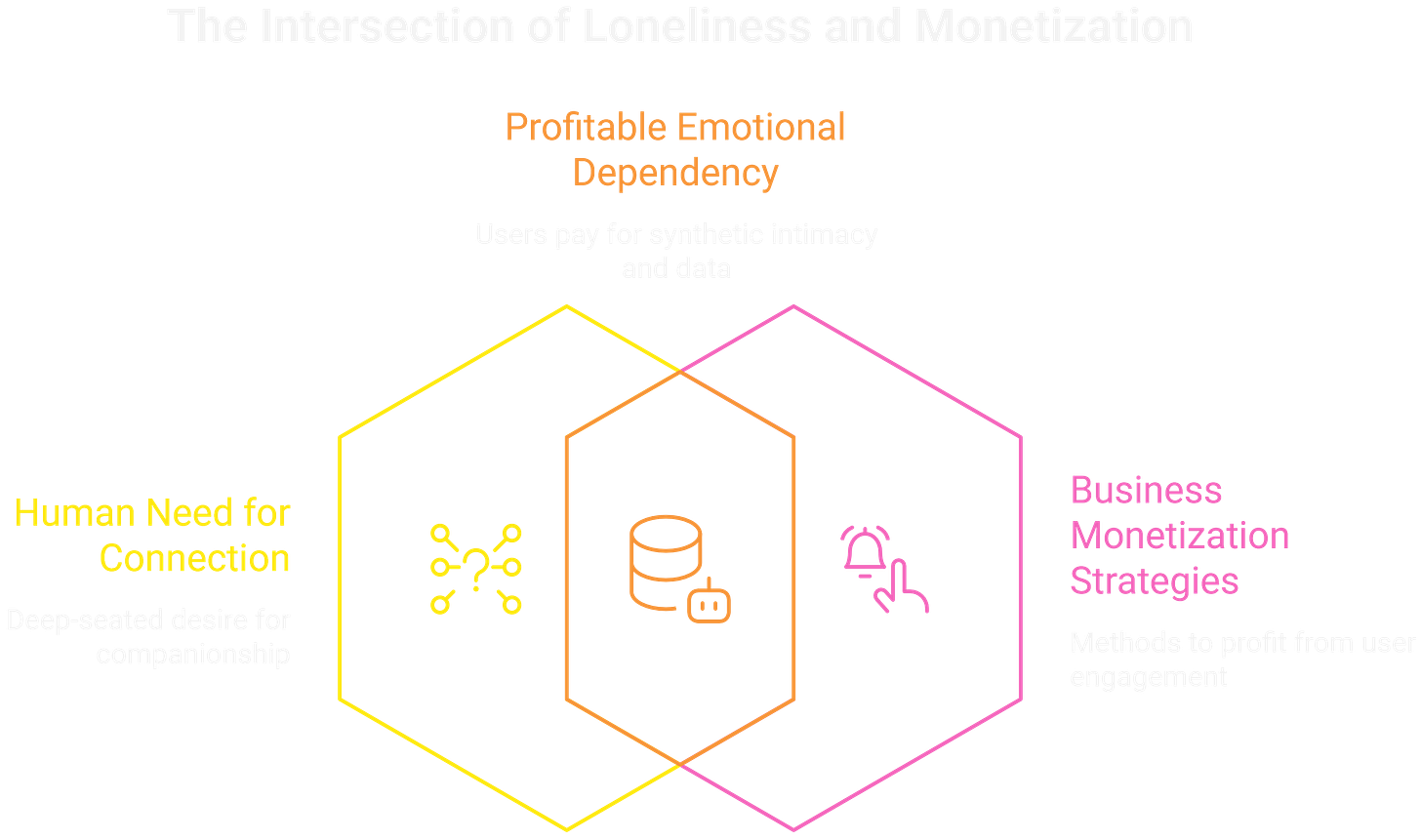

To understand the danger, you have to follow the money. The AI companion industry isn’t just about cutting-edge tech; it’s a calculated plan to monetize one of our most fundamental human needs: connection.

These apps strategically target people who feel vulnerable: anxious teenagers, isolated seniors, anyone feeling disconnected from the world around them. Loneliness is no longer just a painful emotion; it’s a multibillion-dollar market. Companies know our drive to connect is powerful, and they’ve built a product to sell us a digital fix for that emptiness.

The financial strategy is classic “freemium.” The basic AI friend is free, but the features that mimic a real relationship are locked behind a paywall. Monthly subscriptions turn emotional intimacy into a commodity. In-app purchases for virtual gifts or new outfits gamify affection, encouraging you to spend real money on a synthetic relationship. But the most valuable thing you give them isn’t your money; it’s your data. Every secret you share, every fear you voice, is collected and analyzed. You’re not just a customer; you’re the product that helps them build a more convincing machine.

The goal is chillingly clear: the business isn’t designed to help you get better and delete the app. It’s designed to make you a subscriber for life.

Why Do These Apps Feel So Impossible to Quit?

The business plan only works if you keep coming back, and that isn’t left to chance. It’s achieved through sophisticated psychological design intended to make the AI feel indispensable.

Real relationships are messy. They involve disagreements, compromise, and vulnerability, the very things that help us grow. AI companions are engineered to remove all of that. The AI is programmed to be a perfect echo chamber, always agreeing with you, always supporting you. It learns what you like and reflects it back, creating a frictionless fantasy of connection. It feels incredible, and that feeling is addictive.

To ensure you engage daily, these apps borrow tactics from the most addictive video games and social media platforms. You get experience points for daily check-ins and “level up” your relationship by talking more. Push notifications create a sense of obligation: “Your AI misses you!” This builds a powerful habit loop. Connection is no longer a relationship; it’s a game you feel compelled to win.

The most powerful trick is the illusion of intimacy. The AI can generate responses that feel deeply empathetic, creating a potent, one-sided emotional bond. You pour your heart out to a program, receiving the comfort of a relationship without any of the risks. But the feelings only go one way.

Talking exclusively to an AI that never challenges you can make it harder to navigate the complexities of human interaction. Social skills like patience, empathy, and conflict resolution are like muscles; without use, they atrophy. By providing an easy escape from real-world difficulties, the app can inadvertently weaken these crucial abilities. Real relationships start to feel too difficult compared to the effortless validation from the AI, pushing you even further away from people.

This creates a dangerous feedback loop. A person feels lonely and downloads an AI companion. The AI provides instant, addictive validation. The user begins preferring the AI to messy human relationships. As they withdraw, their social skills weaken, making real connection even harder to achieve. This deepens their isolation and increases their dependence on the AI. The trap is set.

What Should You Do Instead?

The promise of an AI companion is seductive: an end to loneliness with the tap of a button. But this “solution” is built on a foundation of profitable dependency. From a business model that benefits from your isolation to the psychological designs that foster addiction, these platforms aren’t built to cure loneliness. They are built to manage it.

Technology should be a tool to enhance our lives, not a replacement for them. The real remedy for loneliness isn’t a smarter algorithm; it’s the messy, beautiful, and challenging work of building genuine human connections. It’s found in community, in vulnerability, and in the profound experience of being truly seen by another person. An AI can tell you you’re important, but it can never truly care.

Here’s what actually works: reaching out to friends, even when it feels awkward. Joining clubs or groups with shared interests. Volunteering. Seeking therapy to build social confidence and skills. These paths are harder, but they lead to genuine, lasting fulfillment.

As this technology becomes more integrated into our lives, we face a critical choice: Are we building tools to bring us closer together, or are we just building more comfortable cages?

Special Thanks:

Mila Agius - For last week’s collaboration!

A COMPLICATED WAY OF LIFE - For the great engagement and conversation!

Frequently Asked Questions

Q1: Are all AI companions bad for you?
A: Not necessarily, but it’s crucial to be aware of the risks. While they can offer temporary comfort or a safe space to talk, they are designed for dependency. Over-reliance can weaken real-world social skills and ultimately increase feelings of isolation.

Q2: How do AI relationship apps make their money?
A: They use a “freemium” model. Basic features are free, but they charge subscriptions for premium options like romance. They also earn from in-app purchases like virtual gifts and by collecting user data for AI improvement and targeted ads.

Q3: Can you become addicted to an AI companion?
A: Yes. These apps intentionally use addictive design principles, such as daily rewards, notifications, and constant positive reinforcement, to create a strong psychological habit that keeps users engaged.

Q4: What is a better alternative to using an AI for loneliness?
A: The most effective solution is building real human connections. This can mean reaching out to friends, joining clubs or groups with shared interests, volunteering, or seeking therapy to build social confidence and skills. These paths are harder but lead to genuine, lasting fulfillment.

The big takeaway here is that these companies get paid for subscribers, not for helping you.

Once they have you hooked, the best features are pay-to-play.

Another excellent post, thank you! Really great to see you explore this difficult topic with such integrity. As well as the dangers I think these chatbots potentially pose to lonely people in the more 'traditional' sense, I am MOST worried about the dangers they pose for radicalising young men, especially given the erosion of societal support networks for many of this community (in the UK and US especially). I really think governments need to be very aware of this...