AI Gold Rush? The Real Money is in Selling Shovels.

Why Nvidia, AWS, and infrastructure companies will capture 80% of AI’s wealth while chatbot startups burn through billions.

TL;DR: Everyone’s racing to build the next ChatGPT killer. Meanwhile, the real money is being minted by the companies selling compute, cloud storage, and GPUs. The AI gold rush isn’t about who builds the best LLM. It’s about who owns the shovels everyone needs to dig.

For every AI startup that succeeds, a hundred will fail. But every single one will pay Nvidia and AWS along the way.

Why Does Every AI Startup Look the Same?

First, a disclaimer: this is not financial advice, only my research and opinion. I am not a financial professional. Do your own research for your financial situation.

With that said, I bet your LinkedIn feed is drowning in AI startup announcements. Every founder claims they’re “revolutionizing” customer service, or sales, or content creation, or whatever vertical sounds hot this quarter. But scratch the surface and they’re all running on the same infrastructure. They’re paying the same cloud bills. They’re using the same foundation models. They’re fighting over the same customers with nearly identical products.

Think back to the California Gold Rush of 1849. Thousands of miners flooded into California chasing fortunes. Most of them went broke. The ones who actually made money? Levi Strauss sold dry goods (the famous jeans came later). Sam Brannan sold shovels and pickaxes. They didn’t need to find gold. They just needed miners to keep showing up.

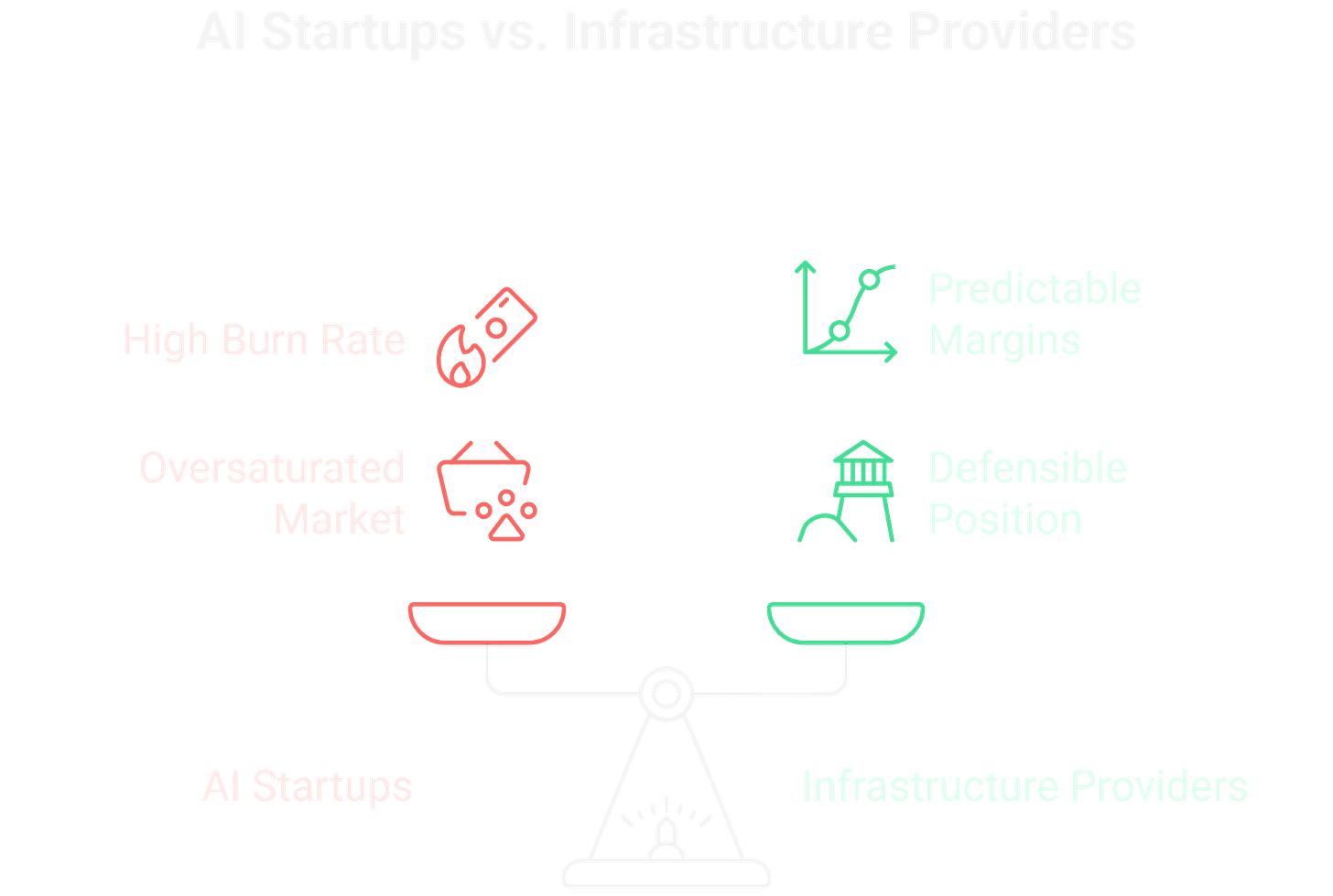

The modern AI boom works exactly the same way. The application layer is brutal, commoditized, and oversaturated. ChatGPT wrappers, AI writing tools, generic customer service bots: they’re all racing toward zero margins. A typical AI startup burns $50,000 to $500,000 monthly on compute alone. They’re hemorrhaging cash to Nvidia, AWS, and Azure before they’ve signed their first customer.

Meanwhile, Nvidia’s stock is up over 200% in two years. AWS keeps posting record cloud revenue. These companies don’t care which AI app wins. They get paid either way. That’s where the real wealth is accumulating, in the infrastructure layer where margins are predictable and defensibility actually exists.

Who Actually Makes Money When AI Companies Raise Billions?

Follow the money. When an AI startup raises $100 million, where does that capital actually flow? The answer should terrify any investor betting on the application layer.

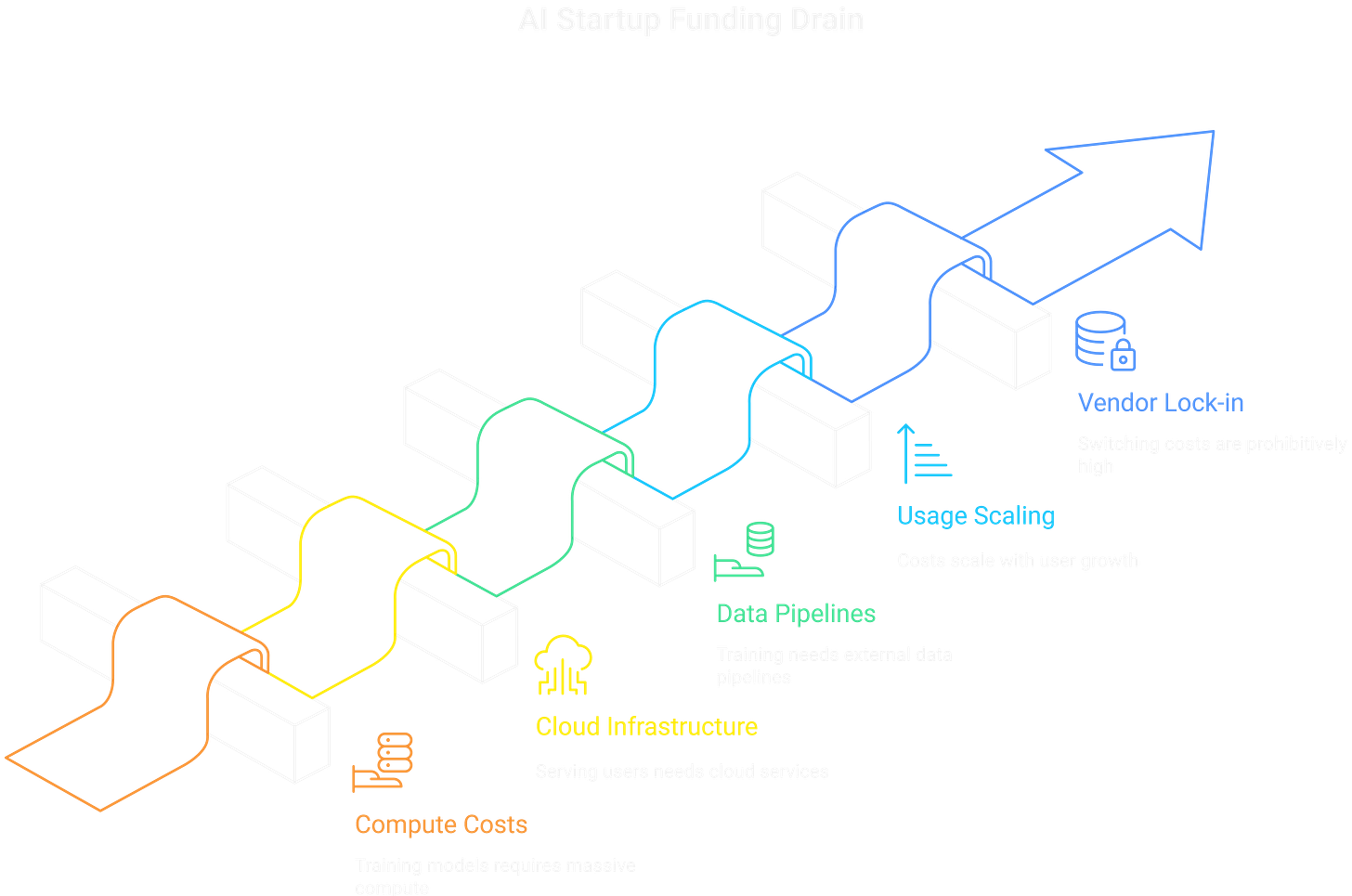

Anthropic reportedly spends over $500 million annually on AWS bills alone. OpenAI’s compute costs to run ChatGPT are estimated at $700,000 per day. Nvidia’s H100 GPUs cost $25,000 to $40,000 each, have 6 to 12 month waitlists, and get sold in clusters of hundreds. The infrastructure providers get paid first, they get paid always, and they get paid whether the startup succeeds or fails.
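To put those figures on a common scale, here is a minimal back-of-the-envelope sketch. It simply annualizes the estimated $700,000 daily ChatGPT compute cost and prices out a GPU cluster at the $25,000 to $40,000 per-H100 range quoted above. The 500-GPU cluster size is a hypothetical stand-in for “hundreds,” not a figure from any real purchase, and the helper names are mine.

```python
DAYS_PER_YEAR = 365


def annualize(daily_cost: float) -> float:
    """Convert a daily cost estimate into a yearly one."""
    return daily_cost * DAYS_PER_YEAR


def cluster_cost(gpu_count: int,
                 unit_low: float = 25_000,
                 unit_high: float = 40_000) -> tuple[float, float]:
    """Return the (low, high) hardware cost of a GPU cluster.

    Unit prices default to the H100 range quoted in the article.
    """
    return gpu_count * unit_low, gpu_count * unit_high


if __name__ == "__main__":
    # $700,000 per day is the article's estimate for ChatGPT compute.
    print(f"Annualized compute: ${annualize(700_000):,.0f}")

    # 500 GPUs is an assumed cluster size, chosen only for illustration.
    low, high = cluster_cost(500)
    print(f"500-GPU cluster: ${low:,.0f} to ${high:,.0f}")
```

Under those assumptions, the daily estimate works out to roughly $255 million a year, and a single 500-GPU cluster lands between $12.5 million and $20 million in hardware before power, networking, or staff.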

Infrastructure costs are the largest line item for AI companies, often 40% to 60% of total spend. These aren’t optional expenses. You can’t build an LLM without massive compute. You can’t serve millions of users without cloud infrastructure. You can’t train models without data pipelines running on someone else’s servers.
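Here is a rough sketch of what that 40% to 60% share means for the $100 million raise mentioned earlier. It assumes, purely for illustration, that the entire raise eventually gets spent and that the infrastructure share stays within the quoted range for the life of the company. The function name is hypothetical.

```python
def infra_spend_range(total_spend: float,
                      low_pct: int = 40,
                      high_pct: int = 60) -> tuple[float, float]:
    """Return the (low, high) dollars going to infrastructure.

    Percentages default to the 40% to 60% range cited in the article.
    """
    return total_spend * low_pct / 100, total_spend * high_pct / 100


if __name__ == "__main__":
    # Assumption: the full $100M raise is eventually spent.
    raise_amount = 100_000_000
    low, high = infra_spend_range(raise_amount)
    print(f"Infrastructure share of ${raise_amount:,.0f}: "
          f"${low:,.0f} to ${high:,.0f}")
```

On those assumptions, $40 million to $60 million of a $100 million round ends up with compute and cloud providers, regardless of how the startup itself fares.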

The costs scale with usage too, creating predictable recurring revenue for infrastructure providers. Every new user your AI app acquires means more API calls, more tokens processed, more storage needed. The VCs might lose their shirts on your Series B. But AWS already got paid for the compute you burned through trying to find product-market fit.

Switching costs make this lock-in even stronger. Once you’ve trained models on AWS infrastructure, built pipelines on their tools, and integrated their services into your stack, migrating becomes a six-figure nightmare. Companies stay put and keep paying.

This is the hidden wealth transfer happening in AI.

Which Infrastructure Plays Are Actually Worth Your Money?

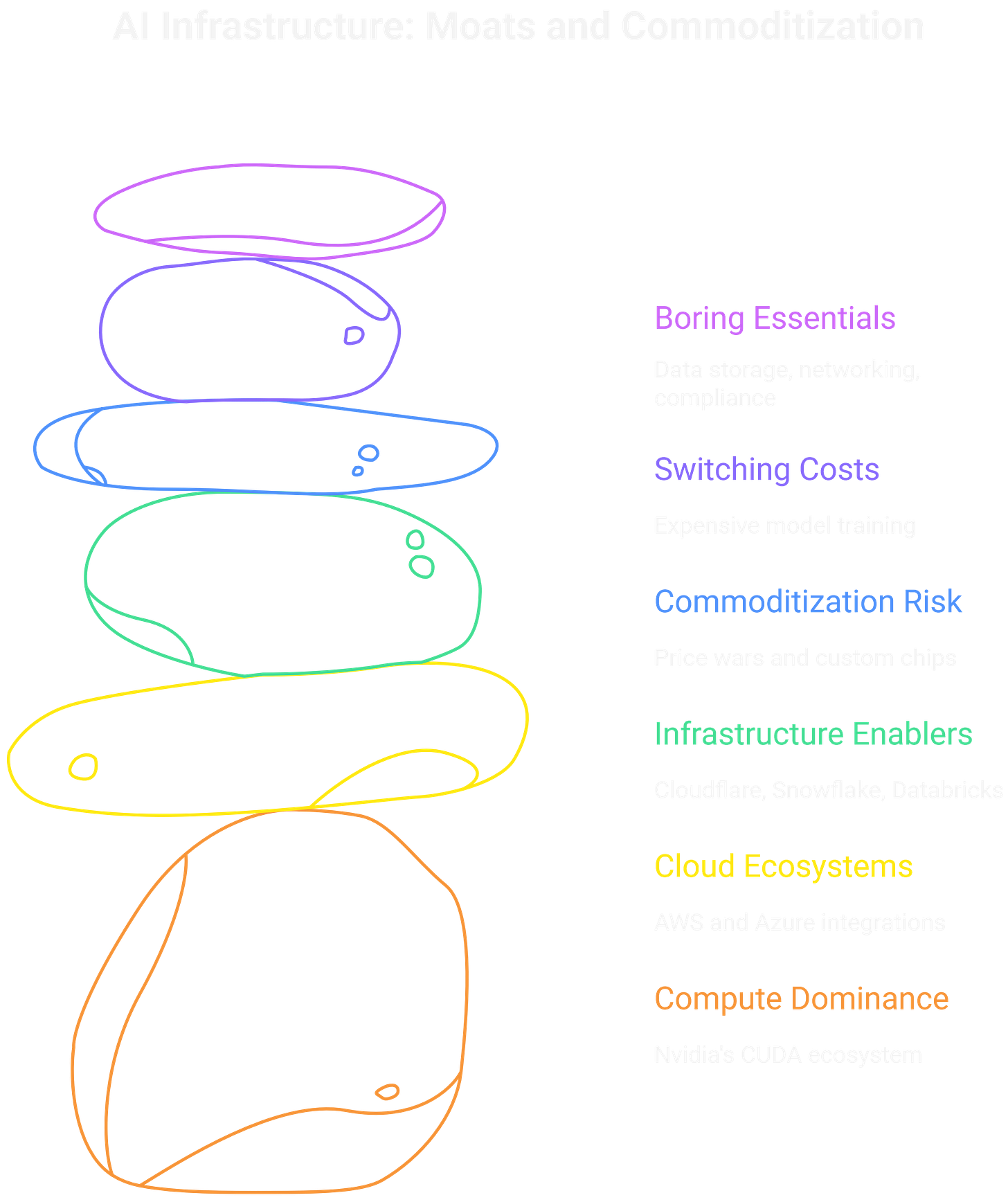

Not all pickaxe sellers are created equal. The AI infrastructure stack has layers, and some companies have real moats while others are one price war away from commoditization.

Start with compute. Nvidia dominates because of CUDA, their software ecosystem that gives them a 3 to 5 year lead over competitors. AMD makes cheaper GPUs, but developers have spent a decade building on CUDA. Rewriting everything for AMD would cost more than just paying Nvidia’s premium. That’s a real moat.

Cloud platforms are the next tier. AWS isn’t just selling compute anymore. They’ve built entire ecosystems around AI: Bedrock for foundation models, SageMaker for training, a whole suite of tools that make it easier to stay than to leave. Microsoft Azure has similar advantages through tight Office 365 and enterprise integrations. Google Cloud is fighting hard but still trailing in AI-specific tooling.

Then you’ve got the infrastructure enablers. Cloudflare is positioning itself as the “AI CDN” for serving models at scale. Snowflake and Databricks own critical pieces of the data infrastructure that feeds AI systems. These aren’t as sexy as Nvidia, but they’re capturing real, recurring revenue.

Here’s the contrarian take though: infrastructure does get commoditized eventually. We’ve seen cloud price wars. We’re seeing GPU competition heat up with custom chips from Google, AWS, and startups. But this cycle is different because of switching costs. Training a foundation model costs tens of millions of dollars. Companies don’t casually move that workload to save 10% on compute. The ecosystem lock-in is stronger than past infrastructure cycles.
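The switching-cost argument can be sketched as a simple payback calculation: how many months of a 10% compute discount does it take to recover a one-time migration cost? The monthly compute figures below reuse the $50,000 to $500,000 burn range from earlier in this piece. The $500,000 migration cost is a hypothetical “six-figure” number picked for illustration, and the model ignores engineering downtime and retraining risk, which would only lengthen the payback.

```python
def payback_months(migration_cost: float,
                   monthly_compute: float,
                   savings_pct: float = 10) -> float:
    """Months of savings needed to recoup a one-time migration cost.

    savings_pct is the discount the new provider offers on compute.
    """
    monthly_savings = monthly_compute * savings_pct / 100
    if monthly_savings <= 0:
        raise ValueError("savings must be positive to ever pay back")
    return migration_cost / monthly_savings


if __name__ == "__main__":
    migration_cost = 500_000  # hypothetical six-figure migration
    for monthly_compute in (50_000, 500_000):
        months = payback_months(migration_cost, monthly_compute)
        print(f"${monthly_compute:,.0f}/month compute: "
              f"{months:.0f} months to break even")
```

With these made-up inputs, the smaller spender needs about 100 months to break even and the larger one about 10. For most startups that is longer than their planning horizon, which is the point: a 10% discount rarely justifies the move.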

The boring plays often win in infrastructure. Data storage, networking, compliance tooling: these aren’t headline-grabbers, but they’re essential and sticky. If you’re building a portfolio, overweight the companies that every AI app needs, not the apps themselves.


What to Actually Do With This Information

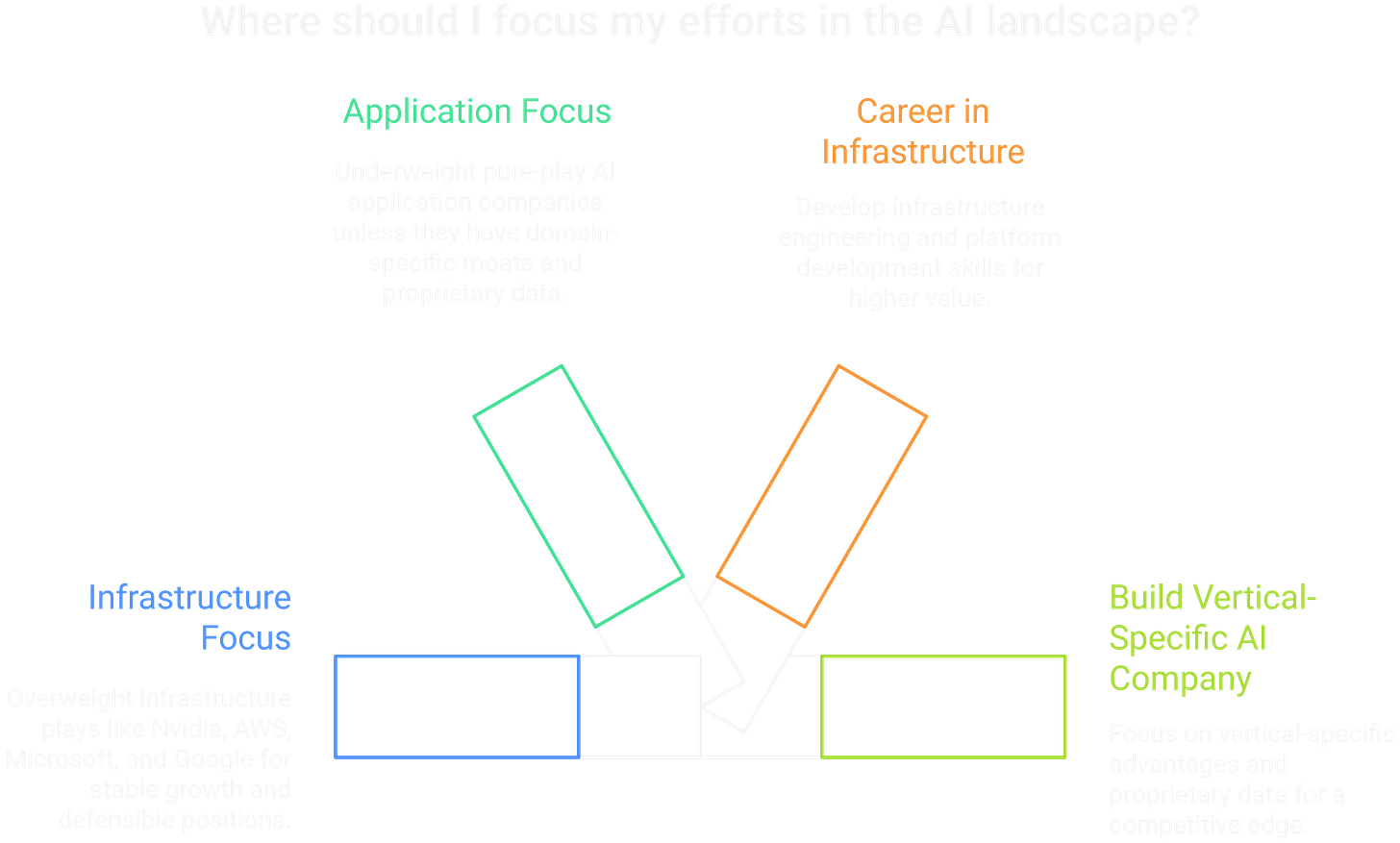

This isn’t just portfolio advice. Understanding where value accrues in AI changes how you make career decisions, business decisions, and investment decisions. The AI revolution is real, but the winners won’t be the ones flooding your social feeds with launch announcements.

Think about what’s coming next. Custom chip development is accelerating. Google’s TPUs and AWS Trainium are real attempts to break Nvidia’s monopoly. They won’t succeed overnight, but they’ll put pressure on margins eventually. Open source models like Llama and Mistral are changing the economics too, potentially reducing the moat around proprietary foundation models. But even in that world, someone still has to provide the compute and infrastructure.

Then there’s sovereign AI. Countries are waking up to the fact that AI infrastructure is strategic. We’re going to see massive government spending on national AI capabilities over the next decade. That’s billions more flowing into infrastructure.

So what should you actually do? If you’re building a portfolio, overweight infrastructure plays like Nvidia, AWS (via Amazon), Microsoft, and Google. Underweight pure-play AI application companies unless they have domain-specific moats and proprietary data. If you’re thinking about your career, infrastructure engineering and platform development skills will be more valuable than prompt engineering. If you’re building an AI company, focus ruthlessly on vertical-specific advantages and proprietary data, not generic AI capabilities.

The most important mindset shift: stop asking “what AI app should I build?” and start asking “what infrastructure gap exists?” The app layer is overcrowded. The infrastructure layer still has white space and defensible positions.


Frequently Asked Questions

Q1: Isn’t Nvidia already overvalued? Did I miss the opportunity?

A: Everyone said AWS was “too late” in 2015 and Amazon stock has 5x’d since then. Valuation matters less than moat when adoption is still early. AI inference scaling hasn’t even started yet. Enterprise adoption is in innings one or two. Sovereign AI spending is just beginning. Nvidia’s P/E ratio might look high, but forward-looking demand supports years of growth. The real question isn’t whether Nvidia is expensive today, it’s whether their CUDA moat can hold against custom chip competition. So far, it’s holding fine.

Q2: What about all the companies trying to build “Nvidia killers”?

A: AMD, Google’s TPUs, AWS Trainium, and a dozen startups are all trying. Here’s why it’s harder than it looks: CUDA isn’t just hardware, it’s an entire software ecosystem that developers have spent a decade mastering. Libraries, tools, tutorials, Stack Overflow answers, all built around CUDA. Switching means rewriting code, retraining teams, and debugging new toolchains. That’s a minimum of 3 to 5 years of friction even if the competitor’s hardware is technically equal or better. Hardware alone isn’t enough.

Q3: Should I invest in AI application companies at all?

A: It’s not a blanket no, but the bar is high. You want vertical-specific solutions with proprietary data moats, not horizontal tools anyone can build. Healthcare AI with unique patient data partnerships? Maybe. Enterprise workflow tools with deep integrations and switching costs? Possibly. Generic “AI writing assistant” or “AI customer service chatbot”? Probably not unless they have distribution or data advantages. Acknowledge that 95% of AI app companies will fail or get acquired at mediocre returns. The winners will be the exception, not the rule.

Q4: What if AI progress slows down or hits a wall?

A: Infrastructure companies have less exposure to AI disappointment risk than pure-play AI apps. Cloud computing, data storage, and networking have value beyond AI. Even if we never get to AGI, current AI adoption trends can support 5 to 10 years of infrastructure growth. We’re still in early enterprise adoption. Most companies haven’t deployed AI at scale yet. That’s years of runway before we’d need another breakthrough to sustain growth. Plus, infrastructure revenue is more predictable because it’s usage-based and recurring.

Q5: Is this just “invest in big tech” with extra steps?

A: Fair question. The distinction is between big tech as a brand and big tech as an infrastructure play. Amazon’s retail business might face pressure, but AWS prints money with 30% operating margins. Microsoft’s legacy Windows business is shrinking, but Azure and Office 365 are growth engines. When you invest in these companies for infrastructure exposure, you’re betting on a specific business unit, not the whole conglomerate. The thesis is: AI will drive infrastructure spending regardless of which apps win, and a few companies are positioned to capture that spending. That’s different from generic “big tech always wins” thinking.

Finally someone is talking about why everyone and their grandma is starting an AI startup.

I didn’t get the metaphor/reference at first, and I thought you meant selling shovels to clean up the shit after the mess they make. 😅

I listened to the co-founder (ex-CEO?) of Stable Diffusion on a podcast recently and he talked about how as everything gets more efficient, cost will go down. He was talking more in regard to employers replacing different employees with AI and how that happens, but do you think that could impact investments?