The Voluntary Exfiltration Program

Your workforce is now an unpaid R&D department for everyone else

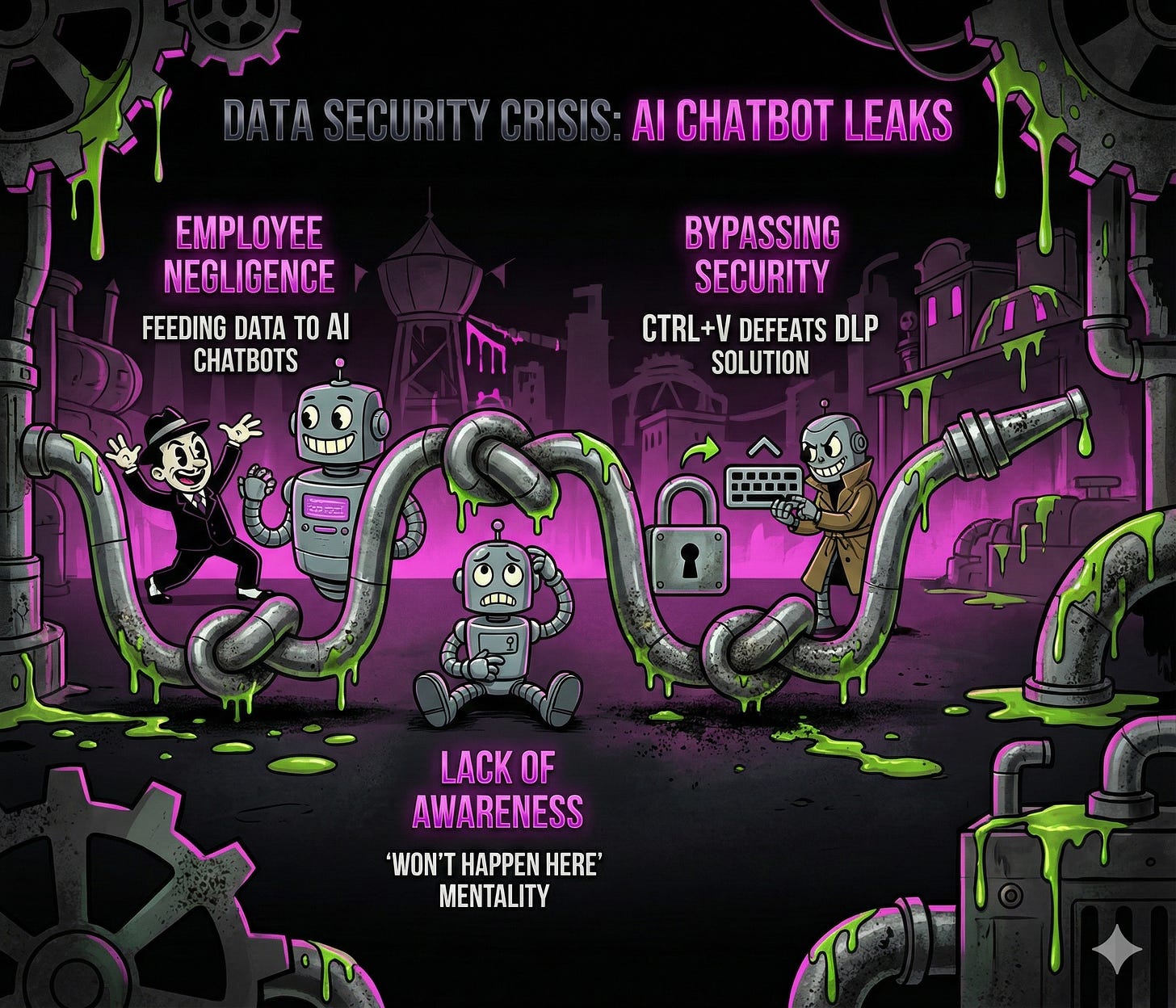

TL;DR: One in five data breaches now traces back to your own employees feeding company secrets to chatbots. We ran the numbers: that's a $670,000 premium per incident. You didn't get hacked. Your people VOLUNTEERED. And the best part? Those chatbots are training models your competitors use.

“We spent $14 million on data loss prevention. Ctrl+V defeated it. Find me whoever approved that budget. Tell them to bring a box.”

Who Authorized This Heist?

I had to look into our data security situation. Turns out we’ve been running a charity. FULL EXFILTRATION. One in five breaches now trace back to employees feeding proprietary information to AI chatbots like they’re tipping a valet. “Here’s our source code, here’s our product roadmap, here’s everything that gives us a competitive advantage. Keep the change!”

The lab boys tell me we're hemorrhaging data faster than a screen door on a submarine. Samsung engineers pasted proprietary source code and internal meeting notes into ChatGPT in three separate incidents in under a month. That was 2023. Everyone nodded sagely and said "won't happen here." It's happening HERE. It's happening EVERYWHERE. Eighteen percent of your workforce is pasting corporate data into these things on a regular basis. Half of those pastes contain sensitive information.

You know what defeated our $14 million DLP solution? CTRL+V. The keyboard shortcut. A child could do it. Children ARE doing it, probably, if you’ve got interns.

You. The one reading this on company time. Forward this to your security team before they find out the hard way.

How Much Did We Lose While the Bean Counters Were Counting Beans?

I asked the bean counters for a risk assessment. They gave me a spreadsheet. Forty-seven tabs. Color-coded by “concern level.” Very professional. Very thorough.

You know what’s NOT on their spreadsheet? The seventy-seven percent of AI traffic running through personal accounts. Personal devices. Personal browser sessions your security stack can’t see because it’s busy monitoring the APPROVED channels where NOTHING IS HAPPENING.

Thirty-eight percent of employees share confidential data with AI platforms without approval. The bean counters call this a "governance gap." I call it MUTINY. These people signed NDAs. They sat through the security training. They clicked "I agree" on fourteen different policy documents. And then they pasted the entire Q4 strategy into a chatbot because typing is HARD, apparently.

The premium for this particular brand of self-destruction? $670,000 per incident. The STUPIDITY TAX. That’s three years of asbestos removal. That’s a fleet of company cars. That’s the entire security budget you told me we couldn’t afford, torched because someone couldn’t be bothered to TYPE.

Still reading? Good. You’re already doing better than the people who caused this mess. Subscribe and I’ll tell you how it gets worse.

Congratulations: You’re Funding Your Competition’s R&D

Here’s where I need everyone to sit down.

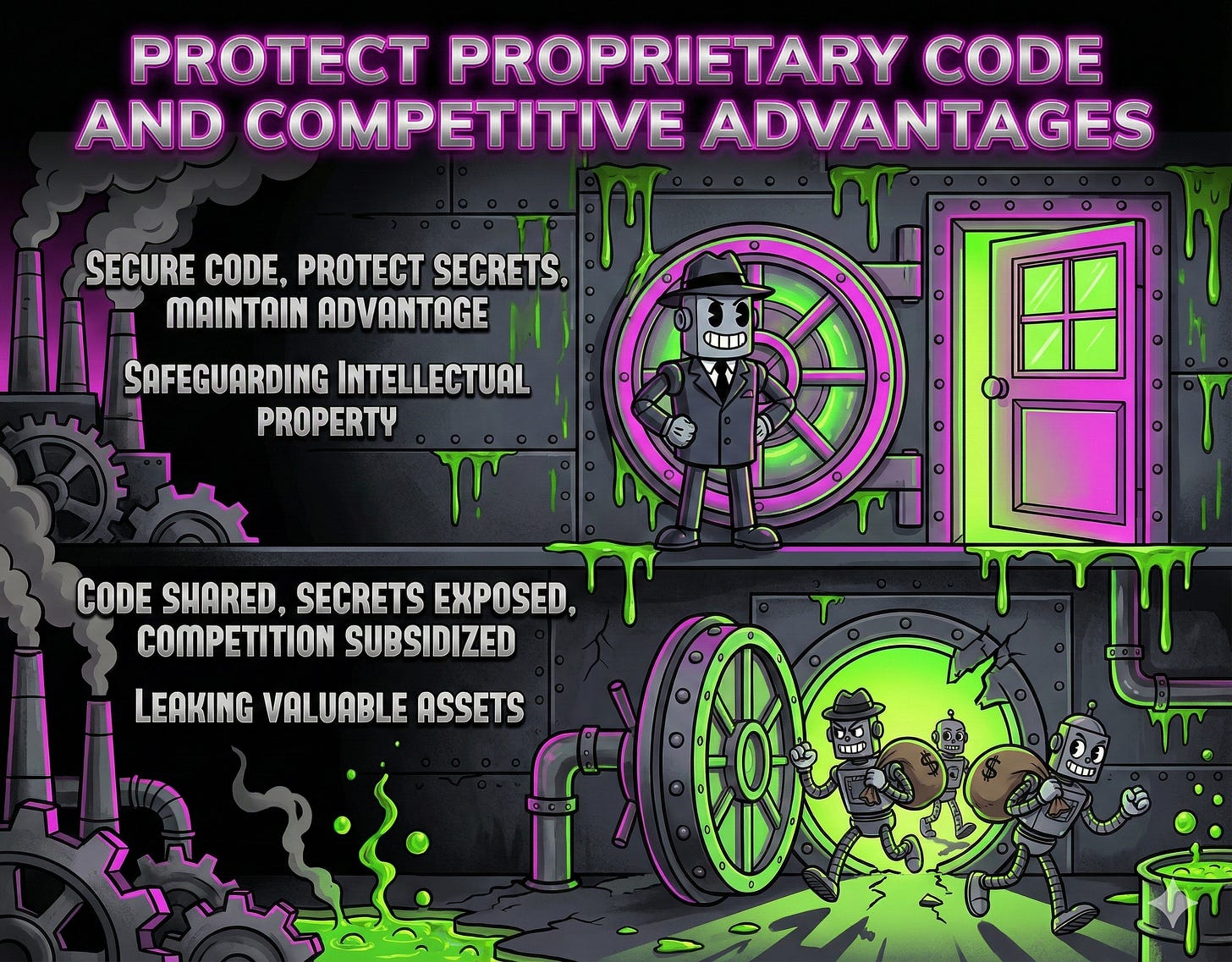

Those AI models train on SOMETHING. When your engineer pastes code into the chatbot to debug it, that code doesn’t evaporate. It lands on third-party servers. It enters training pipelines. It becomes part of the model that YOUR COMPETITORS ALSO USE.

Researchers found that GitHub Copilot can reproduce secrets it learned from its training data. API keys. Credentials. The model MEMORIZED them. It's autocompleting other people's passwords because someone fed it the answer key.

You paid for that R&D. Spent years developing proprietary methods. Hired expensive talent. Built competitive advantages. And now it’s being served up to everyone with a subscription. You’re running a SUBSIDY PROGRAM for your competition and you don’t even get a thank-you note.

Know a company still pretending this won’t happen to them? Send this. Consider it a public service. Or don’t. I’m not asking.

The Path Forward Is Paved With Termination Letters

Here are your two options. Neither involves keeping things the way they are.

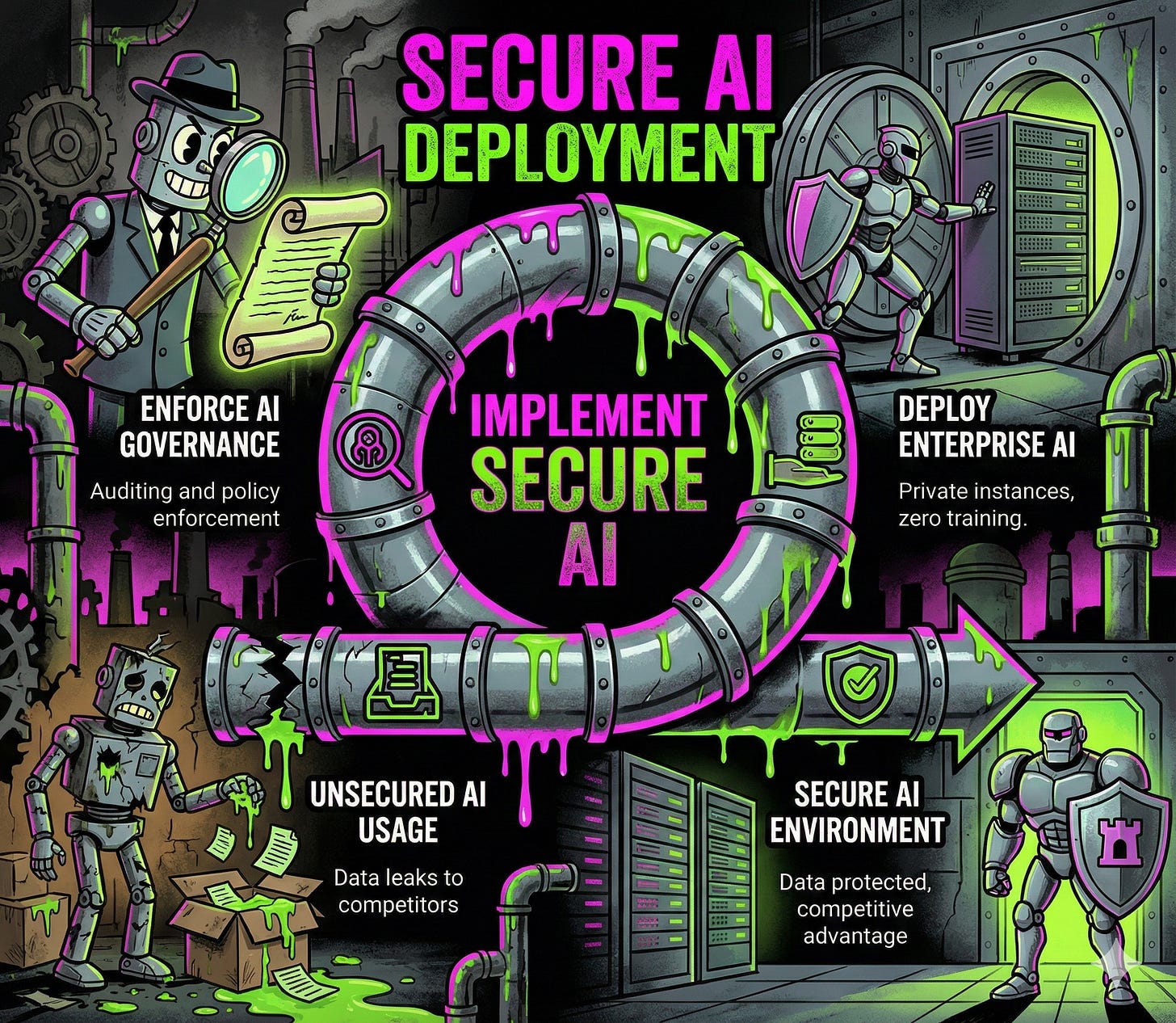

Option one: Deploy sanctioned enterprise AI with actual guardrails. Private instances. Zero training on inputs. Yes, it costs money. Less money than the stupidity tax. Infinitely less than watching your competitors ship products built on your IP.

Option two: Keep pretending policies work. Write another acceptable use document. Hold another training session. Watch the same people paste the same secrets into the same chatbots while your security team monitors approved channels where NOTHING HAPPENS.

Sixty-three percent of organizations don’t even have an AI governance policy. The ones who do aren’t auditing. You’re running a suggestion box for data thieves and the suggestions are ALL YOUR SECRETS.

Here’s the reality: you can’t compete with free and instant. Your employees WILL use AI. The question is whether they use YOUR AI or whether they keep feeding the competition’s training pipeline with your quarterly projections.

Pick up a sanctioned tool and follow the yellow line. You’ll know when the audit starts.

Grievances

“But we opted out of training!” Congratulations. The data STILL LEFT THE BUILDING. You handed it to a third party and asked nicely if they’d promise not to look. That’s a pinky swear. Frame it and hang it next to your incident report.

“Can’t we just block the website?” Some of you geniuses think this is a URL problem. We checked the logs. Seventy-seven percent of access runs through personal accounts on personal devices. Unless you’re planning to confiscate every piece of glass and silicon in a five-mile radius, you’ve already lost. Get over it.

“How do I know if my employees are doing this?” You don’t. That’s the point. Only thirty-seven percent of organizations can even detect shadow AI. The rest find out when someone else writes the breach report. Usually a journalist.