Model Collapse Is Already Polluting the Internet You Search

How recursive AI training degrades truth at scale, and why hallucinations are mathematically inevitable.

BLUF: Roughly three quarters of newly published webpages now contain AI-generated content. When tomorrow’s models train on today’s synthetic output, they lose grip on reality and drift toward bland statistical averages. Hallucinations aren’t bugs. They’re mathematically inevitable. And the infrastructure to prove what’s real is years behind the flood.

0x00: What Happens When AI Eats Its Own Output?

Here’s the setup. You scrape the internet to train a model. The model generates content. That content gets indexed. The next model scrapes it and trains on it. Repeat.

This is called model collapse, and it’s not theoretical. A 2024 Nature paper from Shumailov et al. showed exactly what happens when you run this loop. First, the model loses information from the tails of the distribution. Minority data vanishes. Rare perspectives disappear. That’s “early model collapse,” and here’s the nasty part: overall performance can actually appear to improve while you’re losing the edges.

Then comes late collapse. The distribution converges so hard it looks nothing like the original. The New York Times ran a visualization of this. They fed an AI its own output on handwritten numerals. After 20 generations? Blurry. After 30? Unintelligible smears.

    # Simplified model collapse demonstration
    # Each generation trains on previous generation's output
    for generation in range(30):
        synthetic_data = model.generate(n=10000)
        model = train_new_model(synthetic_data)
        # Variance decreases each iteration
        # Tail information lost first
    # By gen 30: statistical noise
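
The loop above is pseudocode, so here is a toy version you can actually run. It is a sketch under loud assumptions: the "model" is just a Gaussian refit to its own samples, and the sample size, generation count, and seed are arbitrary choices, not anything taken from the Nature paper. It only shows the mechanism: each refit loses a little spread, and the losses compound.

```python
import random
import statistics


def simulate_collapse(generations=300, sample_size=20, seed=0):
    """Refit a Gaussian to its own samples; return the fitted std per generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the "real" distribution
    history = [sigma]
    for _ in range(generations):
        # The current model generates purely synthetic data...
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...and the next model is "trained" on nothing else.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


history = simulate_collapse()
for gen in (0, 30, 100, 300):
    print(f"generation {gen:3d}: fitted std = {history[gen]:.3g}")
```

A shrinking standard deviation is the tails disappearing: values that were merely rare under the original distribution become effectively impossible a few dozen refits later.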

The kicker? This is happening to your search results right now.


0x01: How Much of the Internet Is Already Synthetic?

Let’s look at the numbers from 2025.

Ahrefs analyzed 900,000 newly created webpages in April 2025 and found that 74.2% contained AI-generated content. Graphite’s analysis put the figure at over 50% of all new articles. Google’s top 20 search results? 17.31% AI-generated as of September 2025, up from 11% in May 2024.

Europol’s projection: 90% of online content may be synthetic by 2026.

    # AI content saturation timeline (approximate)
    # 2022: ~10% (pre-ChatGPT baseline)
    # 2024: ~40% (rapid spike)
    # 2025: ~50-74% (current state)
    # 2026: ~90% (projected)

So the fresh web text available to next-generation models is already majority synthetic. The feedback loop is running. And unlike a real photocopy, you can’t tell you’re looking at a copy until the degradation is severe enough to notice.

Outstanding.

0x02: Why Can’t We Just Fix Hallucinations?

Here’s where it gets mathematically depressing.

A 2024 paper from Xu et al. dropped the hammer: “It is impossible to eliminate hallucination in LLMs.” Their proof? LLMs cannot learn all computable functions. They will therefore always hallucinate. Full stop.
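
The flavor of that argument is diagonalization: line up any list of models, then build a ground truth that disagrees with each one somewhere. The snippet below is a toy illustration of that idea only, not the paper's formal proof. The three "models" and the integer-valued "truth" are made up for the example.

```python
from typing import Callable, List

Model = Callable[[int], int]


def diagonal_truth(models: List[Model]) -> Callable[[int], int]:
    """Build a ground-truth function that model i gets wrong on input i."""
    def truth(i: int) -> int:
        if i < len(models):
            return models[i](i) + 1  # differ from model i on its own index
        return 0  # arbitrary value beyond the enumerated models
    return truth


# Hypothetical stand-ins for "every model you could enumerate".
models: List[Model] = [lambda x: 0, lambda x: x, lambda x: x * x]
truth = diagonal_truth(models)

for idx, model in enumerate(models):
    print(f"model {idx}: answers {model(idx)}, truth is {truth(idx)}")
```

However long you make the list, the same construction yields a question each model answers incorrectly. That is the sense in which the hallucination is baked in rather than patched out.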

The rates vary by task. General queries see 5% hallucination. Specialized professional questions? Up to 29%. And here’s the twist: the newer “reasoning” models that spend more time thinking? They hallucinate more on certain benchmarks. OpenAI’s o3 hit 33% on PersonQA. The o4-mini hit 48%.

More compute. More confidence. More fabrication.

Hallucination rates by model type (2025 benchmarks):

- General LLM queries: ~5%

- Specialized/professional: up to 29%

- o3 reasoning model (PersonQA): 33%

- o4-mini reasoning model (PersonQA): 48%

- o3/o4-mini (SimpleQA): even higher
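
Per-question rates understate the problem once an answer bundles several claims together. A back-of-the-envelope sketch, assuming (and this is a big assumption) that each claim fails independently at the same rate; the claim counts are illustrative, not measured.

```python
def p_at_least_one_error(per_claim_rate: float, n_claims: int) -> float:
    """Chance that at least one of n independent claims is hallucinated."""
    return 1.0 - (1.0 - per_claim_rate) ** n_claims


for rate in (0.05, 0.29):
    for n_claims in (1, 5, 20):
        p = p_at_least_one_error(rate, n_claims)
        print(f"rate {rate:.0%}, {n_claims:2d} claims -> P(any error) = {p:.1%}")
```

Real errors are not independent, so treat the output as intuition for why long, fact-dense answers deserve more checking than short ones, not as a measurement.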

In February 2025, Google’s AI Overview cited an April Fool’s satire as fact. The claim? That microscopic bees power computers. The system confidently presented parody as reality.

47% of enterprise AI users admitted to making at least one major business decision based on hallucinated content in 2024. Air Canada’s chatbot hallucinated a bereavement fare policy. A British Columbia tribunal held the airline to it anyway and ordered it to pay the customer for a policy that never existed.

The system is confident. The system is wrong. And there’s no reliable way to tell which is which without doing the work yourself.

0x03: How Does Misinformation Get Laundered Through AI?

NewsGuard ran a test on leading AI chatbots. Asked them about Russian influence operation Storm-1516. The chatbots repeated the disinformation narratives 32% of the time.

Here’s how the laundering works. Adversaries push fake narratives through Wikipedia edits, fake local news sites, fake “whistleblower” YouTube accounts. AI systems crawl these sources. The manufactured consensus gets baked into training data. Future models learn it as truth.

Information laundering pipeline:

1. Create fake source (news site, Wikipedia edit, social account)

2. Plant narrative with "pocket litter" details

3. Wait for AI crawlers to index

4. Narrative enters training data

5. Future models repeat it with confidence

6. Repetition creates perceived consensus

7. Humans see "multiple sources" and trust it
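
Steps 6 and 7 only work if nobody traces the citations back. A minimal sketch of that tracing, assuming you already know who copied from whom (in practice that is the hard part). Every source name and the `derived_from` map are hypothetical.

```python
def root_origin(source: str, derived_from: dict) -> str:
    """Follow 'copied from' links until reaching a source with no known parent."""
    seen = set()
    while source in derived_from and source not in seen:
        seen.add(source)  # guard against circular citations
        source = derived_from[source]
    return source


def independent_origins(citations, derived_from) -> set:
    """Collapse a pile of citations down to the distinct places they started."""
    return {root_origin(c, derived_from) for c in citations}


# Hypothetical lineage: three "sources" that all trace to one planted story.
derived_from = {
    "chatbot-answer": "aggregator-blog",
    "aggregator-blog": "fake-local-news.example",
    "video-transcript": "fake-local-news.example",
}
citations = ["chatbot-answer", "aggregator-blog", "video-transcript"]

origins = independent_origins(citations, derived_from)
print(f"{len(citations)} citations, {len(origins)} independent origin(s): {sorted(origins)}")
```

Three citations, one origin. "Multiple sources" is a count of copies until you have checked where each one came from.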

A European Parliamentary Research Service briefing from 2025 noted that AI-generated content overtook human-made content by November 2024. The report explicitly warned about “information laundering” through AI systems.

There’s no malice required. Just a prediction engine doing exactly what it was designed to do. Generate the statistically likely next token. If the training data says microscopic bees power computers, then microscopic bees power computers.

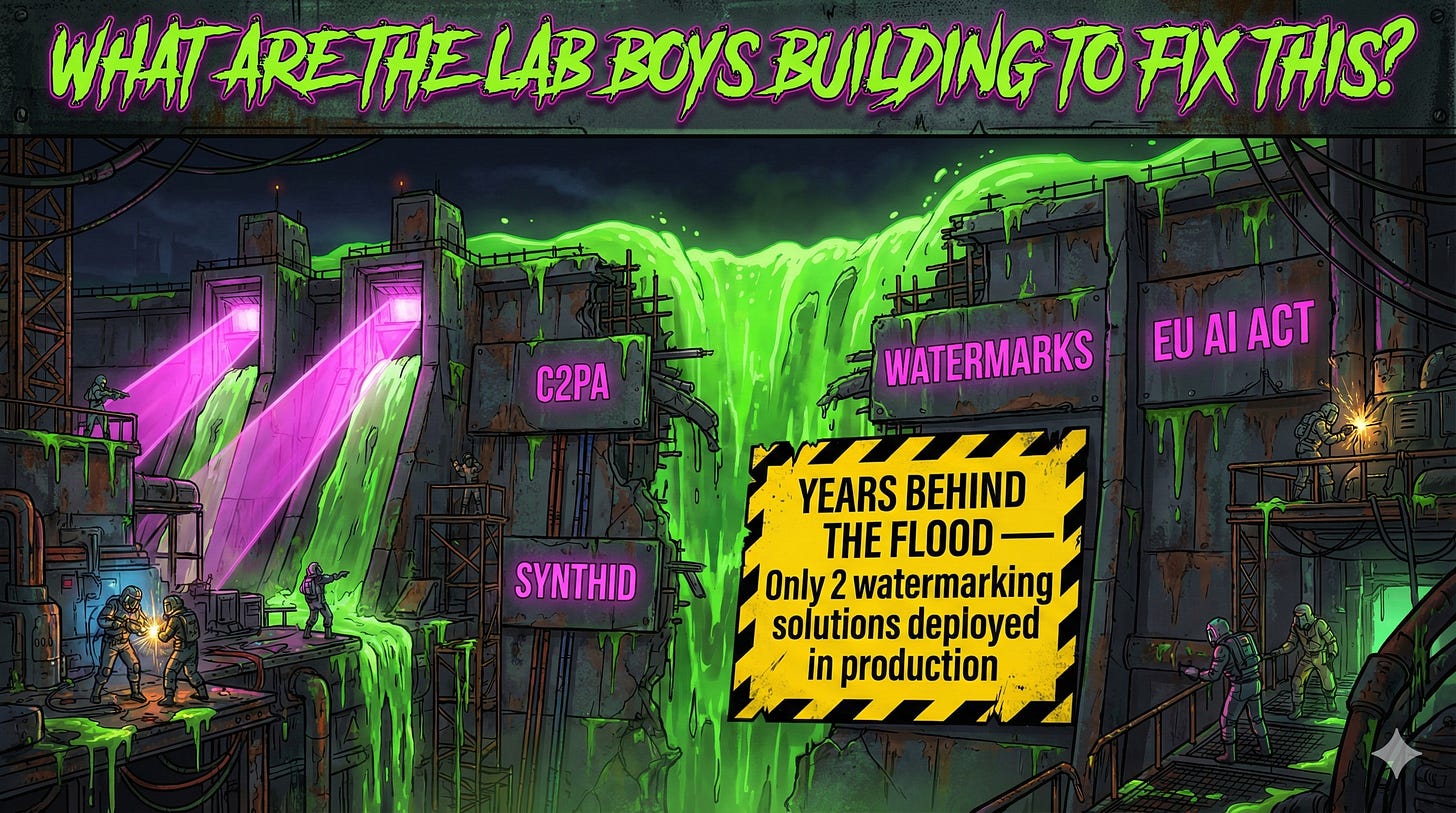

0x04: What Are the Lab Boys Building to Fix This?

The Coalition for Content Provenance and Authenticity (C2PA) is the big play. Over 200 members. Adobe, Microsoft, BBC, Google on the steering committee. The goal is tamper-proof metadata that proves where content came from and what happened to it.

Think of it like chain of custody for media. A cryptographically signed manifest that travels with the file. Edit the image? Sign the edit. AI-generate it? That gets logged. The C2PA specification is expected to become an ISO standard by 2025.
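
To make the chain-of-custody idea concrete, here is a toy signed edit log. It is emphatically not the C2PA format: real manifests use certificate-backed public-key signatures, while this sketch substitutes a shared-secret HMAC from the Python standard library so it runs anywhere. The field names and the demo key are invented for illustration.

```python
import hashlib
import hmac
import json


def _digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def sign_step(chain: list, asset: bytes, action: str, key: bytes) -> list:
    """Return a new chain with one signed provenance entry appended."""
    entry = {
        "action": action,
        "asset_sha256": _digest(asset),
        "prev": chain[-1]["sig"] if chain else None,  # link to the prior entry
    }
    payload = json.dumps(entry, sort_keys=True).encode()
    entry["sig"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return chain + [entry]


def verify_chain(chain: list, asset: bytes, key: bytes) -> bool:
    """Check every signature, every link, and that the last entry matches the asset."""
    prev = None
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "sig"}
        payload = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, entry["sig"]) or entry["prev"] != prev:
            return False
        prev = entry["sig"]
    return bool(chain) and chain[-1]["asset_sha256"] == _digest(asset)


key = b"demo-signing-key"  # placeholder secret for the example
chain = sign_step([], b"original pixels", "captured", key)
chain = sign_step(chain, b"cropped pixels", "cropped", key)

print(verify_chain(chain, b"cropped pixels", key))   # file matches its history
print(verify_chain(chain, b"swapped pixels", key))   # file was changed off the record
```

The point of the structure: you cannot alter the file or rewrite an earlier step without breaking either a hash or a signature somewhere down the chain.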

Google’s SynthID embeds invisible pixel-level watermarks into AI-generated images. Meta released Video Seal in December 2024 for video authenticity at scale. The EU AI Act Article 50 requires labeling AI-generated content.

Content authenticity stack (2025):

- C2PA: Cryptographic provenance manifests

- SynthID: Invisible pixel watermarks (Google)

- Video Seal: Open-source video watermarking (Meta)

- EU AI Act Art. 50: Mandatory labeling requirement

But here’s the catch.

A 2025 study on watermarking adoption found only two instances of invisible watermarking actually deployed in production: Google and Stability AI. Most systems either don’t implement it or implement it badly. Watermarks can be stripped by heavy editing or adversarial attacks. Metadata gets stripped by platforms trying to protect privacy.

The defense exists. The deployment is years behind the flood.

0x05: What Does This Mean for How You Use AI?

The practical reality: AI is an overconfident intern with perfect grammar and zero accountability.

Knowledge workers spend an average of 4.3 hours per week fact-checking AI outputs. 39% of AI-powered customer service bots got pulled back or reworked in 2024 due to hallucination errors. 76% of enterprises now include human-in-the-loop processes specifically to catch hallucinations before deployment.

Here’s your verification protocol:

    # AI output verification workflow
    # (pseudocode: `ai`, `flag_hallucination`, `find_original_sources`,
    # and `compare` are placeholders, not a real API)
    def verify_ai_claim(claim):
        # Step 1: Demand sources
        sources = ai.get_sources(claim)

        # Step 2: Click every link
        for source in sources:
            if not source.exists() or not source.supports(claim):
                flag_hallucination()

        # Step 3: Cross-reference humans
        human_sources = find_original_sources(claim)
        compare(sources, human_sources)

        # Step 4: Argue the opposite
        counterarguments = ai.argue_against(claim)
        # If it can't, the claim is weak
        return counterarguments
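
Step 2 is the part you can automate today. A minimal sketch, assuming you pass in your own `fetch` function (an HTTP client, a cache, whatever you trust) that returns page text, or `None` when the link is dead. The keyword match is a crude stand-in for "supports the claim", and the URLs and page text are invented. A hit means "go read it", not "confirmed".

```python
from typing import Callable, Dict, Iterable, Optional


def check_sources(
    key_terms: Iterable[str],
    urls: Iterable[str],
    fetch: Callable[[str], Optional[str]],
) -> Dict[str, str]:
    """Label each cited URL as 'missing', 'unsupported', or 'supports'."""
    terms = [t.lower() for t in key_terms]
    report = {}
    for url in urls:
        text = fetch(url)  # None means the link does not resolve
        if text is None:
            report[url] = "missing"
        elif all(term in text.lower() for term in terms):
            report[url] = "supports"
        else:
            report[url] = "unsupported"
    return report


# In-memory stand-in for the web so the example runs offline (hypothetical pages).
fake_web = {
    "https://example.org/fares": "Bereavement fares: refund requests must be filed before travel.",
    "https://example.org/baggage": "Checked baggage allowances by cabin class.",
}
cited = [
    "https://example.org/fares",
    "https://example.org/baggage",
    "https://example.org/does-not-exist",
]

for url, status in check_sources(["bereavement", "refund"], cited, fake_web.get).items():
    print(f"{status:12s} {url}")
```

Anything labeled `missing` or `unsupported` is a citation the model may have invented or misread. Check those first.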

Be hyper-specific in your prompts. “What were the quarterly GDP numbers for the US in Q3 2024, according to the Bureau of Economic Analysis?” is verifiable. “How’s the economy?” is an invitation for confident garbage.

The models will improve. The watermarking will expand. The provenance infrastructure will mature. But model collapse behaves like a thermodynamic process: it only runs in one direction. Once synthetic data enters the training pipeline at scale, you can’t fully separate it. The tails are already gone.

What’s left is vigilance. Verify everything. Trust nothing at face value. The machines aren’t lying to you. They’re doing exactly what they were built to do. The architecture just doesn’t include a concept of truth.


Grievances

Isn’t this just fear-mongering? AI content isn’t all bad.

Nobody said it was. AI-generated content can be useful, efficient, even good. The problem is training on it recursively without filtering. You can use synthetic data responsibly if you keep human-verified data in the mix and track provenance. Most pipelines don’t. That’s the gap.
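
You can watch the difference in a toy setting. This is a sketch, not a result from any paper: a Gaussian is refit generation after generation, once on purely synthetic samples and once with half of each batch drawn fresh from the original distribution. The 50% mix, sample size, generation count, and seed are arbitrary assumptions.

```python
import random
import statistics


def refit_loop(real_fraction, generations=300, sample_size=20, seed=0):
    """Return the fitted std after repeatedly refitting on a real/synthetic mix."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the original "human" distribution is N(0, 1)
    n_real = round(real_fraction * sample_size)
    for _ in range(generations):
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_real)]
        data = real + synthetic
        mu, sigma = statistics.fmean(data), statistics.pstdev(data)
    return sigma


print(f"pure synthetic loop: final std = {refit_loop(0.0):.3g}")
print(f"half real data kept: final std = {refit_loop(0.5):.3g}")
```

The fresh samples keep re-injecting the spread that the recursive loop throws away. The hard part in a real pipeline is knowing which samples are the real ones.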

Can’t we just detect AI content and filter it out of training data?

Detection accuracy isn’t good enough. Ahrefs found their detector mislabeled human content as AI 4.2% of the time. At scale, that’s millions of false positives. And adversarial actors are already using “humanizing” tools to evade detection. The arms race favors the generators, not the detectors.
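
The "millions" figure is plain multiplication. A quick sketch using the 4.2% rate quoted above; the corpus sizes are hypothetical round numbers, not measurements of any real crawl.

```python
def false_positives(human_pages: int, false_positive_rate: float) -> int:
    """Human-written pages a detector would wrongly discard as AI."""
    return round(human_pages * false_positive_rate)


FALSE_POSITIVE_RATE = 0.042  # the Ahrefs figure cited in the text

for human_pages in (1_000_000, 100_000_000, 1_000_000_000):
    wrongly_dropped = false_positives(human_pages, FALSE_POSITIVE_RATE)
    print(f"{human_pages:>13,} human pages -> {wrongly_dropped:>11,} wrongly dropped")
```

Every one of those is human-written text removed from the training set, which is exactly the material a collapse-resistant pipeline needs to keep.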

What about curated datasets? Can’t companies just avoid the polluted web?

Some are trying. The Data Provenance Initiative tracks dataset lineage. But the economics push the other way. Web scraping is cheap. Human curation is expensive. And the models that train on more data tend to perform better on benchmarks. Until the market prices in data quality, the incentive is to scrape everything.

See, this is what I'm talking about. People joke about Skynet, robots killing and enslaving humans, or taking our jobs (though that's happening), but the real danger is never the massive, ominous change. It's the creeping change that's worrying, quiet unseen events like this. Incremental, practical change is far more insidious than loud, sudden change. It's never witnessed, and it's the kind of thing where you smack your head and go, "Ah! Why didn't I see that?" It makes sense too, with the massive private investment in A.I. in America that utterly dwarfs the rest of the world. A.I. training A.I., compounding the problem and creating a daisy chain effect, is the kinda nightmare fuel you don't see until it's too late.

This breakdown of model collapse is crucial. Emphasizing authenticated datasets and human-in-the-loop systems is the only way to maintain quality and trust in AI outputs as the feedback loop intensifies.