TL;DR: AI image generators were built on a scraped dataset of 5.8 billion images, including thousands of private medical photos that should never have been online. One artist found her own post-surgery photos in LAION-5B. Her late doctor had taken them under a signed consent form restricting their use to her medical records. The market for AI training data is projected to hit $30 billion by 2030. Consent is optional. Removal is nearly impossible.

Just because data ended up online doesn’t mean it was supposed to be public, and it definitely doesn’t mean you consented to training the machine that replaces you.

Why Do We Still Think “Public Data” Means We Consented?

Here’s the comfortable myth: if something’s technically accessible on the internet, it’s fair game for AI training. Public equals consensual, right? That’s the story AI companies tell themselves while scraping billions of images, emails, and documents to build models worth hundreds of billions of dollars.

Lapine, a California artist, found out how that story falls apart in 2022. She uploaded a photo of herself to “Have I Been Trained,” a tool that checks if your work ended up in AI training datasets. What she found wasn’t her art. It was two private medical photos her doctor took in 2013 following surgery to treat dyskeratosis congenita. The photos showed her face, post-operative, and were covered by an explicit consent form. The form said one thing clearly: medical records only.

The surgeon died in 2018. Somehow, those photos left his files, ended up online, and got scraped into LAION-5B, a dataset of 5.8 billion image-text pairs used to train Stable Diffusion. (Google’s Imagen was trained partly on its smaller predecessor, LAION-400M.) Ars Technica confirmed her case and found thousands of similar private medical photos in the same dataset.

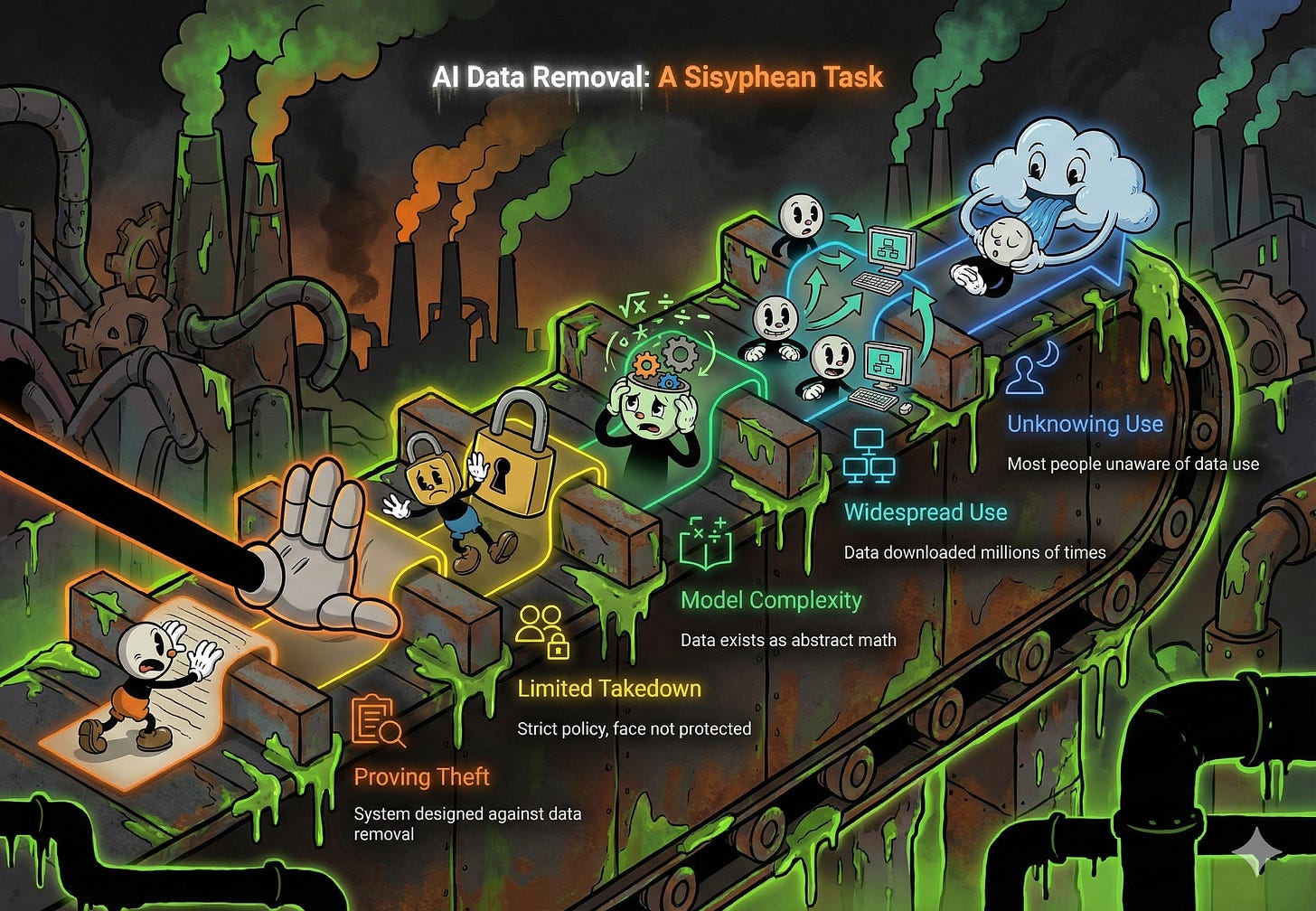

When Lapine asked LAION to remove her images, they told her: we only provide links to images, not the images themselves. Contact whoever posted them originally. The doctor’s dead. The photos were likely stolen from his files. And LAION’s position is simple: not our problem.

If you know someone who uploads medical photos to patient portals, send them this. Those “secure” systems get breached, and once images leak, nothing stops them from being scraped into the next dataset.

When Did Your Resume Become a Training Asset?

The game changed when data brokers realized they could sell scraped content directly to AI companies. We’re talking private messages, job applications, medical disclosures. A company called Defined.ai openly sells datasets including 750 million Facebook photos and 25 million TikTok videos at $1.15 per video. That’s your forgotten social media content being sold to train models without your knowledge.

The AI training data market is projected to grow from $2.5 billion in 2024 to $30 billion by 2030. And here’s the kicker: LinkedIn started training AI on its users’ data before it even updated its terms of service. Users got an opt-out toggle, but it only stops future training, not what had already happened. Meta, OpenAI, Anthropic, Microsoft, Google... they’re all customers in this market, one way or another.
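For scale, those two projections imply roughly 51% growth per year, compounded. This is just the standard compound annual growth rate (CAGR) formula applied to the quoted figures, assuming six years of growth from 2024 to 2030 and amounts in billions of dollars:

$$
\text{CAGR} = \left(\frac{30}{2.5}\right)^{1/(2030-2024)} - 1 = 12^{1/6} - 1 \approx 0.51
$$

Put plainly, the projection has the market multiplying twelvefold in six years.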

Researchers auditing DataComp CommonPool, one of the largest public AI training datasets with 12.8 billion image-text pairs, examined just 0.1% of the data and still found thousands of images of identity documents. Passports. Credit cards. Driver’s licenses. Over 800 job applications with resumes and cover letters, verified through LinkedIn searches. Scaled up to the full dataset, they estimated that images containing personal information number in the hundreds of millions.

This data was scraped between 2014 and 2022, almost all of it before ChatGPT existed. Nobody whose resume ended up in that scrape could have consented to generative AI training, because the technology wasn’t even public yet.
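It helps to run the audit numbers. Here is a quick back-of-the-envelope sketch in Python: 0.1% of 12.8 billion pairs is 12.8 million, so any count in the hundreds of millions has to be an extrapolation to the whole dataset, not something found directly in the sample. The helper names are made up for illustration, the hit count is a placeholder rather than a figure from the audit, and the linear scale-up assumes the sample is representative.

```python
# Back-of-the-envelope check on the CommonPool audit figures.
# 0.1% of the dataset means 1 in every 1,000 image-text pairs.
TOTAL_PAIRS = 12_800_000_000   # DataComp CommonPool size, as quoted in the article
SAMPLE_DENOMINATOR = 1_000     # 0.1% == 1/1000 (integer math avoids float rounding)


def sample_size(total: int, denominator: int) -> int:
    """Number of pairs in a 1/denominator random sample."""
    return total // denominator


def extrapolate(count_in_sample: int, denominator: int) -> int:
    """Naive linear scale-up; only valid if the sample is representative."""
    return count_in_sample * denominator


if __name__ == "__main__":
    n = sample_size(TOTAL_PAIRS, SAMPLE_DENOMINATOR)
    print(f"pairs actually audited: {n:,}")  # 12,800,000

    # Hypothetical placeholder count, NOT a number from the audit.
    hypothetical_hits = 5_000
    print(f"scaled to full dataset: {extrapolate(hypothetical_hits, SAMPLE_DENOMINATOR):,}")  # 5,000,000
```

The same logic runs in reverse: every item found in the sample stands in for roughly a thousand more in the full dataset.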

Subscribe before the next data breach makes this personal. The companies scraping your life aren’t slowing down, and there’s no undo button for what’s already in the models.

Why Can’t You Just Opt Out?

Here’s the uncomfortable truth nobody wants to say out loud: consent is a fiction.

You can’t consent to technology that doesn’t exist yet. Data scraped in 2015 couldn’t carry your blessing for AI training in 2024. And even if you could somehow reach back in time, opting out of one dataset does nothing. Your data has likely already propagated into downstream datasets and models. Deletion from one place is meaningless when copies exist everywhere.

LAION’s removal policy is a perfect example. You can request takedown if your image links to identifiable data like your name, phone number, or address. But if it’s just your face? Just your medical history without metadata attached? You’re out of luck. They reject it. And even if they remove the link from their index, that doesn’t touch the models already trained on it or the copies researchers have already downloaded.
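LAION’s “we only provide links” answer makes more sense once you see what a dataset row is: a URL plus the caption text scraped alongside it, not the image itself. Here is a minimal Python sketch of that structure and of the removal rule described above. `Pair`, `request_takedown`, and the `has_identifying_metadata` flag are hypothetical names for illustration, not LAION’s schema or code. Trained model weights aren’t modeled at all, which is the point: nothing in an index takedown can reach them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    url: str       # where the image lives; the index stores this link, not the pixels
    caption: str   # alt text scraped alongside the image
    has_identifying_metadata: bool  # hypothetical flag: name/phone/address attached


def request_takedown(index: list[Pair], url: str) -> list[Pair]:
    """Hypothetical model of the policy described in the article: drop the link
    only if identifying metadata is attached; otherwise reject the request."""
    matches = [p for p in index if p.url == url]
    if not matches or not any(p.has_identifying_metadata for p in matches):
        return index  # rejected: e.g. a face or medical photo with no name attached
    return [p for p in index if p.url != url]


if __name__ == "__main__":
    index = [
        Pair("https://example.com/a.jpg", "post-op photo", False),
        Pair("https://example.com/b.jpg", "Jane Doe, 555-0100", True),
    ]
    researcher_copy = list(index)  # a copy someone downloaded before any takedown

    index = request_takedown(index, "https://example.com/a.jpg")
    print(len(index))            # 2: rejected, no identifying metadata
    index = request_takedown(index, "https://example.com/b.jpg")
    print(len(index))            # 1: link removed from the index
    print(len(researcher_copy))  # 2: the downloaded copy is untouched
```

Even the successful takedown only edits one list. Every earlier copy, and every model trained on one, stays exactly as it was.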

In November 2025, a lawsuit accused Google of flipping the script on privacy defaults. It alleged that Gemini was quietly switched on for U.S. users of Gmail and Workspace, with access to emails and attachments unless you hunted down the Smart Features toggles and turned them off. Google disputes the claims and says it does not use Gmail content to train Gemini. Either way, most people never knew the setting was on.

Share ToxSec with anyone still trusting “private” settings. The default is exploitation. The opt-out is hidden. And the data’s already gone.

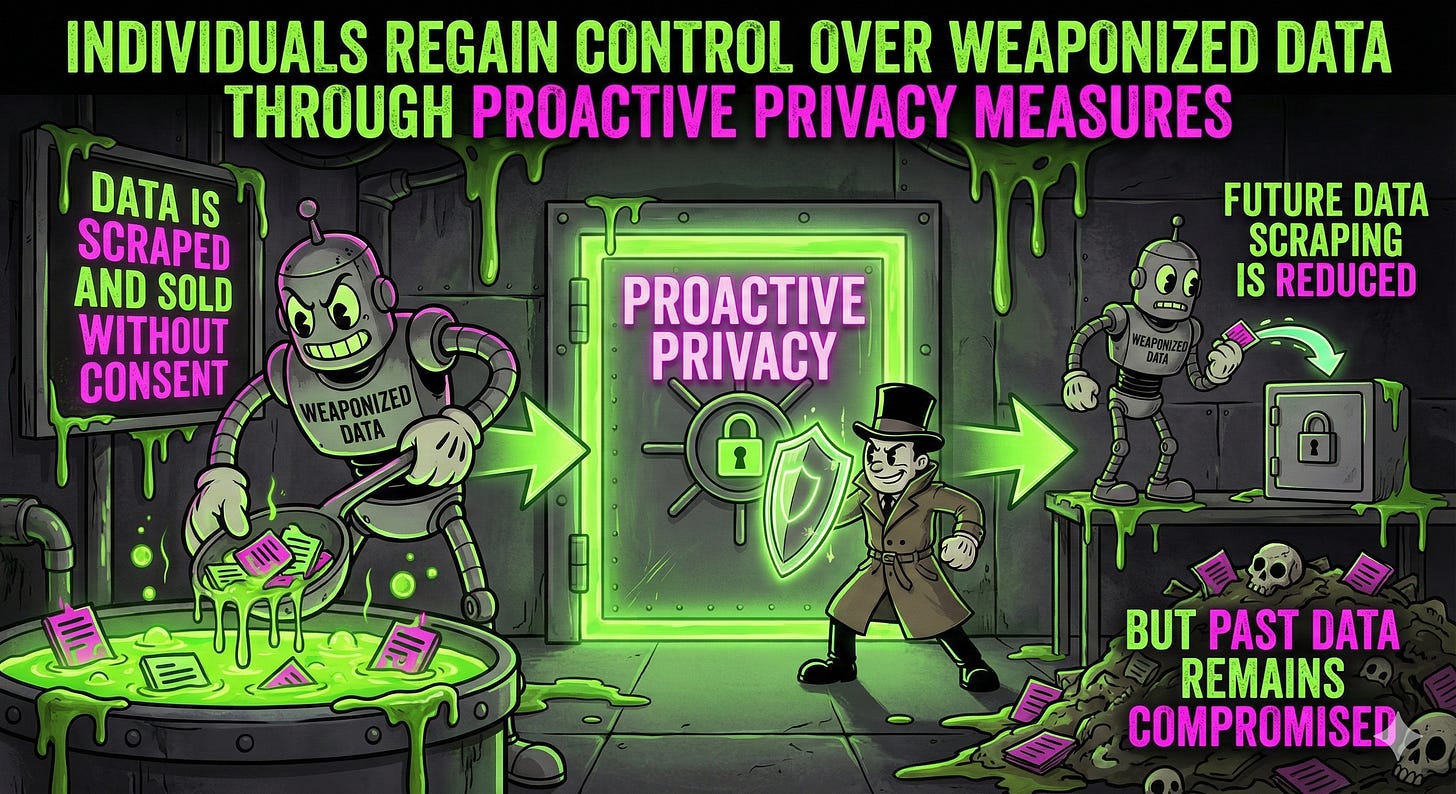

What Can You Actually Do About This?

Not much. But here’s what little ground you can hold.

First: use “Have I Been Trained” (haveibeentrained.com) to check if your images are in LAION. For Gmail, disable “Smart Features” in both Gmail settings AND Google Workspace settings. Miss one, and Gemini still accesses your emails.

Second: assume you’re already opted in everywhere. LinkedIn: Data Privacy > “Data for Generative AI improvement” > toggle off. Meta products? There’s no opt-out in most regions. Google’s “smart features” are auto-enabled in the U.S.

Third: for any platform with AI features, assume you’re opted in by default and the opt-out is buried. Hunt for the toggle before assuming you’re protected.

The constraint you’re working within is brutal. You’re fighting a market projected to hit $30 billion, backed by legal teams that have already decided your consent is optional. The EU’s transparency requirements won’t stop companies from training on data they’ve already collected. Even if you successfully request removal, you can’t undo what’s already in the model. Limit future exposure. Hope regulatory pressure forces actual accountability.

Forward this to your company’s legal team. The liability exposure from using models trained on stolen data is just starting to materialize, and most orgs have no idea what’s in their supply chain.

Frequently Asked Questions

Q: Can I really opt out of AI training on my data? A: Partially. You can opt out of future collection on Gmail and LinkedIn by finding hidden toggles. But data that’s already been scraped? That’s in the training set permanently.

Q: What if my private photos are already in a training dataset? A: LAION allows removal if your image links to your name or address. Just your face? They reject it. And removal from their index doesn’t touch the trained models or the copies people have already downloaded.

Q: Are companies legally allowed to scrape my data? A: U.S. companies claim fair use. Courts are divided. The EU has stronger protections. For now, companies scrape first and deal with consequences later.